Why Read this Guide?

This guide serves as a crucial companion for cybersecurity professionals, offering an in-depth understanding of how to effectively prioritize vulnerabilities in the digital landscape.

Crafted by thought leaders in this space, the guide distills years of experience, data analysis, and interactions with standards groups and tool vendors into a practical, pragmatic, user-centric approach to cybersecurity. It illuminates the complexities of managing the ever-growing list of vulnerabilities and provides actionable strategies for mitigating real-world risks.

Through a blend of theoretical insights and real-world applications, this guide emphasizes the significance of not relying solely on conventional scoring systems like CVSS but adopting a multifaceted strategy that includes CISA KEV and EPSS for a more nuanced and effective prioritization process.

Whether you're a cybersecurity novice or a seasoned expert, this guide equips you with the knowledge and tools to navigate the treacherous world of cybersecurity threats. It is an indispensable resource for enhancing your organization's security posture and fostering a culture of proactive risk management.

Introduction ↵

Forewords¶

Jay Jacobs¶

A witty quote attributed to several notable figures, including Niels Bohr, Yogi Berra and a Dutch politician, states, "It is difficult to make predictions, especially about the future." While an entertaining turn of phrase, this is exactly what every security practitioner is being asked to do every day. Every security control, every framework we follow, every patch we prioritize is a prediction about the future. It is a statement saying that, out of all the possible ways we could improve security, we are predicting these actions will yield the best possible future.

The word "prediction" may carry a negative connotation for some, as it conjures up visions of charlatans with crystal balls. But whether you prefer forecasting, prognostication or following your gut doesn't really matter. The fact remains that we must make decisions every day about the future, and we typically make those decisions with incomplete information.

Everyone knows that you probably should not take a card on 17 in blackjack, and that betting on a single number in roulette is a long shot. But why? The short answer is that the odds are against a positive outcome with those decisions. Yes, it is possible to get 21 by hitting on 17, and single numbers are paid out in roulette. In the long run, though, the more a player can play the odds, the better they do over time. Prediction is difficult, and being correct every single time about future events is just not feasible. But digging into the data and pulling out the important signals can make your decisions better today than they were yesterday. That's what this framework is attempting to give practitioners - an approach to reduce your uncertainty around vulnerability prioritization and push you closer towards playing the odds.

Patrick Garrity¶

Chris Madden continues to make significant contributions to the security community, having previously shared Yahoo's innovative approach to optimizing DevOps pipelines at BSides Dublin. In this guide, Chris presents a practical and data-driven approach to risk-based prioritization of vulnerabilities.

Within these pages, readers will discover valuable insights from a practitioner's perspective, empowering them to make informed risk decisions, prioritize CVEs effectively, and implement these principles within their own environments.

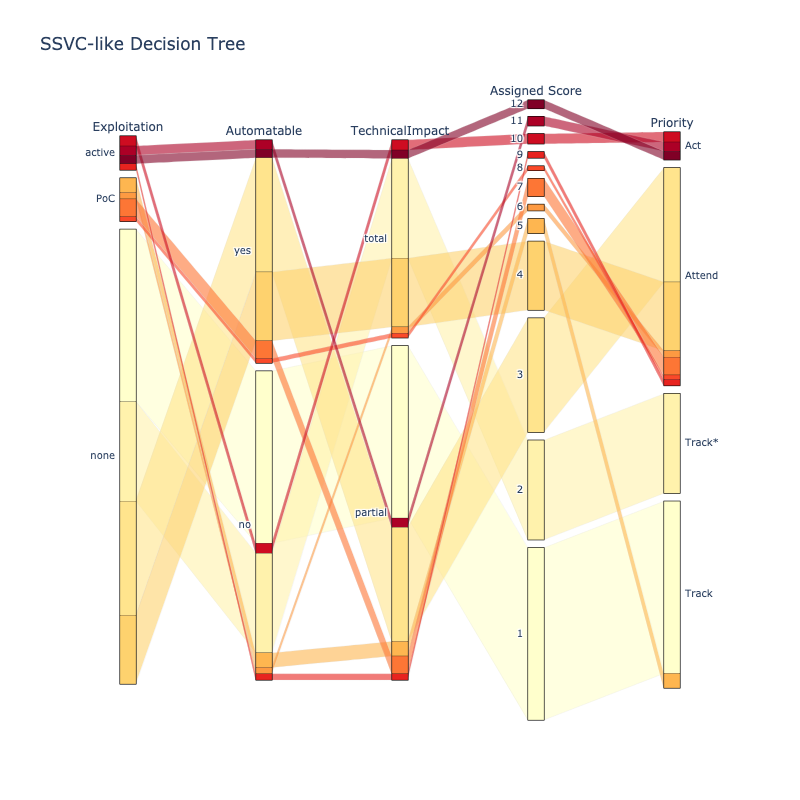

Chris skillfully combines human-based logic, represented by decision trees aligned with Stakeholder-Specific Vulnerability Categorization (SSVC), with established open standards such as the Common Vulnerability Scoring System (CVSS), CISA Known Exploited Vulnerabilities (CISA KEV), and the Exploit Prediction Scoring System (EPSS). By doing so, he equips readers with a comprehensive understanding of how to leverage these standards effectively.

With a meticulous emphasis on data-driven analysis and recommendations, Chris provides depth and clarity that are essential for any modern vulnerability management program. This guide is an indispensable resource for security professionals seeking to enhance their approach to risk prioritization.

Francesco Cipollone CEO & Founder Phoenix Security ¶

Security practitioners are currently inundated by vulnerabilities, and the current state of prioritization offers no guideline on how to fix vulnerabilities or where to focus.

This guide is a brilliant starting point for any practitioner that wants to apply prioritization techniques and start making data driven and risk driven decisions.

As a practitioner myself, I wish I had such a guiding group and guidance when leading vulnerability management efforts.

Special Kudos to the EPSS group and Chris Madden driving the initiative with an unbiased view and a clean, clear data driven approach.

Preface¶

I embarked on this journey because, as a Product Security engineer, my role is to enable the flow of value to our customers by helping deliver high quality software efficiently and securely.

A large part of that was to be able to prioritize the ever increasing number of published vulnerabilities (CVEs) by Real Risk.

Lots of dumb questions and data analysis later, and experience deploying Risk Based Prioritization at scale in production, this guide represents the distillation of that knowledge into a user-centric system view - what I wish I knew before I started - and learnt by interacting with other users, standards groups, and tool vendors.

The Risk Based Prioritization described in this guide significantly reduces the

- cost of vulnerability management

- risk by reducing the time adversaries have access to vulnerable systems they are trying to exploit

Thanks to:

- My family - who give life to living

- My employer Yahoo for cultivating such a rich environment for people to thrive.

- My colleague Lisa for the expert input, keeping all this real, and tolerating more dumb questions than any human should endure in one lifetime!

- The friendly vibrant community in this space - many of whom have contributed content to this guide.

Introduction¶

About this Guide

This Risk Based Prioritization Guide is a pragmatic user-centric view of Relative Risk per Vulnerability, the related standards and data sources, and how you can apply them for an effective Risk Based Prioritization for your organization.

It is written by, or contributed to by, some of the thought leaders in this space for YOU.

CISA, Gartner, and others, recommend focusing on vulnerabilities that are known-exploited as an effective approach to risk mitigation and prevention, yet very few organizations do this.

Maybe because they don't know they should, why they should, or how they should? This guide will cover all these points.

After reading this guide you should be able to

- Understand Risk

- the main standards and how they fit together

- the key risk factors, especially known exploitation or likelihood of exploitation

- Prioritize CVEs by Risk

- apply this understanding to Prioritize CVEs by Risk for your organization resulting in

- a significant reduction in your security effort

- a significant improvement in your security posture by remediating the higher risk vulnerabilities first

- Apply the provided guidance to your environment

- the source code used to do much of the analysis in this guide is provided - so you can apply it to your internal data

- see what other users and tool vendors are doing for Risk Based Prioritization, so you can compare it to what you're doing

- ask more informed questions of your tool/solution provider

Overview¶

The guide covers:

- Risk

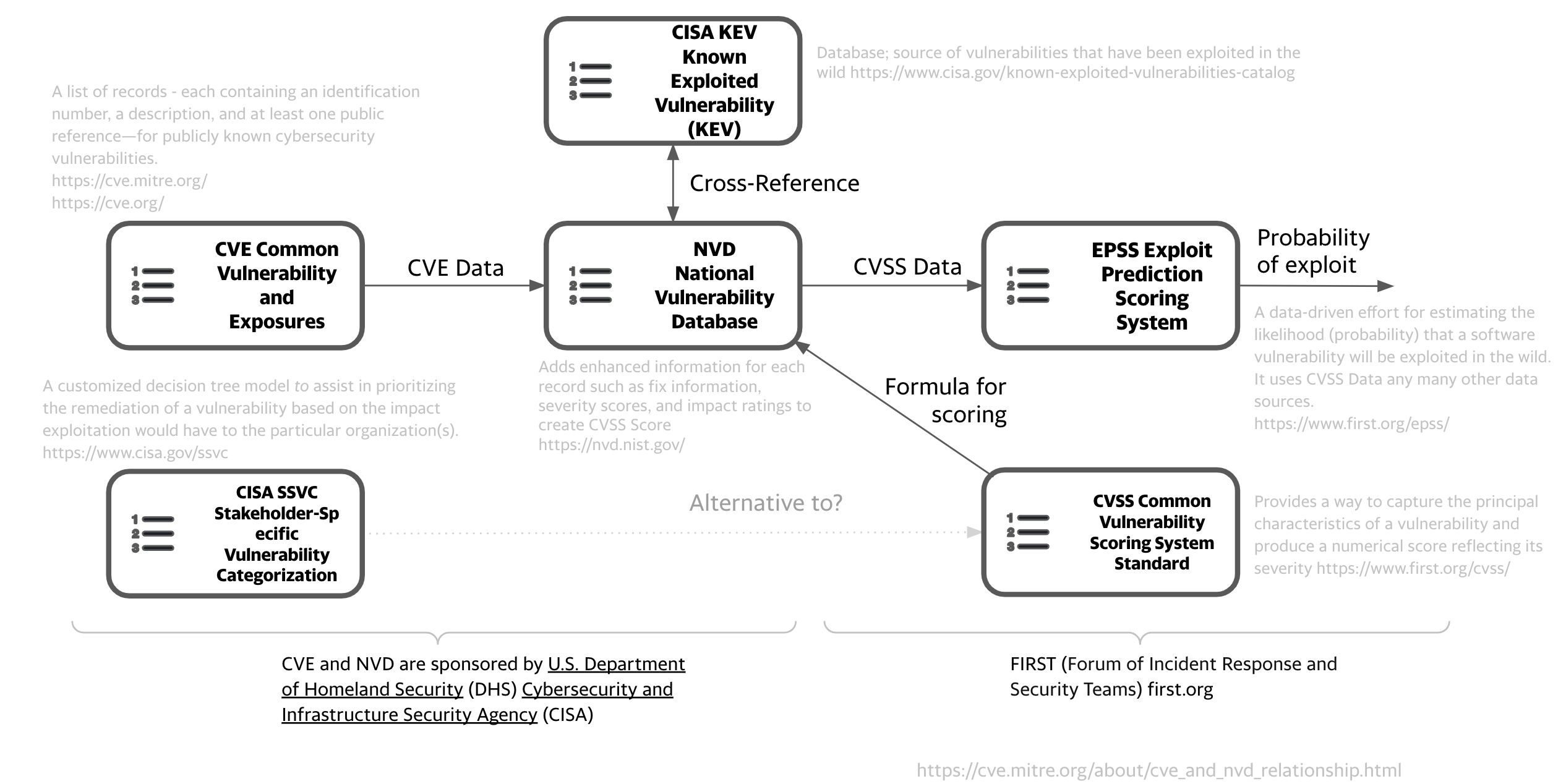

- The Vulnerability Landscape covering the main standards and how they fit together

- What these standards and risk factors look like for different populations of CVEs

- How to use the standards and data sources in your environment

- How the standards and data sources are being used, with real examples

- How some vendors are using them in their tools

- How some users are using them in their environments

- Applying all this for Risk Based Prioritization

- Showing 3 different Risk Based Prioritization schemes with data and code.

The guide includes Applied material

An icon on a menu item indicates that the content is more hands-on, applying the content from the guide.

"🧑💻 Source Code" on a page is a link to the source code used to generate any plots or analysis on public data.

Intended Audience¶

The intended audience is people in these roles:

- Product Engineer: the technical roles: Developer, Product Security, DevSecOps

- Security Manager: the non-technical business roles: includes CISO

- Cyber Defender: network defenders, IT/infosec

- Tool Provider: tool vendors, open source tools, ...

This is a subset of the Personas/Roles defined in the Requirements chapter.

No prior knowledge is assumed to read the guide - it provides just enough information to understand the advanced topics covered.

A basic knowledge of Python and Jupyter notebooks is required to run the code (with the data provided or on your data).

How to Use This Guide¶

- For the short version, read these sections and understand the Risk Based Prioritization Models:

- Risk section

- Risk Based Prioritization Schemes is a Colab notebook comparing 3 different Risk Based Prioritization Schemes by applying them to all CVEs

- For a deeper understanding read the full guide.

- To get the most out of this guide, also play with the code

- ACME is a Colab notebook that can be used to analyze any list of CVEs.

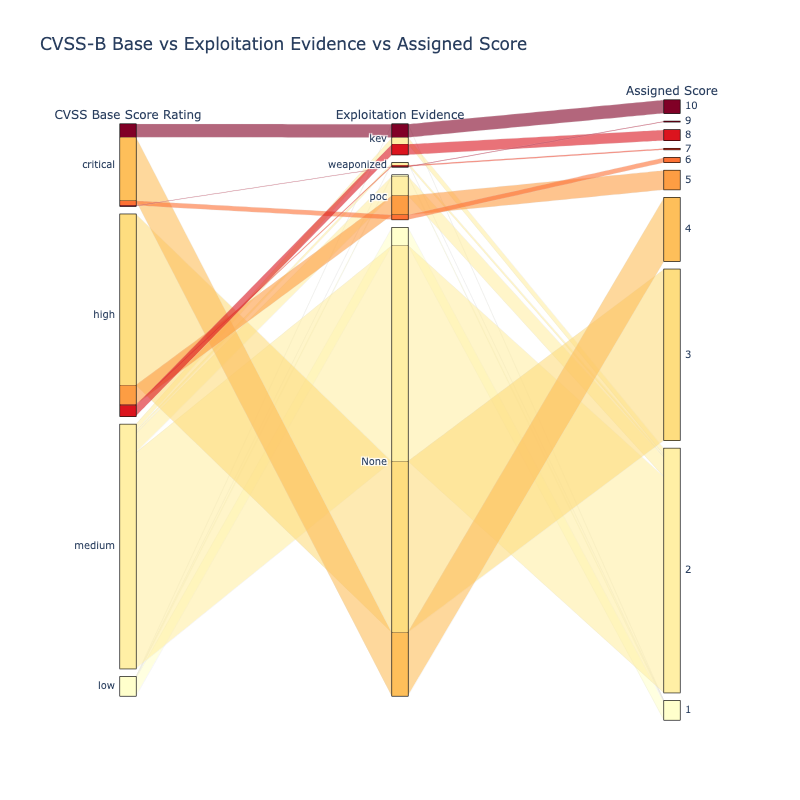

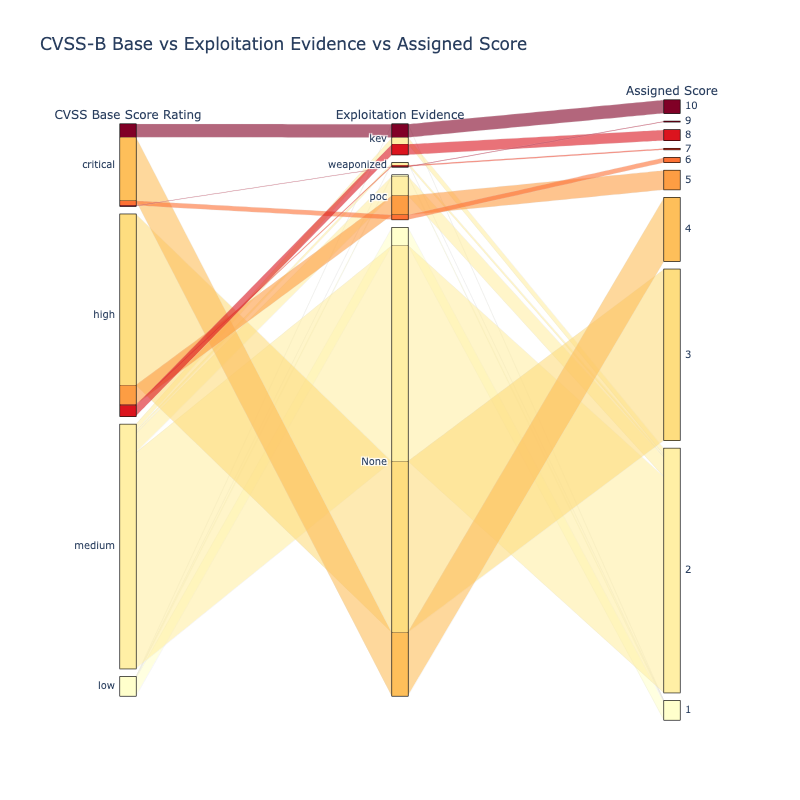

Each of the Risk Based Prioritization Models above uses similar risk factors (known exploitation and likelihood of exploitation, with variants of CVSS base metrics parameters or scores) but in very different ways to rank/score the risk/priority. The outcome is the same - a more granular prioritization at the high end of risk than offered by CVSS Base Score.

If you're looking for the "easy button", or the one scheme to rule them all for Risk Based Prioritization, you won't find it (here or anywhere else).

Who Contributed to This Guide¶

Various experts and thought leaders contributed to this guide, including those that:

- developed the standards or solutions described in this guide as used in industry

- have many years of experience in vulnerability management across various roles

How to Contribute to This Guide¶

You can contribute content or suggest changes:

- Suggest content

- Report Errors, typos

- If you're a tool/solution vendor, and would like to provide anonymized, sanitized data - or what scoring system you use and why

- If you'd like to share what your organization is doing (anonymized, sanitized as required) as a good reference example

Writing Style¶

The "writing style" in this guide is succinct and leads with an opinion, with data and code to back it up: data analysis plots (with source code where possible), plus observations and takeaways that you can assess and apply to your own data and environment. This allows the reader to assess the opinion along with the code, data, and rationale behind it.

Different, and especially opposite, opinions with the data to back them up, are especially welcome! - and will help shape this guide.

Quote

If we have data, let’s look at data. If all we have are opinions, let’s go with mine.

Source Code¶

- See Source Code for the code

- This includes the data used in the analysis (downloaded Jan 13) and how to download it

- This code is licensed under the Apache 2 Open Source License.

Alternative or Additional Guidance¶

This guide is not an introductory or verbose treatment of topics with broader or background context. For that, consider the following (no affiliation to the authors):

- Effective Vulnerability Management: Managing Risk in the Vulnerable Digital Ecosystem

- Software Transparency: Supply Chain Security in an Era of a Software-Driven Society

- Software Supply Chain Security: Securing the End-to-end Supply Chain for Software, Firmware, and Hardware

Notes¶

Notes

- This guide is not affiliated with any Tool/Company/Vendor/Standard/Forum/Data source.

- Mention of a vendor in this guide is not a recommendation or endorsement of that vendor.

- The choice of vendors was determined by different contributors who had an interest in that vendor.

- This guide is a living document i.e. it will change and grow over time - with your input.

- You are responsible for the prioritization and remediation of vulnerabilities in your environment and the associated business and runtime context which is beyond the scope of this guide.

Contributors¶

Thanks to all who contributed!

Many experts volunteered their time and knowledge to this guide - and for that we all benefit and we're truly grateful!

In alphabetical order by first name:

- Allen Householder, CERT

- Aruneesh Salhotra

- Buddy Bergman

- Casey Douglas

- Chris Madden, Yahoo

- Christian Heinrich

- Denny Wan

- Derrick Lewis

- Eoin Keary, Edgescan

- Jay Jacobs, Cyentia, EPSS Co-creator

- Jeffrey Martin

- Jerry Gamblin

- Jonathan Spring, CISA

- Joseph Manahan

- Maor Kuriel

- Patrick Garrity, VulnCheck

- Sasha Romanosky, EPSS Co-creator, CVSS author

- Stephen Shaffer, Peloton

- Steve Finegan

Scope¶

Scope of this Guide

The scope for this guide is

- CVEs only - not vulnerabilities that don't have CVEs, not Zero Days (until they get a CVE)

- Per Vulnerability - not asset/business/runtime/other organization-specific context

CVEs¶

Not all vulnerabilities or exploits have CVEs.

However, many do, and we can use the data associated with a CVE for Risk Based Prioritization.

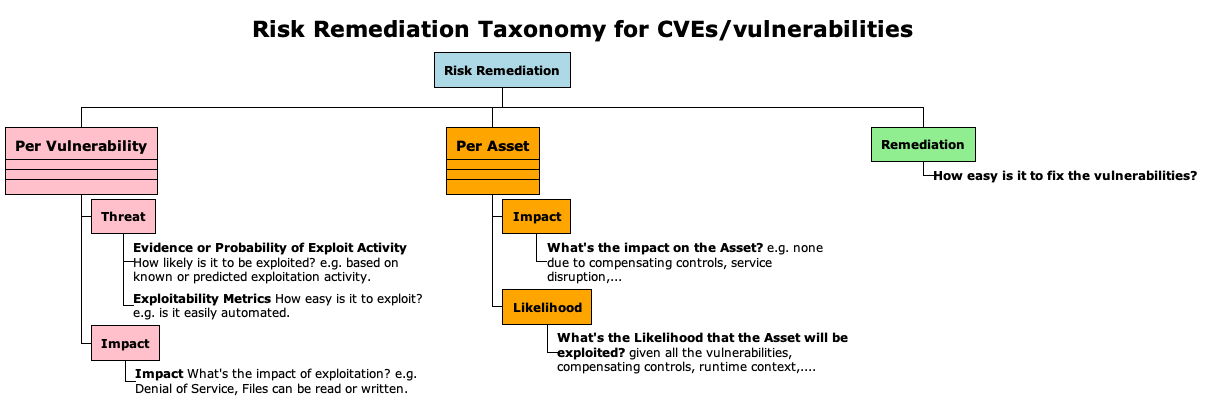

Per Vulnerability¶

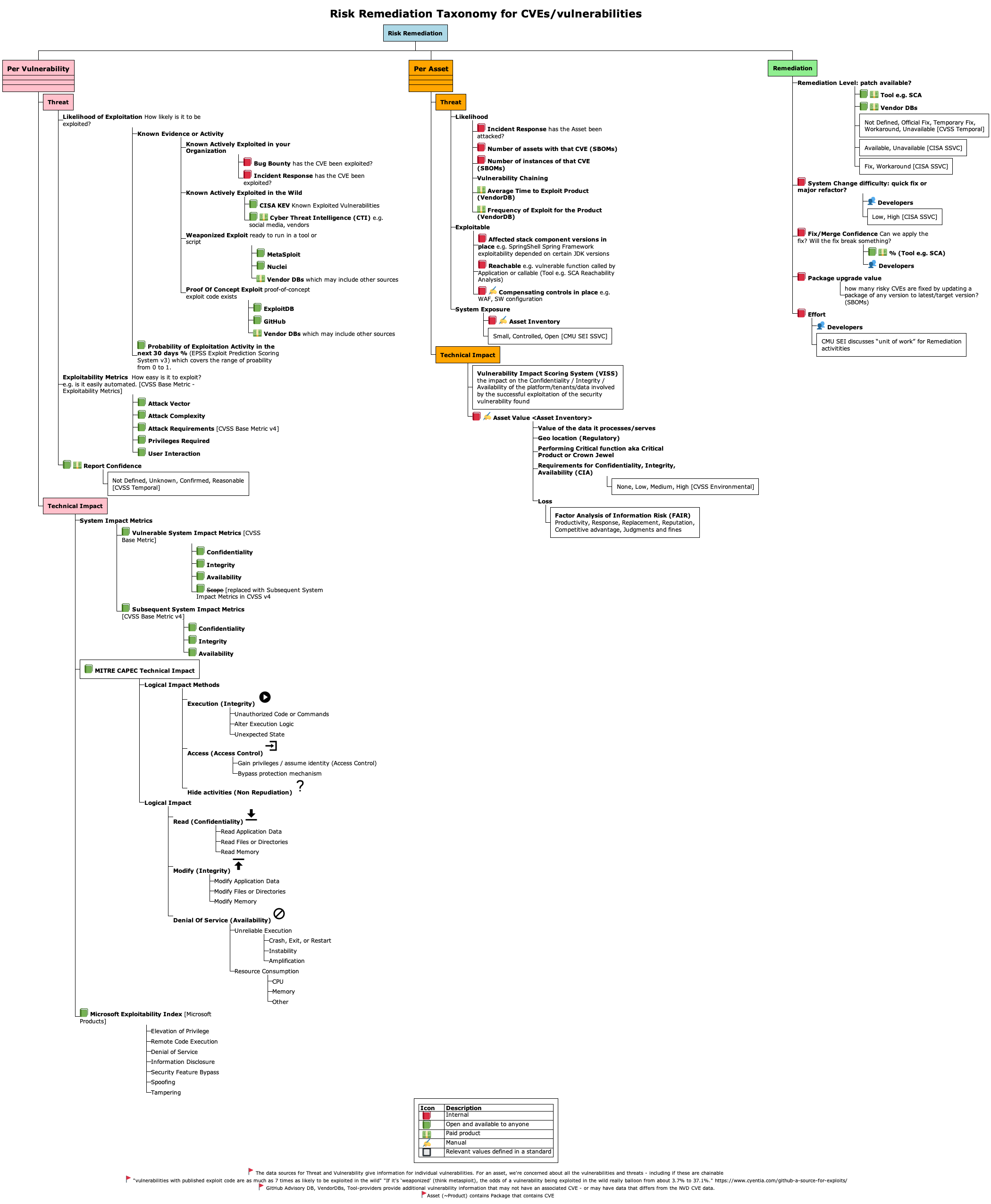

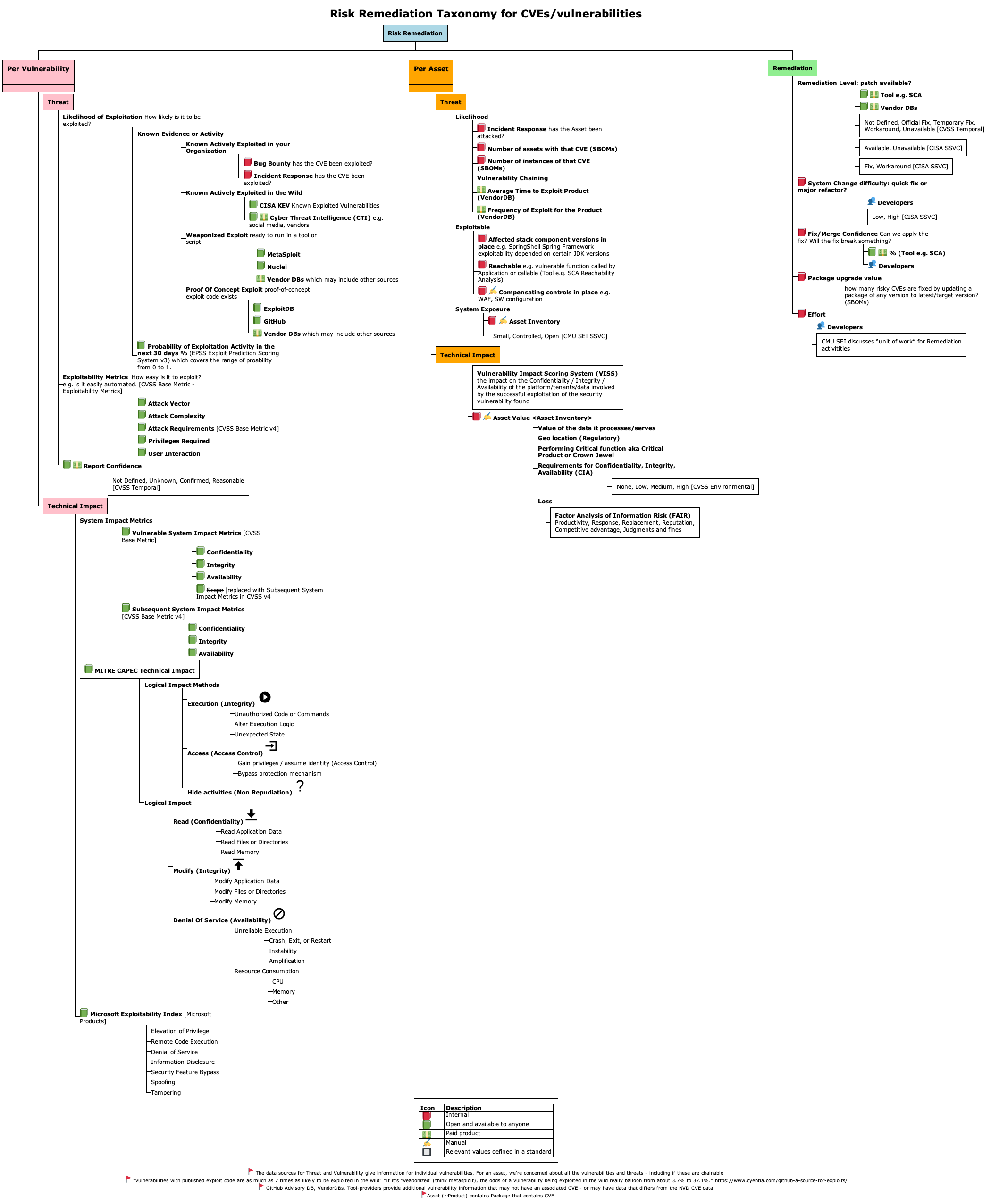

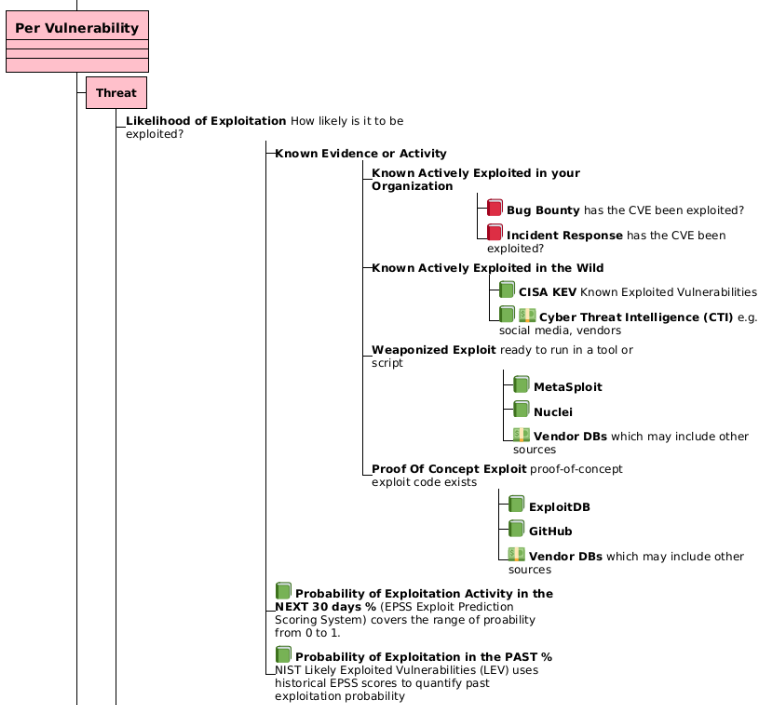

The scope is the "Per Vulnerability" branch in the diagram: generic vulnerability data, not the organization-specific context.

A different way of looking at this is that this guide (and the prioritization schemes herein), can be used as a first pass triage and prioritization of vulnerabilities, before the overall asset-specific business and runtime context, and remediation context, is considered, and all the context-specific dependencies that go with that.

In other words, Relative Risk per vulnerability.

Having good security hygiene, and a good understanding of your data, is recommended independent of Risk Based Prioritization

Independent of the Risk Based Prioritization in this guide:

- Good Security hygiene e.g. keeping software up to date, is effective in preventing and remediating vulnerabilities.

- Understanding your vulnerability data, and root causes, should inform your remediation.

See where this guide fits in terms of overall Risk Remediation

A more complete picture of Risk Remediation, including the Asset and Remediation branches, is shown below.

- While these branches are out of scope for this guide, they may be useful as a reference for your Risk Remediation.

Source Code and Data¶

Overview

This section gives an overview of the data and the notebooks (code + documentation) for the analysis that is part of this guide:

- Running the Code - the environments in which it can be run

- Data Sources - the public data sources used

- Analysis - the code for the analysis, the output of which is used in this guide

Running the Code¶

The source code provided with this guide is available as Jupyter Notebooks.

These can be run

- locally or offline (requires that you have a Jupyter Notebooks environment set up)

- online via browser in Colab. (No environment setup required)

The source code notebooks are available in Colab to run from your browser

Quote

Colab, or "Colaboratory", allows you to write and execute Python in your browser, with

- Zero configuration required

- Access to GPUs free of charge

- Easy sharing

Data Sources¶

| Data Source | Detail | ~~ CVE count K |

|---|---|---|

| CISA KEV | Active Exploitation | 1 |

| EPSS | Predictor of Exploitation | 220 |

| Metasploit modules | Weaponized Exploit | 3 |

| Nuclei templates | Weaponized Exploit | 2 |

| ExploitDB | Published Exploit Code | 25 |

| NVD CVE Data | NVD CVEs | 220 |

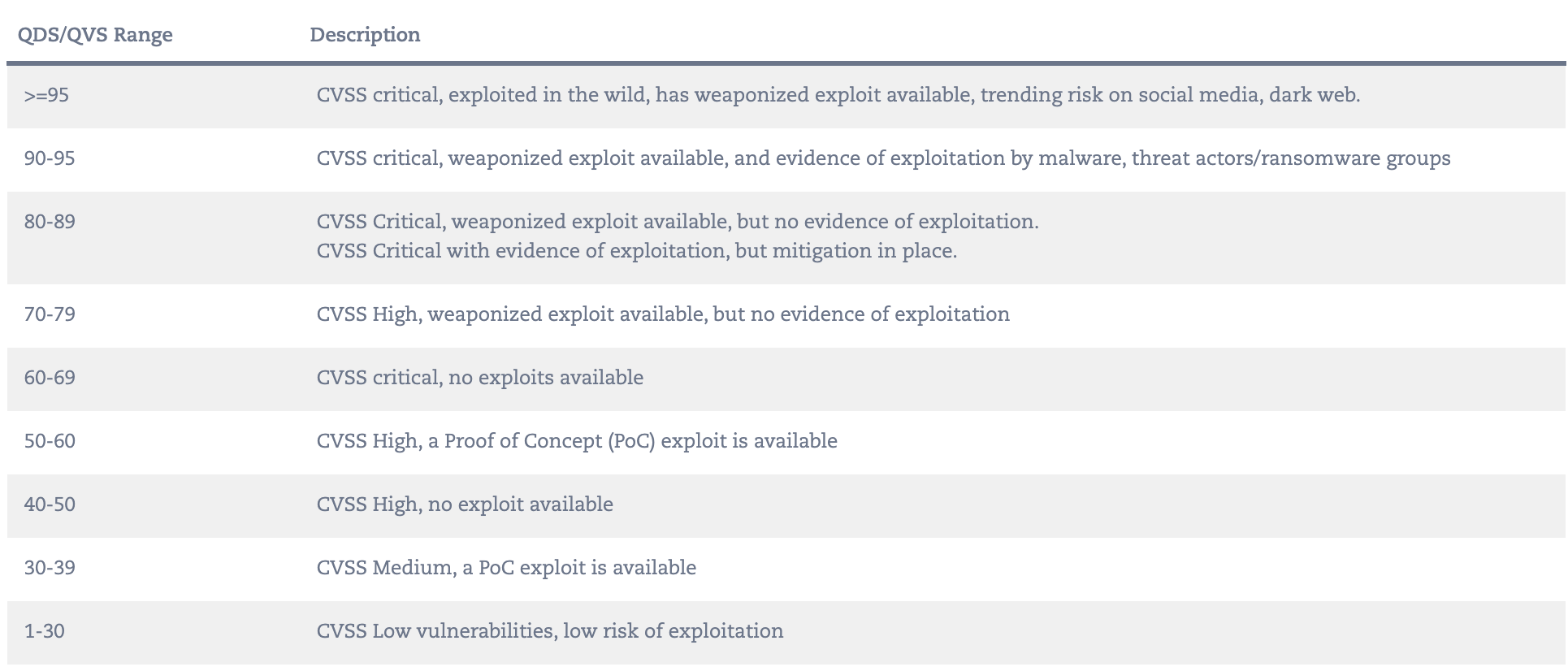

| Qualys TruRisk Report | The 2023 Qualys TruRisk research report lists 190 CVEs from 2022 with QVS scores | .2 |

| Microsoft Security Response Center (MSRC) | CVEs Exploited and with Exploitability Assessment | .2 |

Analysis¶

See analysis directory for these files.

- enrich_cves.ipynb

- Take the data sources from data_in/

- Enrich the CVE data from NVD with the other data sources

- Add an "Exploit" column to indicate the source of the exploitability (used later to set colors of CVE data in plots)

- store the output in data_out/nvd_cves_v3_enriched.csv.gz

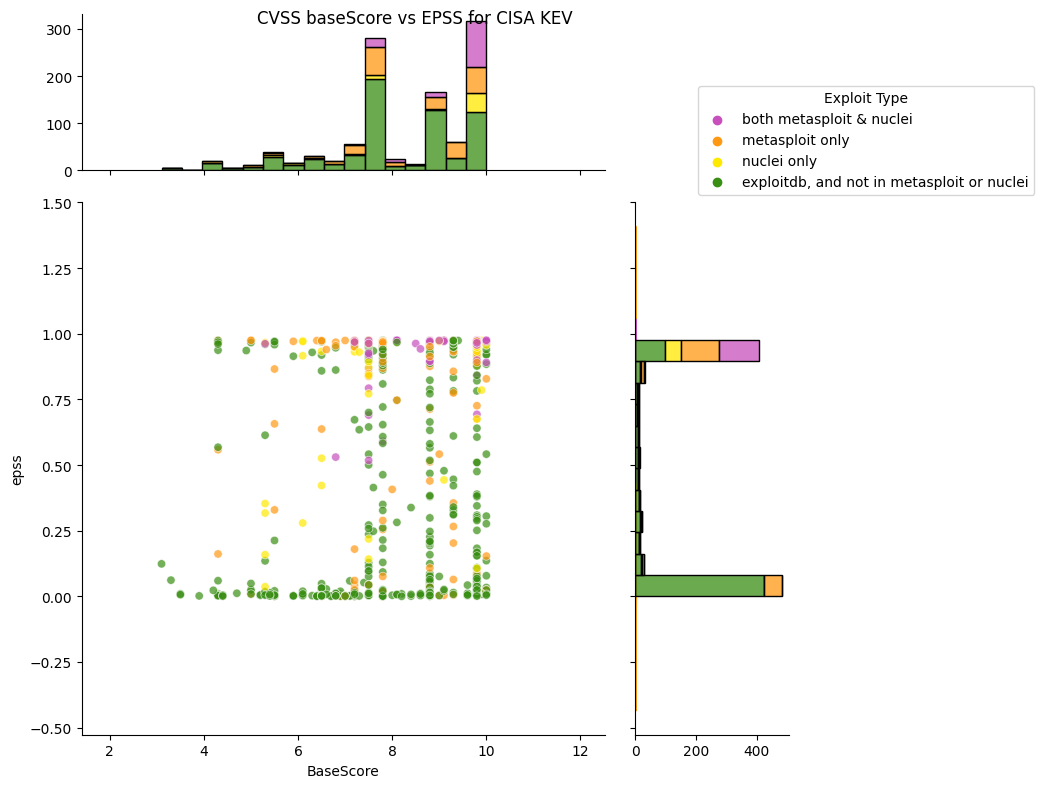

- kev_epss_cvss.ipynb

- Read the enriched CVE data from data_out/CVSSData_enriched.csv.gz

- Read the data from CISA KEV alert reports in ./data_in/cisa_kev/

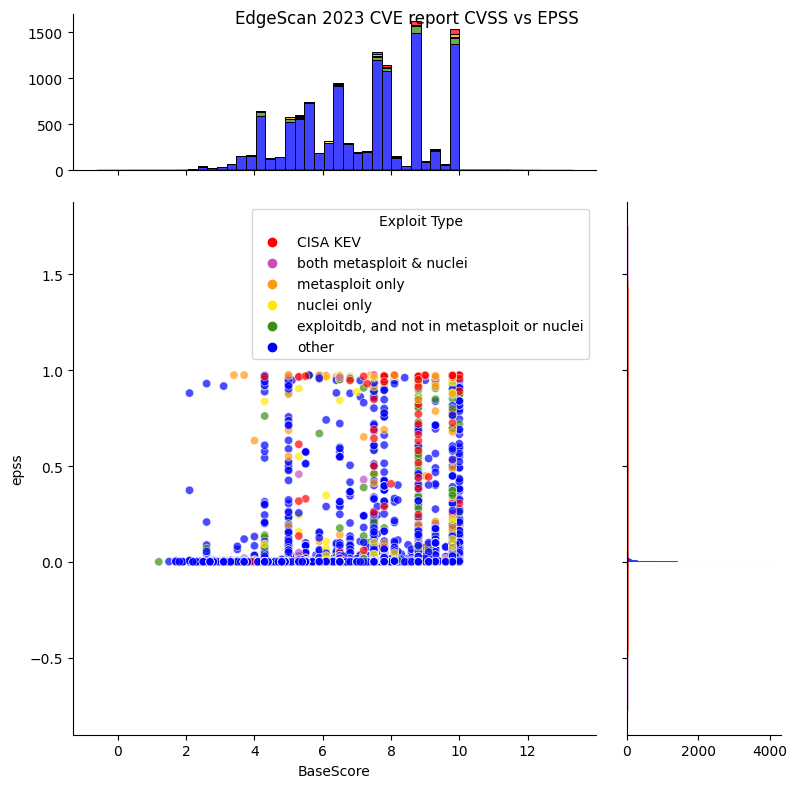

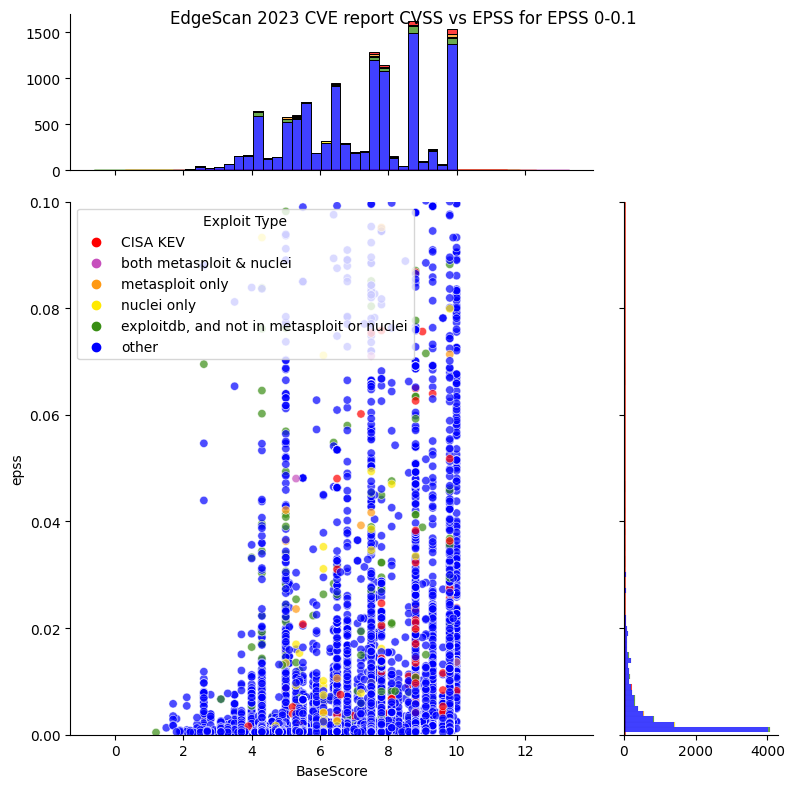

- Plot CISA KEV datasets showing EPSS, CVSS by source of the exploitability

- Write data_out/cisa_kev/csa/csa.csv.gz which is the CISA KEV CyberSecurity Alerts (CSA) subset with EPSS and other data

- qualys.ipynb

- Read the enriched CVE data from data_out/CVSSData_enriched.csv.gz

- Read the data from ./data_in/qualys

- Plot Qualys dataset showing EPSS, CVSS by source of the exploitability

- Write data_out/qualys/qualys.csv.gz which is the Qualys data with EPSS and other data

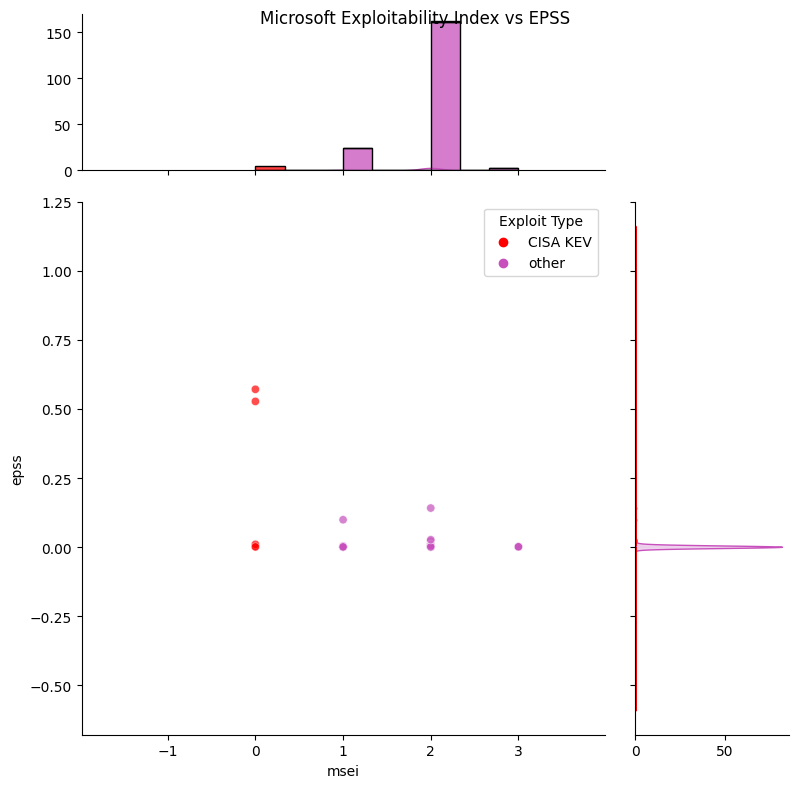

- msrc.ipynb

- Read the enriched CVE data from data_out/CVSSData_enriched.csv.gz

- Read the data from ./data_in/msrc

- Plot Microsoft Exploitability Index dataset showing EPSS, CVSS by source of the exploitability

- Write data_out/msrc/msrc.csv.gz which is the MSEI data with EPSS and other data
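
The enrichment step described for enrich_cves.ipynb can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the notebook's code: the `EnrichedCve` class, the `enrich` function, the evidence labels and their ordering, the default EPSS of 0.0 for unscored CVEs, and all CVE identifiers and scores are hypothetical.

```python
from dataclasses import dataclass

# Evidence sources, strongest first. The ordering and the labels are
# assumptions made for this sketch, not taken from the notebook.
EVIDENCE_ORDER = ["CISA KEV", "Metasploit", "Nuclei", "ExploitDB"]


@dataclass
class EnrichedCve:
    cve: str
    cvss_base: float
    epss: float
    exploit: str  # strongest evidence source found, or "None"


def enrich(nvd_scores, epss_scores, evidence_sets):
    """Join NVD CVEs with EPSS scores and exploit evidence.

    nvd_scores:    {cve_id: CVSS base score}
    epss_scores:   {cve_id: EPSS probability between 0 and 1}
    evidence_sets: {source name: set of cve_ids seen in that source}
    """
    enriched = []
    for cve, cvss_base in nvd_scores.items():
        # Pick the first (strongest) source that lists this CVE.
        exploit = next(
            (src for src in EVIDENCE_ORDER if cve in evidence_sets.get(src, set())),
            "None",
        )
        # Assumption: a CVE without an EPSS score is treated as 0.0.
        enriched.append(EnrichedCve(cve, cvss_base, epss_scores.get(cve, 0.0), exploit))
    return enriched


# Made-up identifiers and scores, for illustration only.
nvd = {"CVE-2099-0001": 9.8, "CVE-2099-0002": 7.5, "CVE-2099-0003": 5.3}
epss = {"CVE-2099-0001": 0.97, "CVE-2099-0002": 0.02}
evidence = {
    "CISA KEV": {"CVE-2099-0001"},
    "Metasploit": {"CVE-2099-0001"},
    "ExploitDB": {"CVE-2099-0002"},
}

rows = enrich(nvd, epss, evidence)
for row in rows:
    print(row)
```

Keeping a single "Exploit" label per CVE (the strongest evidence found) is one way to drive plot colors later; a real pipeline might instead keep one boolean column per source.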

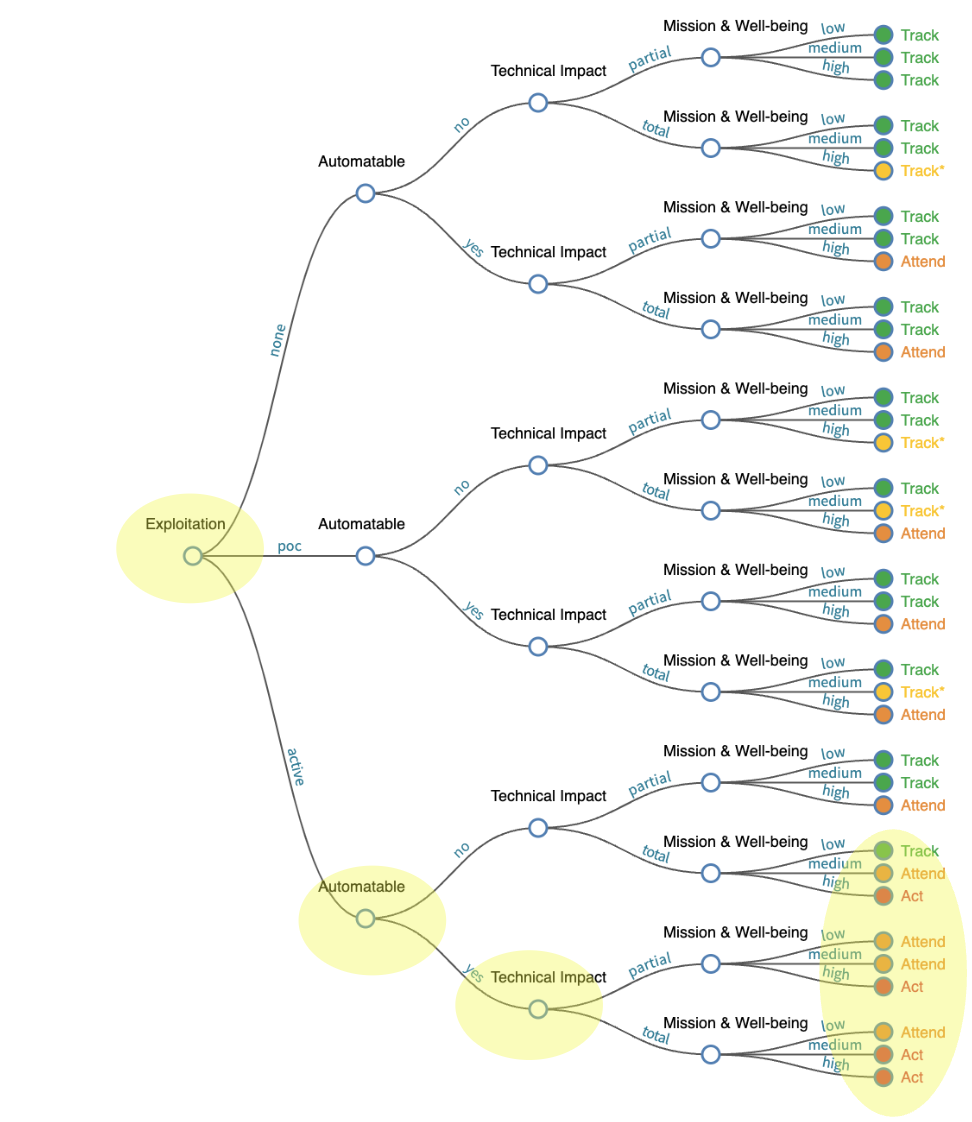

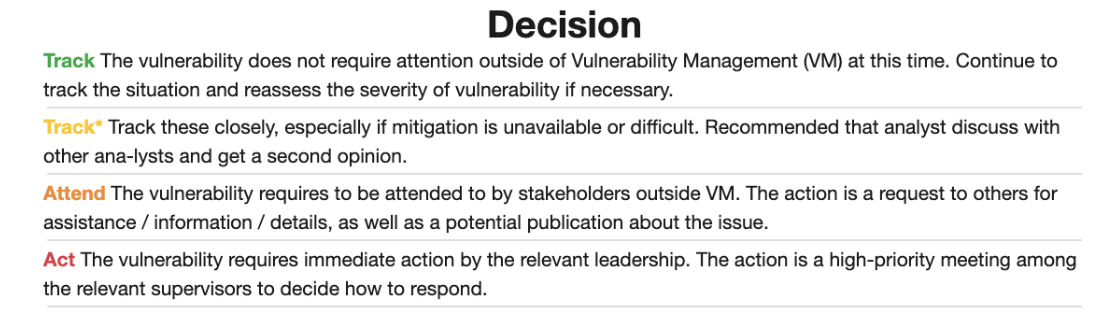

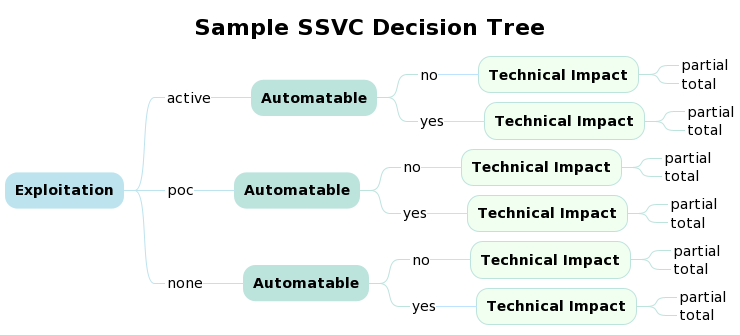

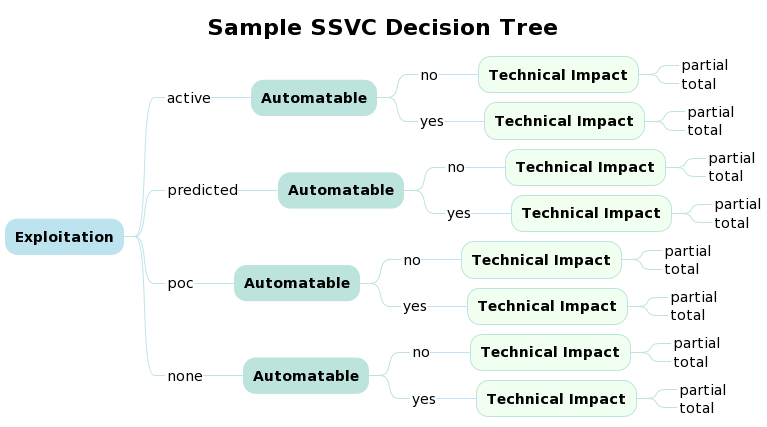

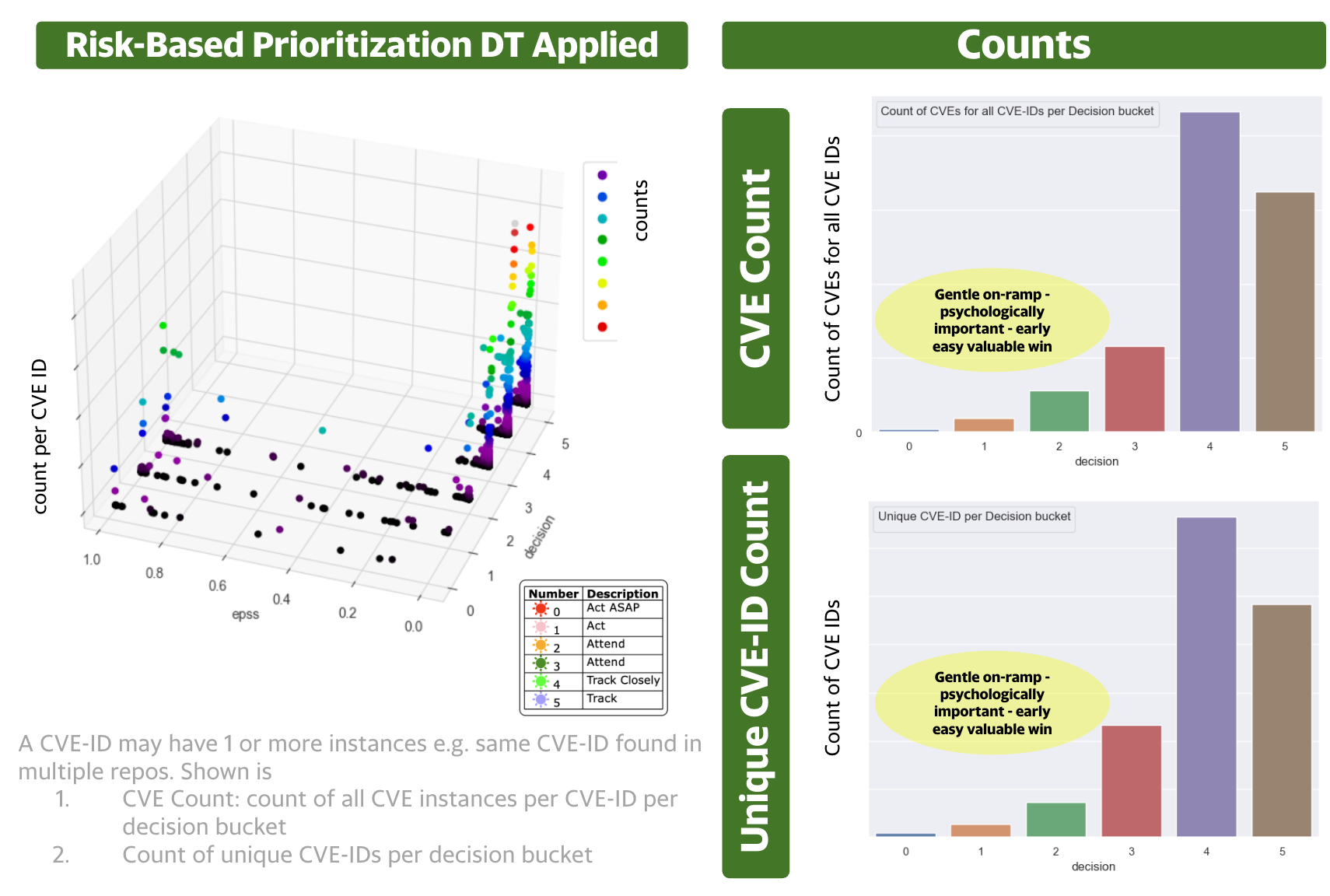

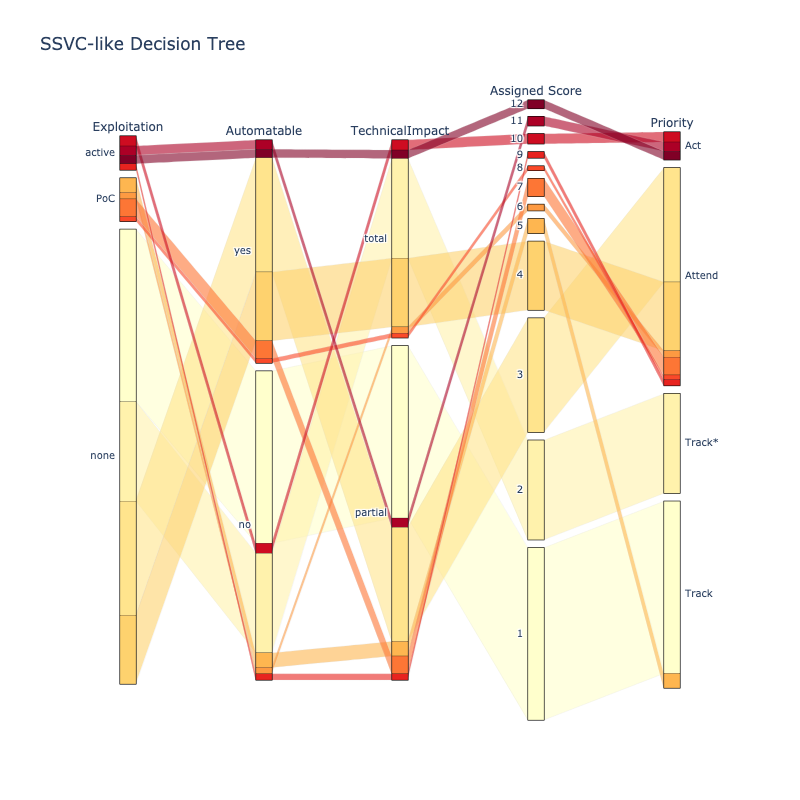

CISA SSVC Decision Trees¶

See cisa_ssvc_dt directory for these files.

CISA SSVC Decision Tree From Scratch Example Implementation¶

- Read the enriched CVE data from data_out/CVSSData_enriched.csv.gz

- Read the Decision Tree definition cisa_ssvc_dt/DT_rbp.csv

- Define the Decision Logic for the Decision Nodes

- Calculate the Decision Node Values for all CVEs

- Do some Exploratory Data Analysis with Venn Diagrams to understand our data

- Calculate the Output Decision from the Decision Node Values

- Plot Flow of All CVEs across the Decision Tree aka Sankey

- Read the Sankey Diagram template definition cisa_ssvc_dt/DT_sankey.csv

- Triage some CVEs

- Read a list of CVEs to triage cisa_ssvc_dt/triage/cves2triage.csv

- Get Decisions

- Plot
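
The from-scratch flow above (define decision logic, calculate decision node values per CVE, calculate the output decision, count the flows that a Sankey plot would show) can be sketched as follows. The decision logic here is a deliberately simplified, hypothetical tree and is NOT the CISA SSVC tree or the contents of DT_rbp.csv; the field names, the EPSS threshold of 0.1, and the CVE identifiers are likewise assumptions made for illustration.

```python
from collections import Counter


def decide(exploitation, automatable, technical_impact):
    """Hypothetical decision logic; this is NOT the CISA SSVC tree."""
    if exploitation == "active":
        if automatable or technical_impact == "total":
            return "Act"
        return "Attend"
    if exploitation == "poc":
        if automatable and technical_impact == "total":
            return "Attend"
        return "Track*"
    return "Track"


def node_values(cve, epss_threshold=0.1):
    """Map enriched CVE fields (assumed names) to decision node values."""
    if cve["in_kev"]:
        exploitation = "active"
    elif cve["has_exploit_code"] or cve["epss"] >= epss_threshold:
        exploitation = "poc"
    else:
        exploitation = "none"
    return exploitation, cve["automatable"], cve["technical_impact"]


# Made-up CVEs to triage, for illustration only.
cves = [
    {"cve": "CVE-2099-0001", "in_kev": True, "has_exploit_code": True,
     "epss": 0.97, "automatable": True, "technical_impact": "total"},
    {"cve": "CVE-2099-0002", "in_kev": False, "has_exploit_code": True,
     "epss": 0.02, "automatable": False, "technical_impact": "total"},
    {"cve": "CVE-2099-0003", "in_kev": False, "has_exploit_code": False,
     "epss": 0.01, "automatable": False, "technical_impact": "partial"},
]

# Output decision per CVE, then counts per decision (the Sankey end flows).
decisions = {c["cve"]: decide(*node_values(c)) for c in cves}
flow = Counter(decisions.values())
print(decisions)
print(flow)
```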

CISA SSVC Decision Tree Analysis for Feature Importance¶

- Read the Decision Tree definition cisa_ssvc_dt/DT_rbp.csv

- Perform Feature Importance using 2 methods

- Permutation Importance

- Drop-column Importance

See https://github.com/CERTCC/SSVC/issues/309 for the suggestion to add drop column importance to CISA SSVC.
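
As a rough illustration of permutation importance applied to a decision tree, the sketch below shuffles one decision node's values across all rows and measures how often the output decision changes. It is a minimal sketch under stated assumptions: the tree is a hypothetical toy (not the CISA SSVC tree), `unused_flag` is an invented node that the logic ignores, and drop-column importance is not shown.

```python
import random
from itertools import product

# Decision nodes and their possible values (hypothetical toy tree).
VALUES = {
    "exploitation": ["none", "poc", "active"],
    "automatable": [False, True],
    "technical_impact": ["partial", "total"],
    "unused_flag": [False, True],  # deliberately ignored by decide()
}


def decide(row):
    """Hypothetical decision logic; this is NOT the CISA SSVC tree."""
    if row["exploitation"] == "active":
        if row["automatable"] or row["technical_impact"] == "total":
            return "Act"
        return "Attend"
    if row["exploitation"] == "poc":
        if row["automatable"] and row["technical_impact"] == "total":
            return "Attend"
        return "Track*"
    return "Track"


# Every combination of node values, with the decision as the reference label.
rows = [dict(zip(VALUES, combo)) for combo in product(*VALUES.values())]
labels = [decide(r) for r in rows]


def accuracy(sample):
    """Fraction of rows whose decision still matches the reference label."""
    return sum(decide(r) == y for r, y in zip(sample, labels)) / len(labels)


def permutation_importance(feature, repeats=20, seed=0):
    """Mean drop in accuracy after shuffling one feature's column."""
    rng = random.Random(seed)
    baseline = accuracy(rows)
    total_drop = 0.0
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
        total_drop += baseline - accuracy(shuffled)
    return total_drop / repeats


importance = {f: permutation_importance(f) for f in VALUES}
for name, value in sorted(importance.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:.3f}")
```

A node that never influences the decision scores exactly zero, which is a quick sanity check when reviewing a tree definition.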

Getting Data from Data Sources¶

A snapshot of the data used for this guide is available

A snapshot of this data is already available with the source in data_in

- A date.txt file is included in each folder with the data that contains the date of download.

But you can download current data as described here.

- get_data.sh gets the data that can be downloaded automatically and used as-is.

- Other data is manually downloaded - see instructions below.

- MSRC

- ExploitDB

- GPZ

- Larger files are gzip'd
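
Once a gzip'd file has been downloaded, it needs to be parsed before use. The sketch below reads an EPSS scores file; it assumes the layout of the public EPSS daily CSV as commonly distributed (a leading `#` metadata line, then a `cve,epss,percentile` header), which should be verified against the file you actually download. The function names and the sample rows are hypothetical.

```python
import csv
import gzip


def read_gzip_text(path):
    """Return the decompressed text of a .csv.gz file."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        return handle.read()


def parse_epss_csv(text):
    """Parse EPSS CSV text into (metadata, {cve_id: epss probability}).

    Assumed layout: an optional first line starting with '#' that holds
    comma-separated key:value metadata, then a 'cve,epss,percentile' header.
    """
    lines = text.splitlines()
    meta = {}
    if lines and lines[0].startswith("#"):
        for part in lines[0].lstrip("#").split(","):
            key, _, value = part.partition(":")
            meta[key] = value
        lines = lines[1:]
    scores = {row["cve"]: float(row["epss"]) for row in csv.DictReader(lines)}
    return meta, scores


# Made-up sample in the assumed layout, for illustration only.
sample = """#model_version:v0000.00.00,score_date:2099-01-01T00:00:00+0000
cve,epss,percentile
CVE-2099-0001,0.97000,0.99900
CVE-2099-0002,0.00042,0.05000
"""

meta, scores = parse_epss_csv(sample)
print(meta["score_date"], scores)
```

For a downloaded file, `parse_epss_csv(read_gzip_text(path))` would combine the two helpers, with `path` pointing at wherever the snapshot was saved.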

National Vulnerability Database (NVD)¶

Get NVD data automatically

- A notebook or script in nvd downloads the NVD data.

- The data is output to data_out/CVSSData.csv.gz

- Note: The download method used will be deprecated some time after Dec 2023 per https://nvd.nist.gov/vuln/data-feeds

Google Project Zero (GPZ)¶

See 0day "In the Wild" GoogleSheet

- Select "All" tab.

- File - Download as csv

Microsoft Security Response Center (MSRC)¶

- Go to https://msrc.microsoft.com/update-guide/vulnerability

- Edit columns - ensure these columns are selected "Exploitability Assessment" and "Exploited"

- Download

Qualys TruRisk Report¶

The CVE data was extracted from the Qualys TruRisk Report PDF. This data is static so a date.txt is not included.

ExploitDB¶

- Download https://gitlab.com/exploit-database/exploitdb/-/blob/main/files_exploits.csv (manually for now - credentials required for automation)

- Extract the CVEs using the script in the directory. Some entries don't have CVEs and have only Open Source Vulnerability Database (OSVDB) entries instead.
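
The CVE extraction step can be approximated with a regular expression. This is a minimal sketch and not the script shipped with the guide: it assumes the CSV has a `codes` column holding identifiers separated by semicolons, and the function name and sample rows are hypothetical.

```python
import csv
import io
import re

# CVE IDs are "CVE-", a 4-digit year, then 4 or more digits.
CVE_PATTERN = re.compile(r"CVE-\d{4}-\d{4,}", re.IGNORECASE)


def extract_cves(csv_text, codes_column="codes"):
    """Return the sorted, de-duplicated CVE IDs found in the codes column."""
    cves = set()
    for row in csv.DictReader(io.StringIO(csv_text)):
        for match in CVE_PATTERN.findall(row.get(codes_column) or ""):
            cves.add(match.upper())
    return sorted(cves)


# Made-up rows, for illustration only. Row 2 has no CVE, only an OSVDB entry.
sample = """id,description,codes
1,Example exploit A,CVE-2099-0001;OSVDB-00000
2,Example exploit B,OSVDB-11111
3,Example exploit C,cve-2099-0002;CVE-2099-0001
"""

cve_ids = extract_cves(sample)
print(cve_ids)
```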

Other Vulnerability Data Sources¶

See other vulnerability data sources that are not currently used here.

Ended: Introduction

Risk ↵

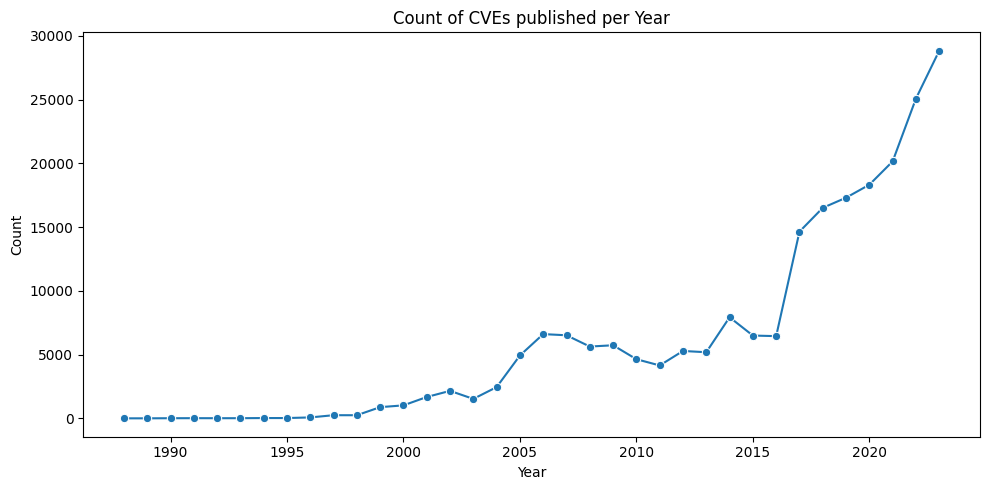

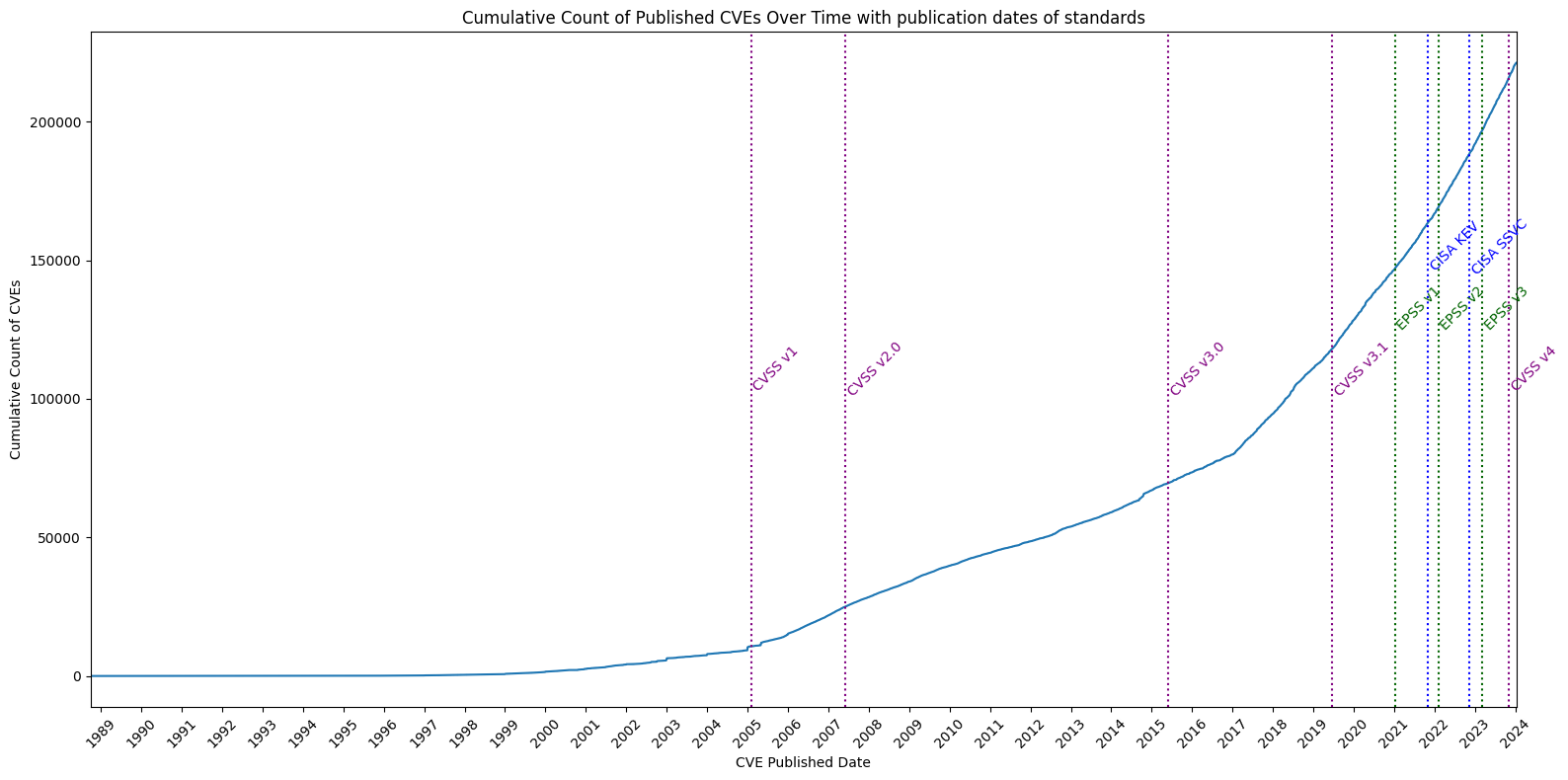

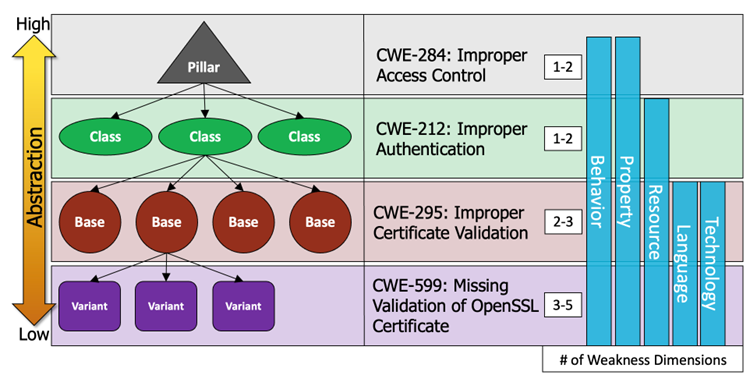

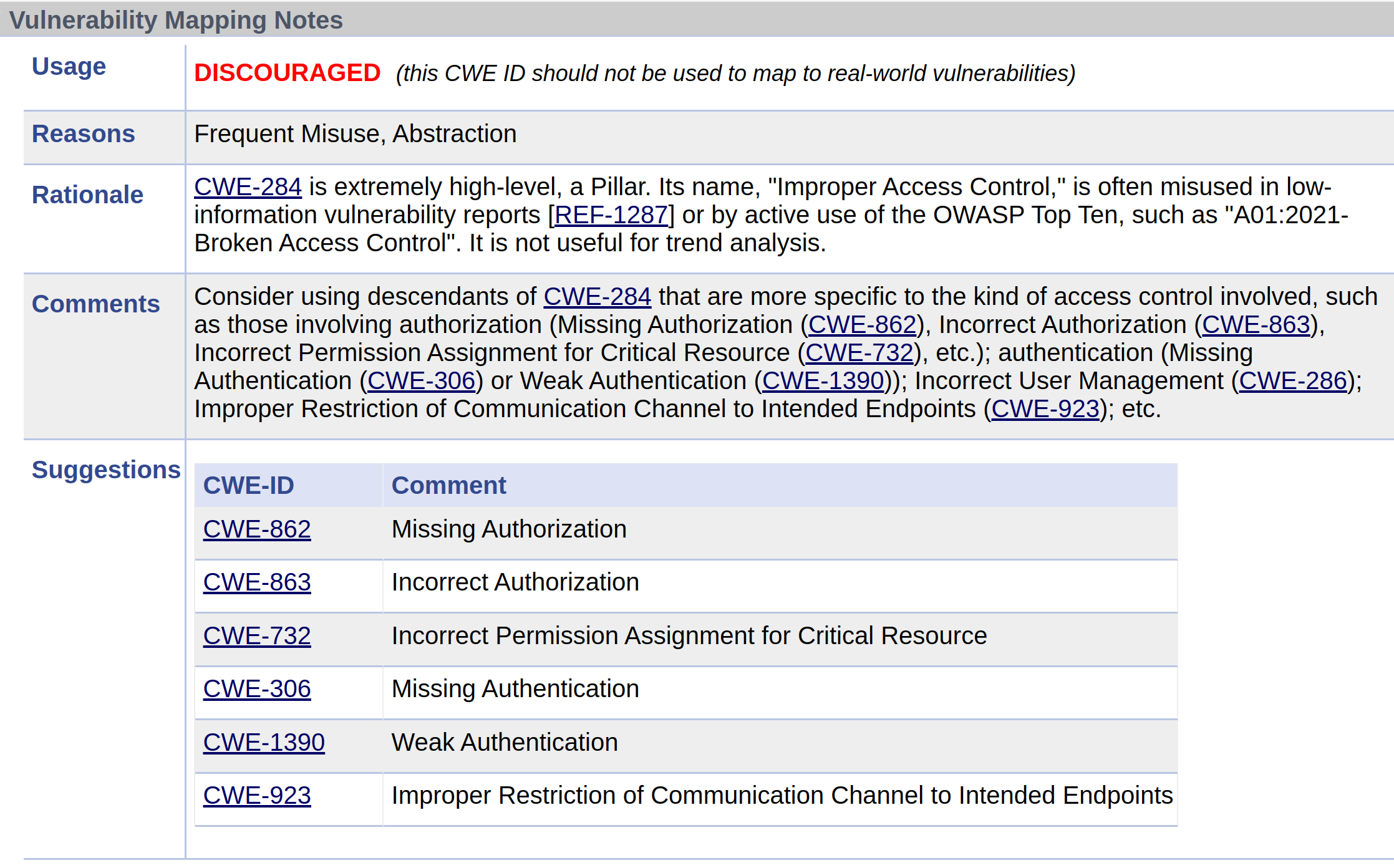

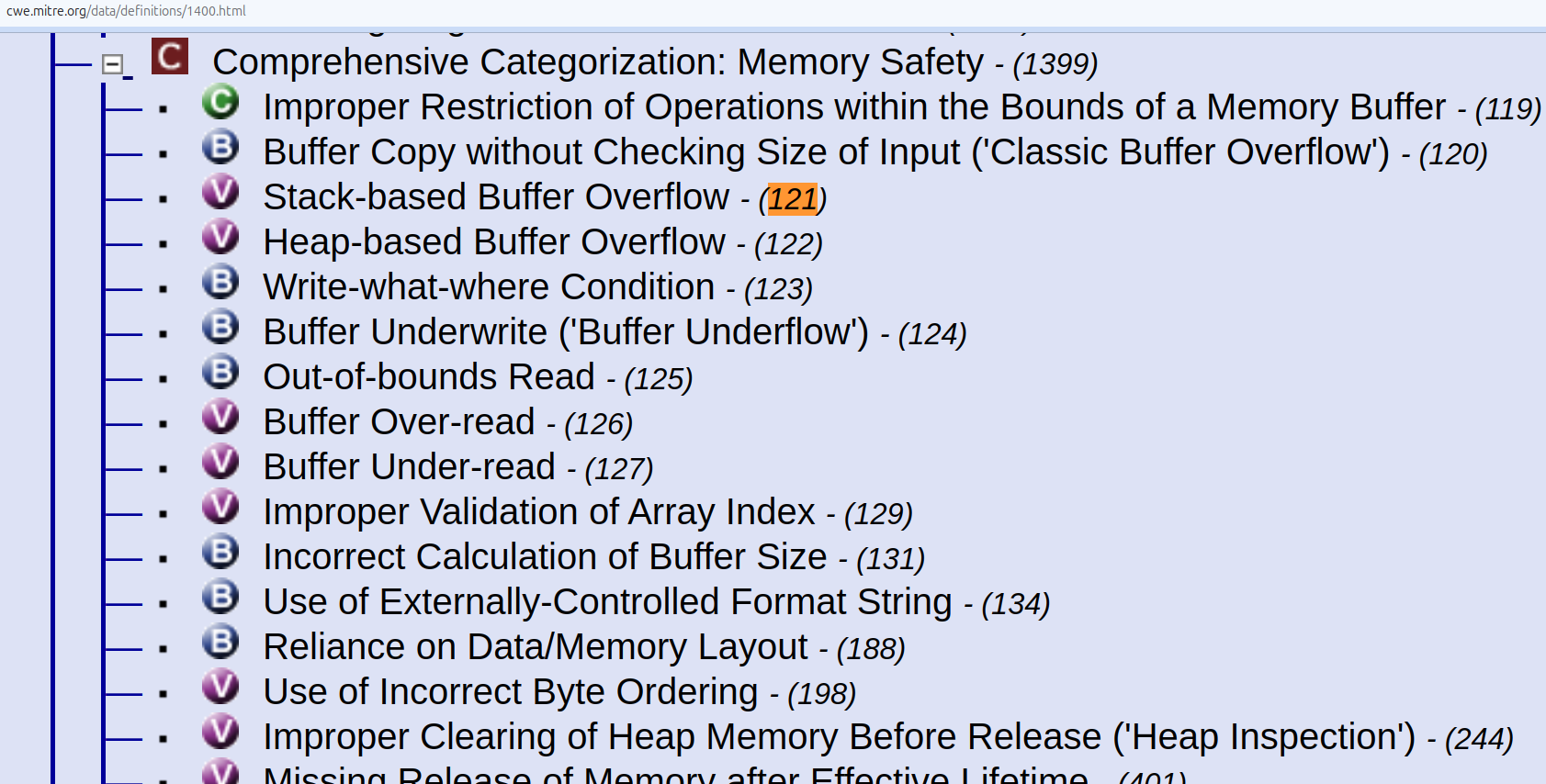

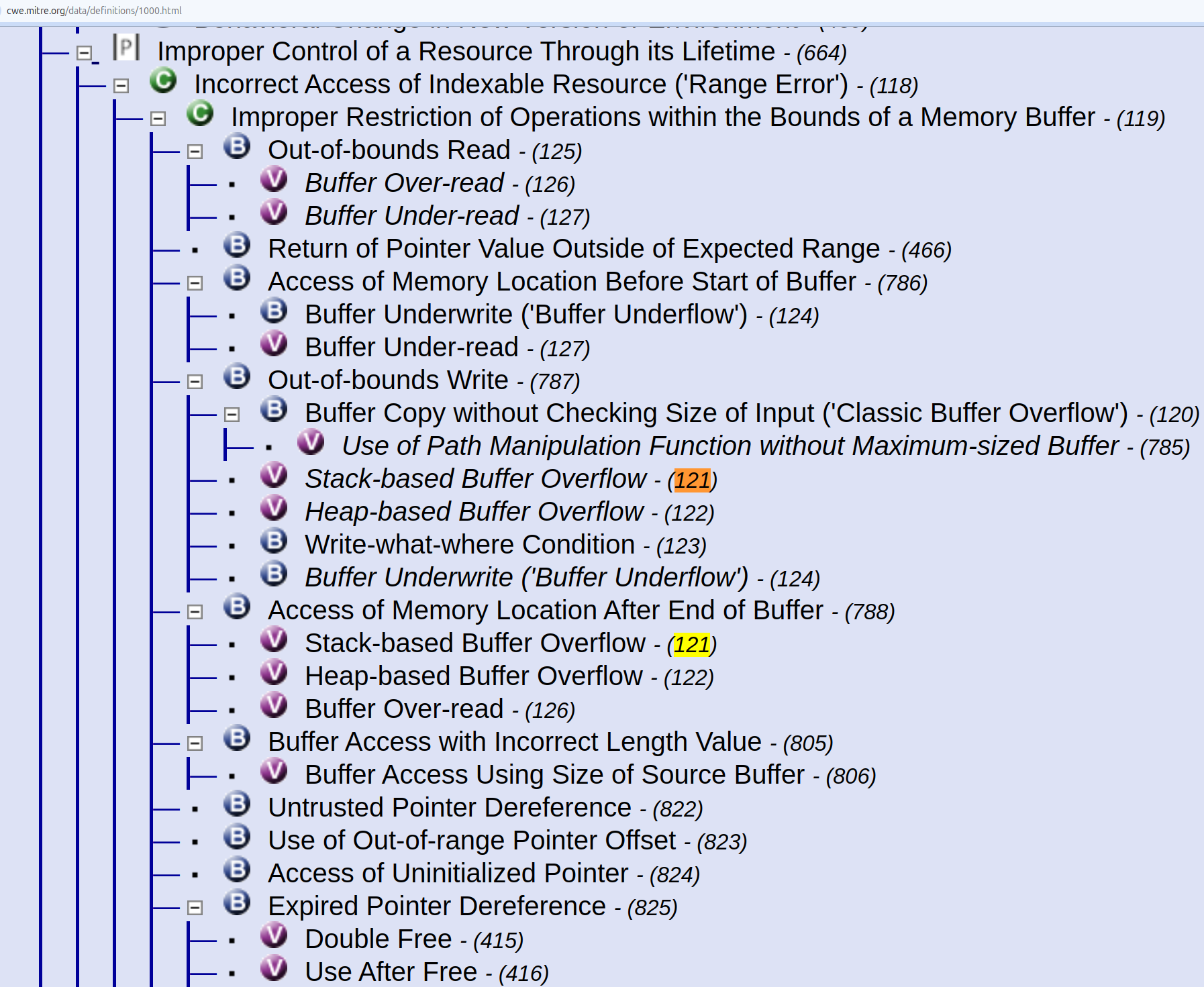

Vulnerability Landscape¶

Overview

A plot of CVE counts per year helps us understand why we need to be able to effectively prioritize CVEs (by Risk).

To do that we need to understand the building blocks we have to work with.

This section gives

- an overview of how the main relevant vulnerability standards fit together

- for recording and ranking vulnerabilities and their exploitation status or likelihood

- the characteristics of vulnerabilities

- a timeline

- with the count, and cumulative count, of CVEs over time (based on the Published date of each CVE)

- when different standards were released

Timeline¶

Vulnerability Standards¶

Key Risk Factor Standards¶

Quote

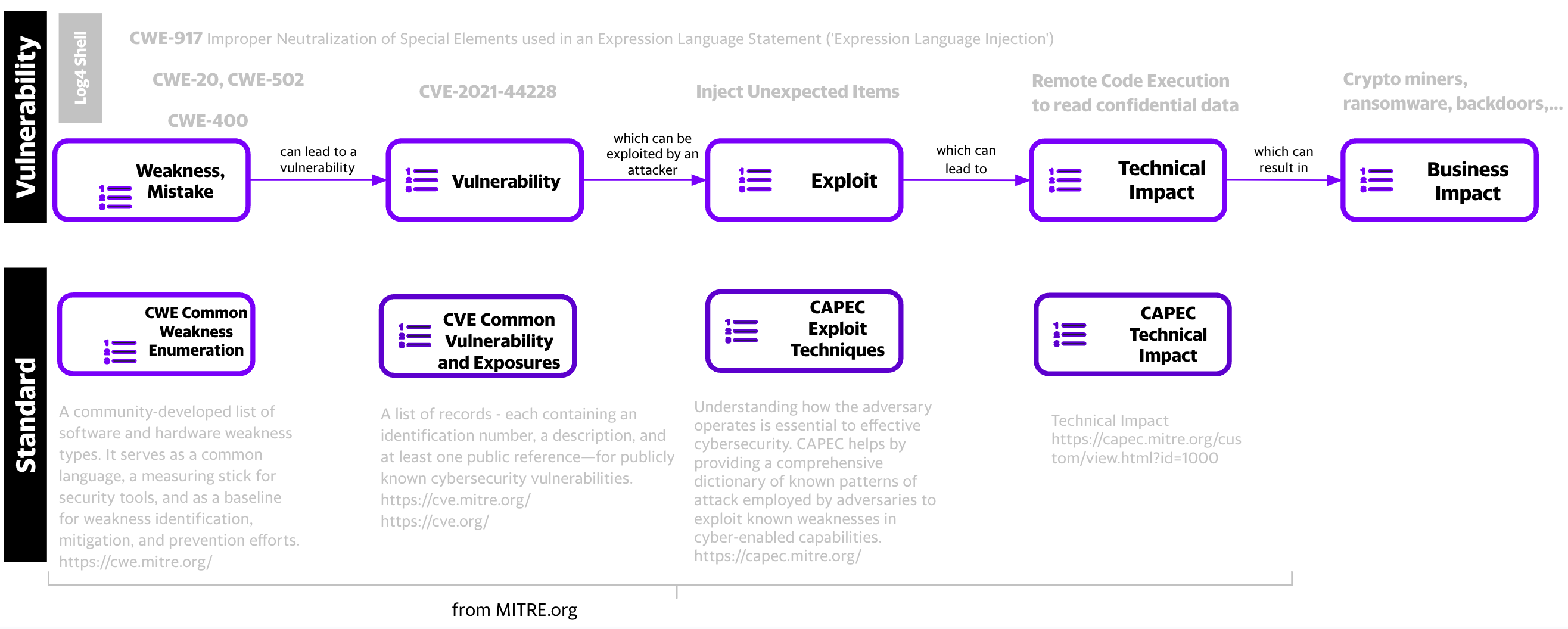

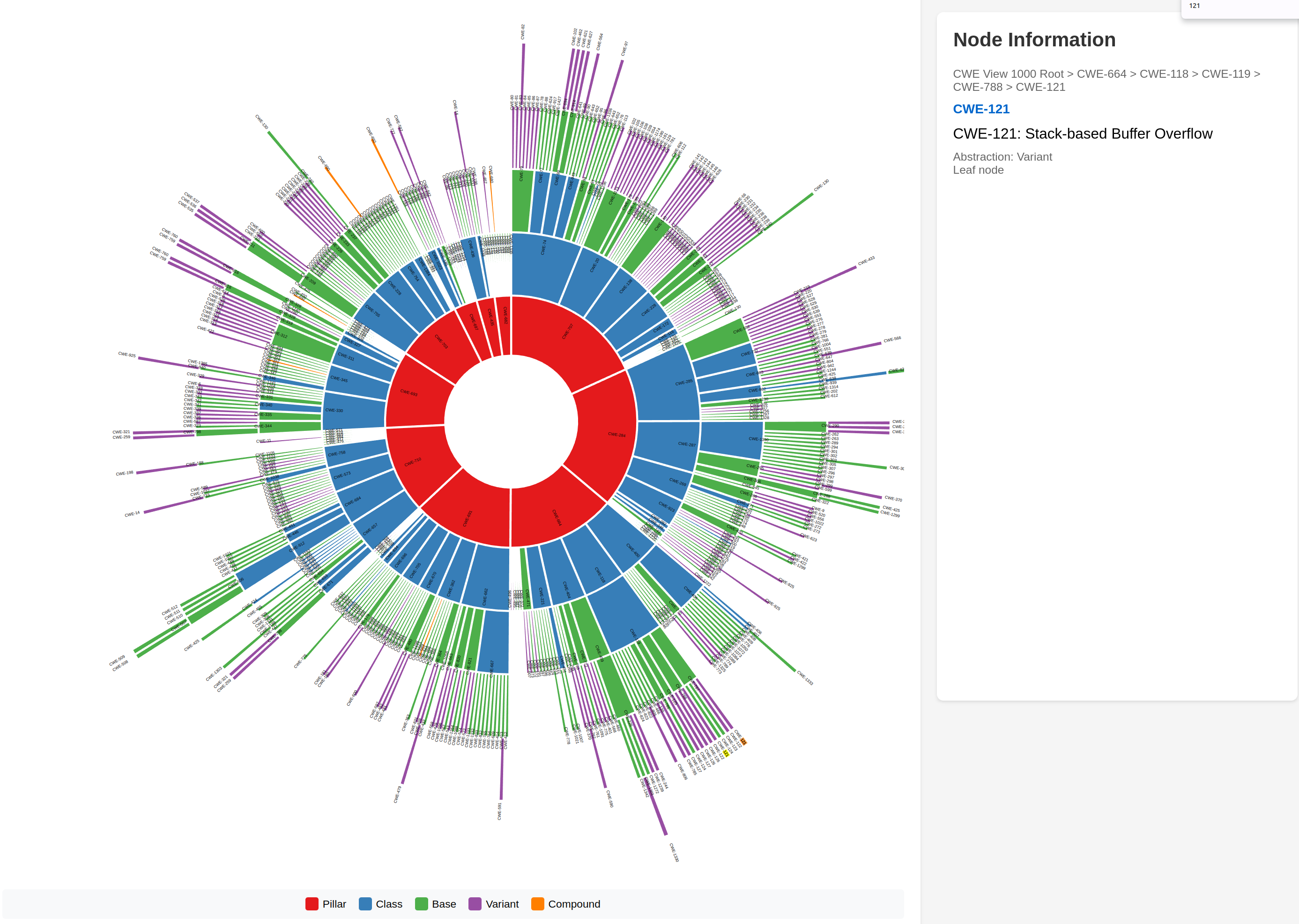

“CWE is the root mistake, which can lead to a vulnerability (tracked by CVE in some cases when known), which can be exploited by an attacker (using techniques covered by CAPEC)”, which can lead to a Technical Impact (or consequence), which can result in a Business Impact

- “CWE focuses on a type of mistake that, in conditions where exploits will succeed, could contribute to the introduction of vulnerabilities within that product.”

- “A vulnerability is an occurrence of one or more weaknesses within a product, in which the weakness can be used by a party to cause the product to modify or access unintended data, interrupt proper execution, or perform actions that were not specifically granted to the party who uses the weakness.” https://cwe.mitre.org/documents/cwe_usage/guidance.html

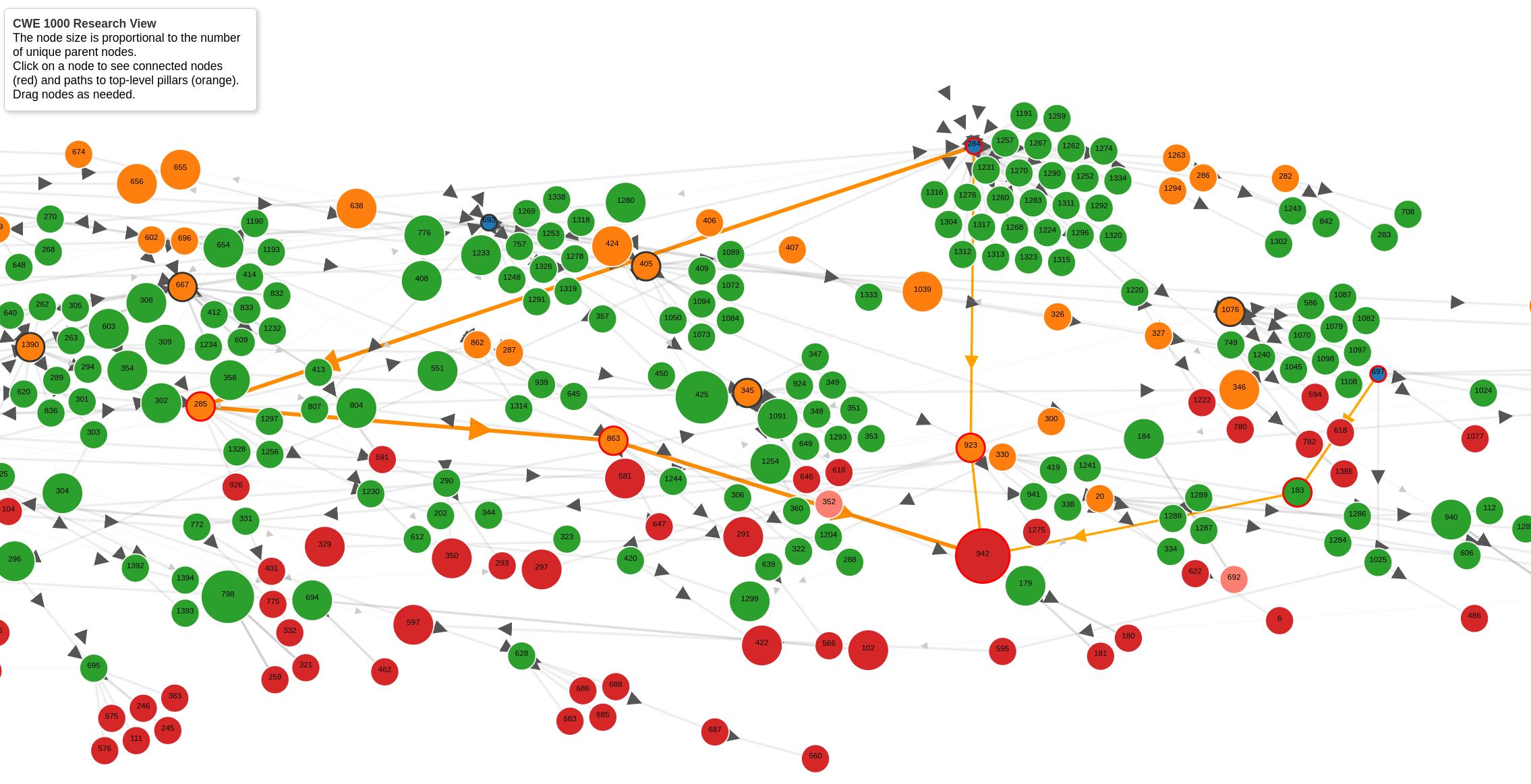

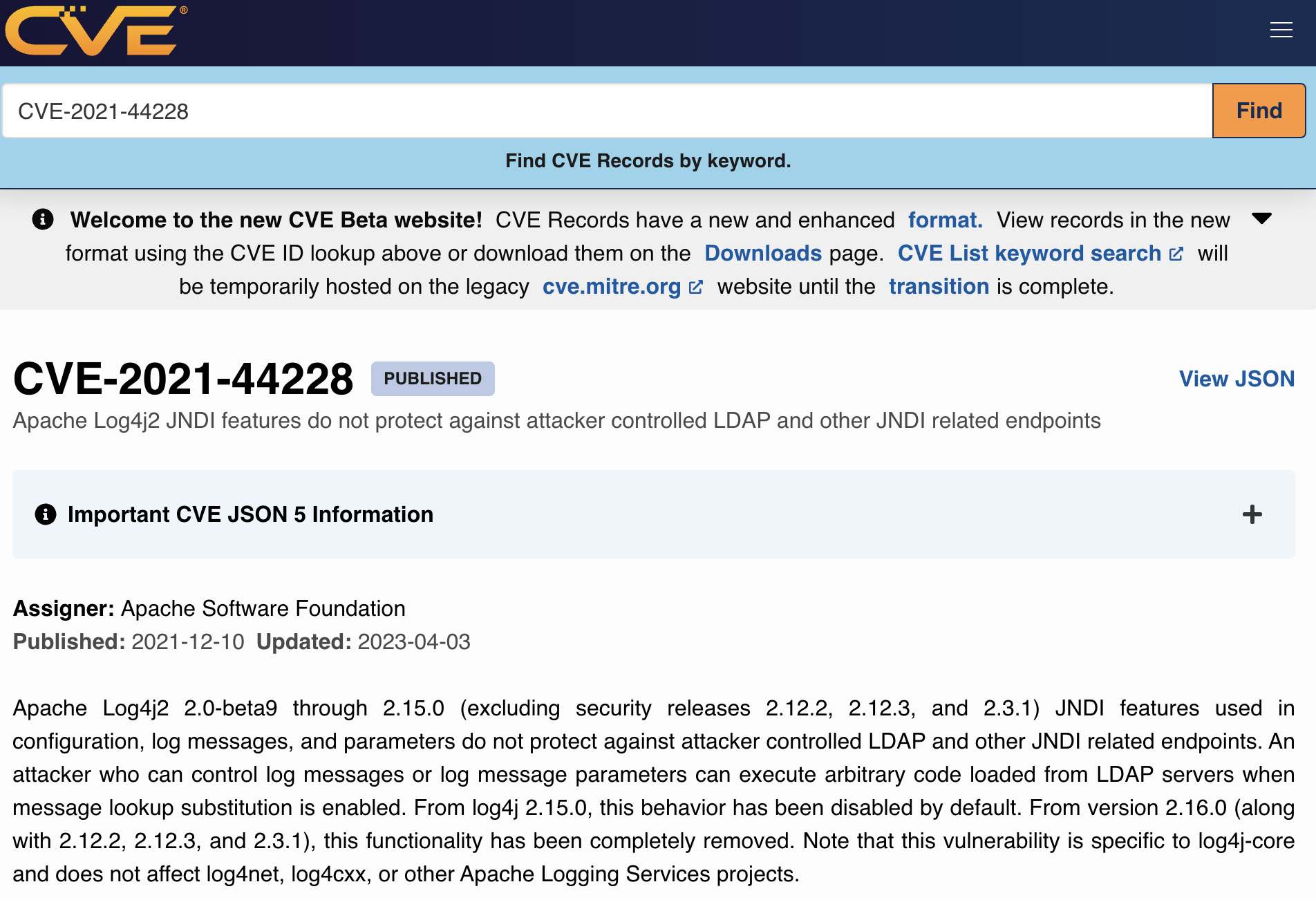

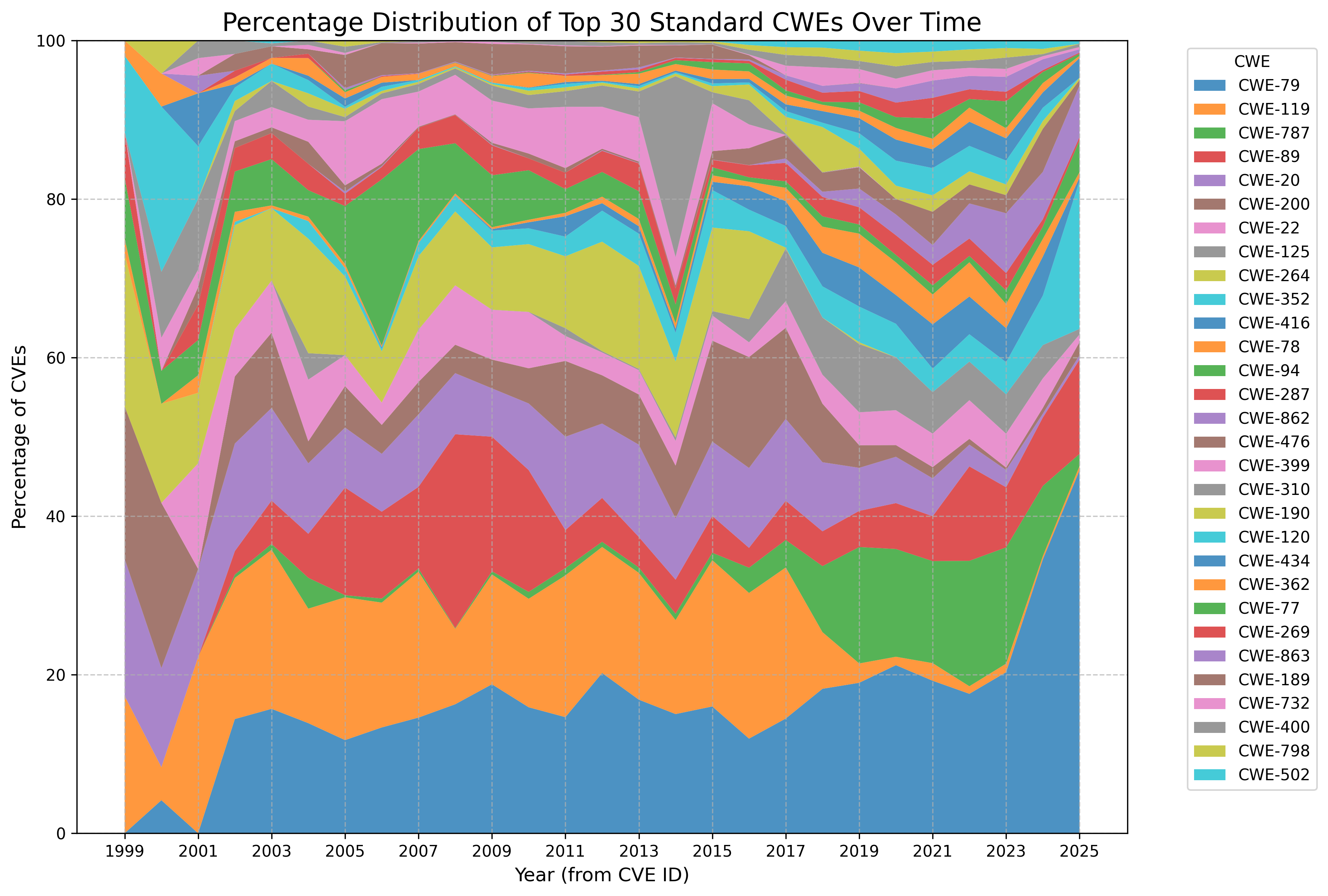

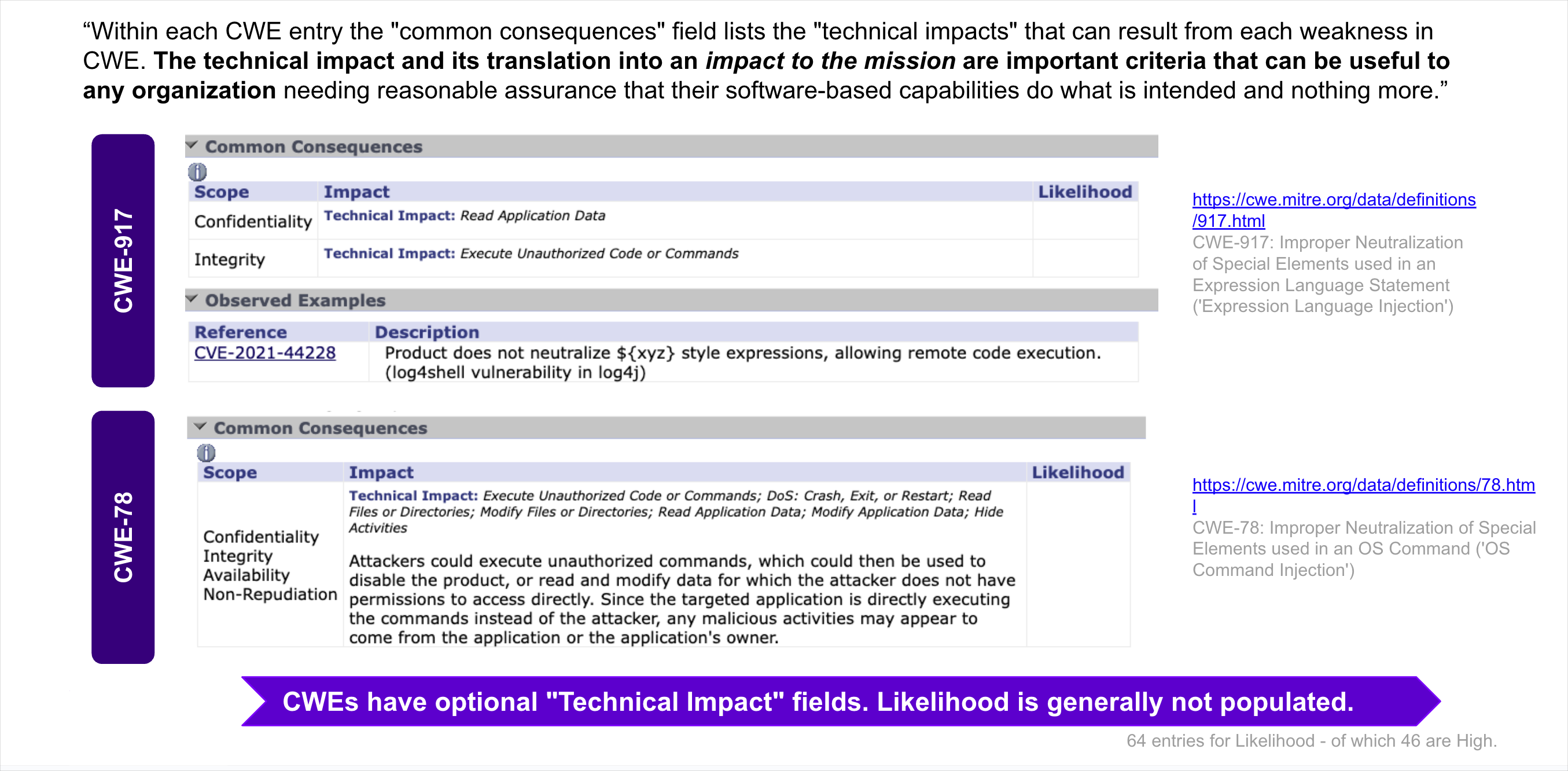

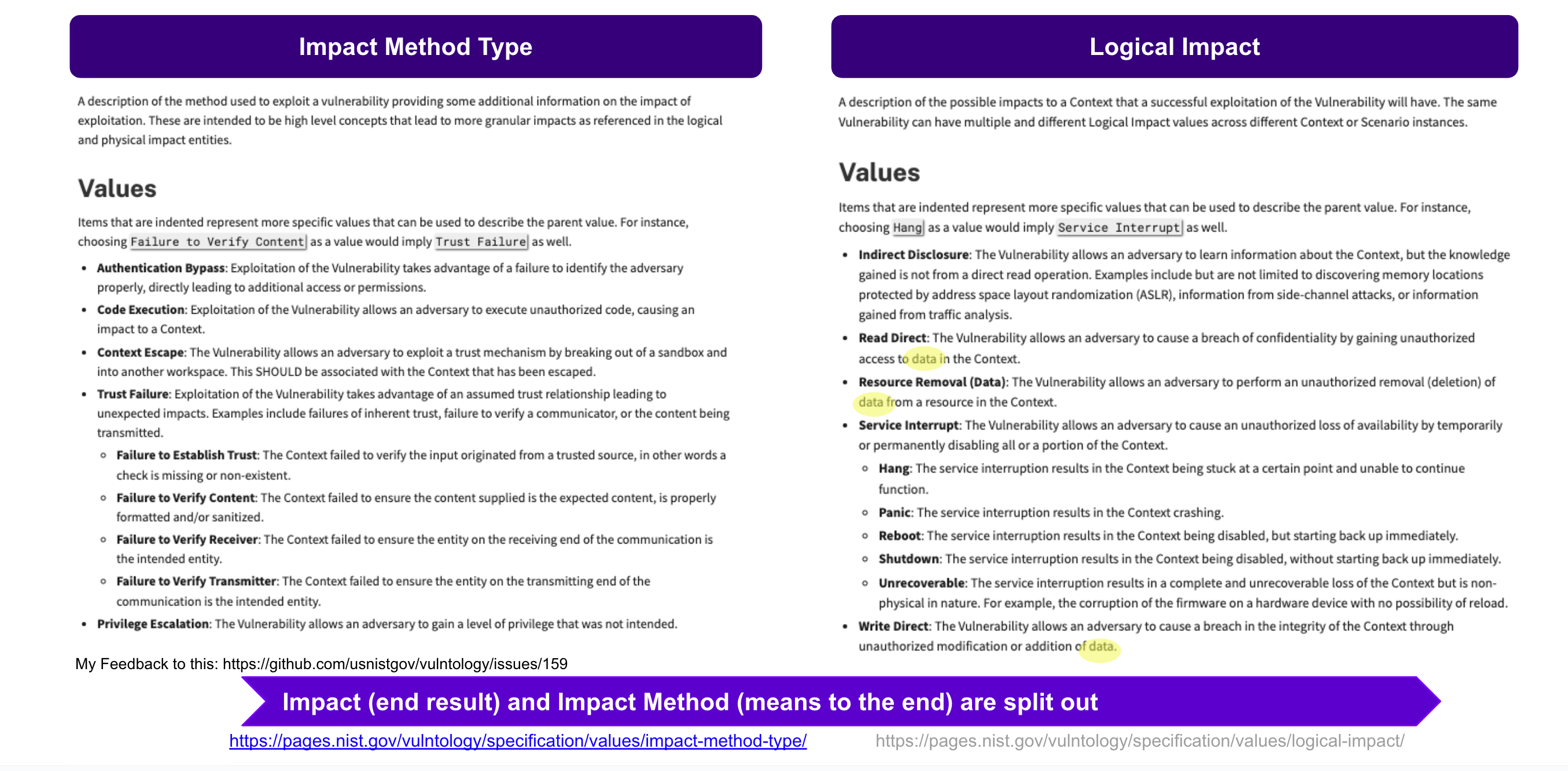

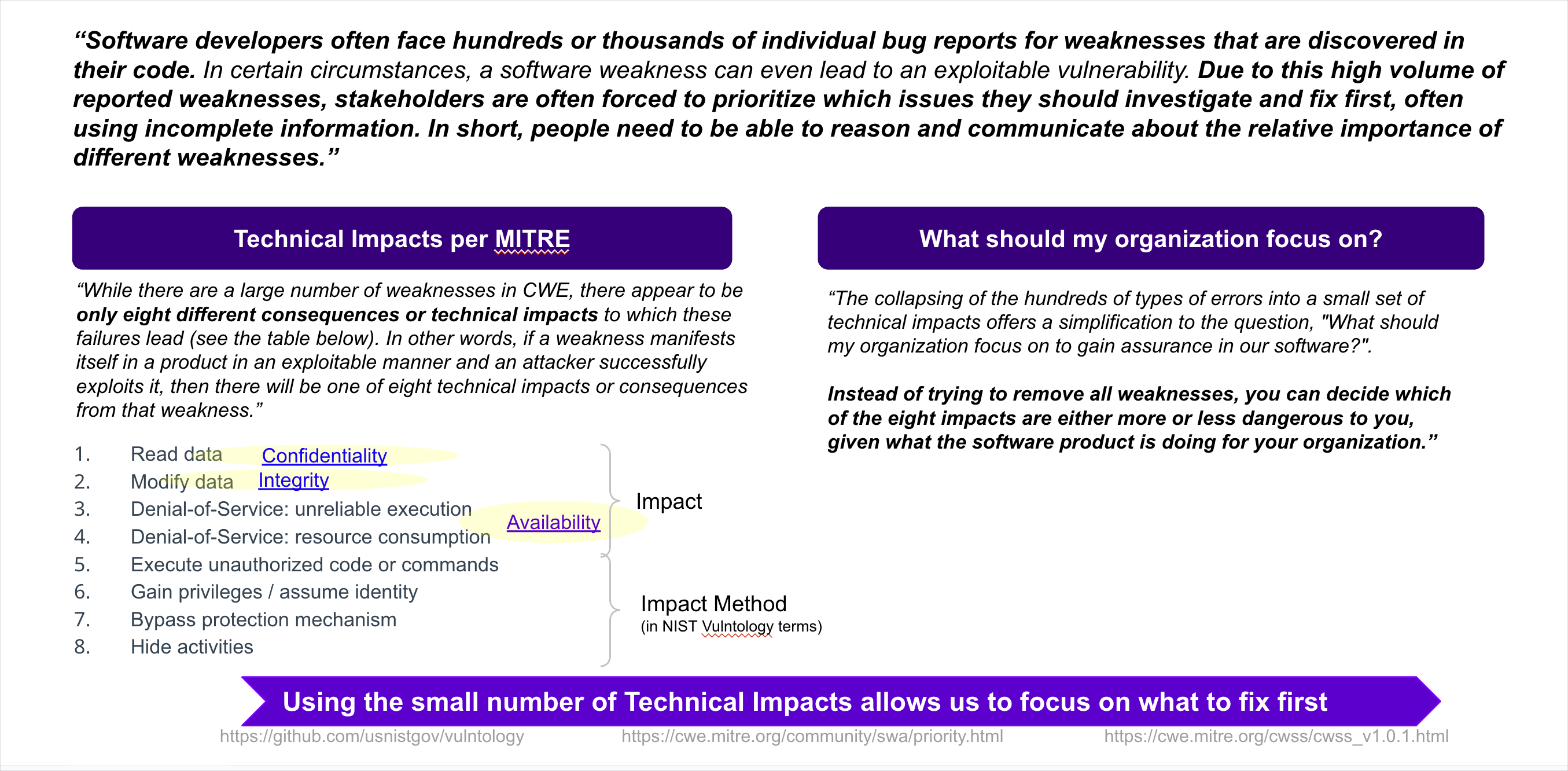

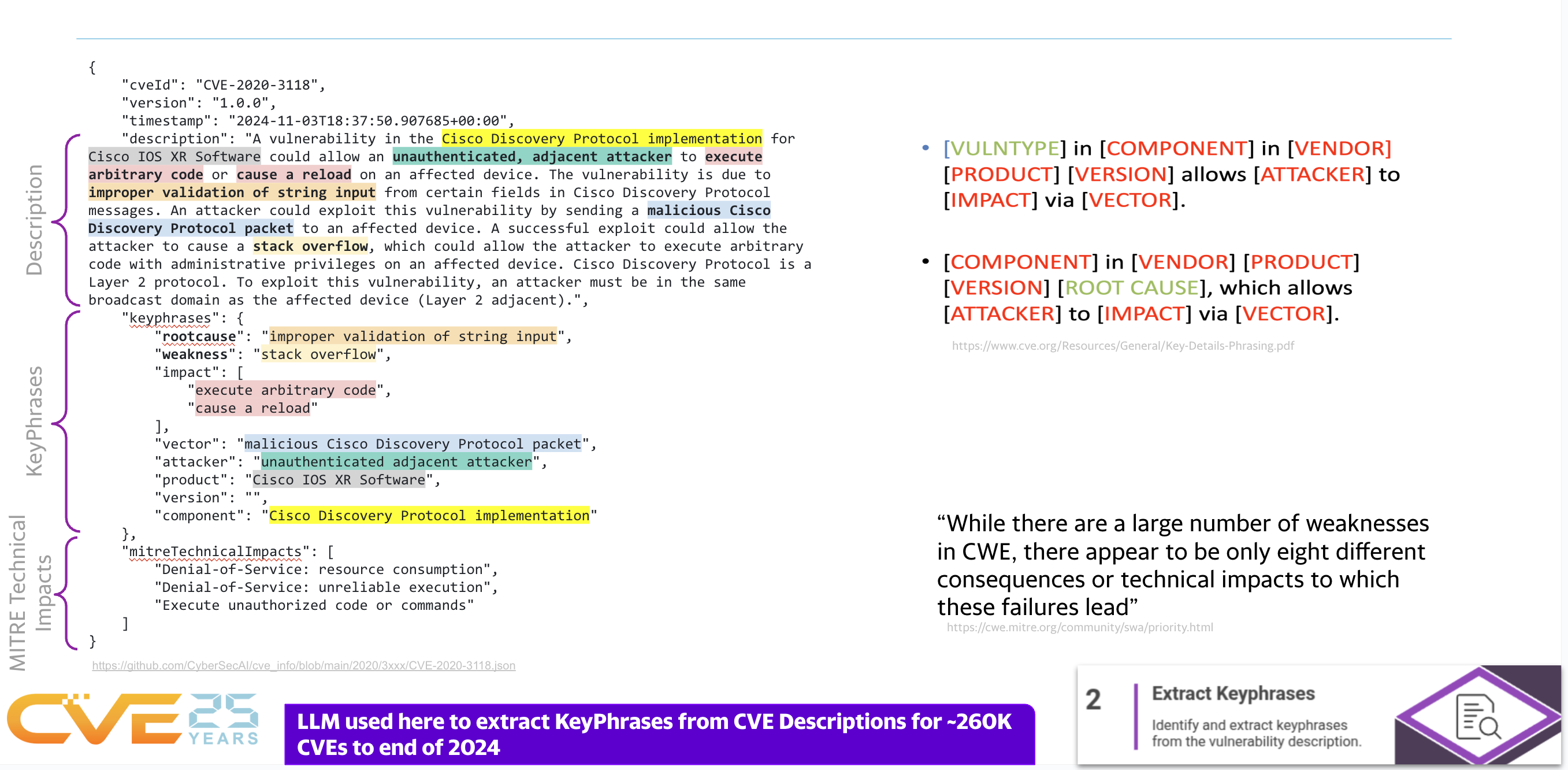

CVE - CWE - Technical Impact

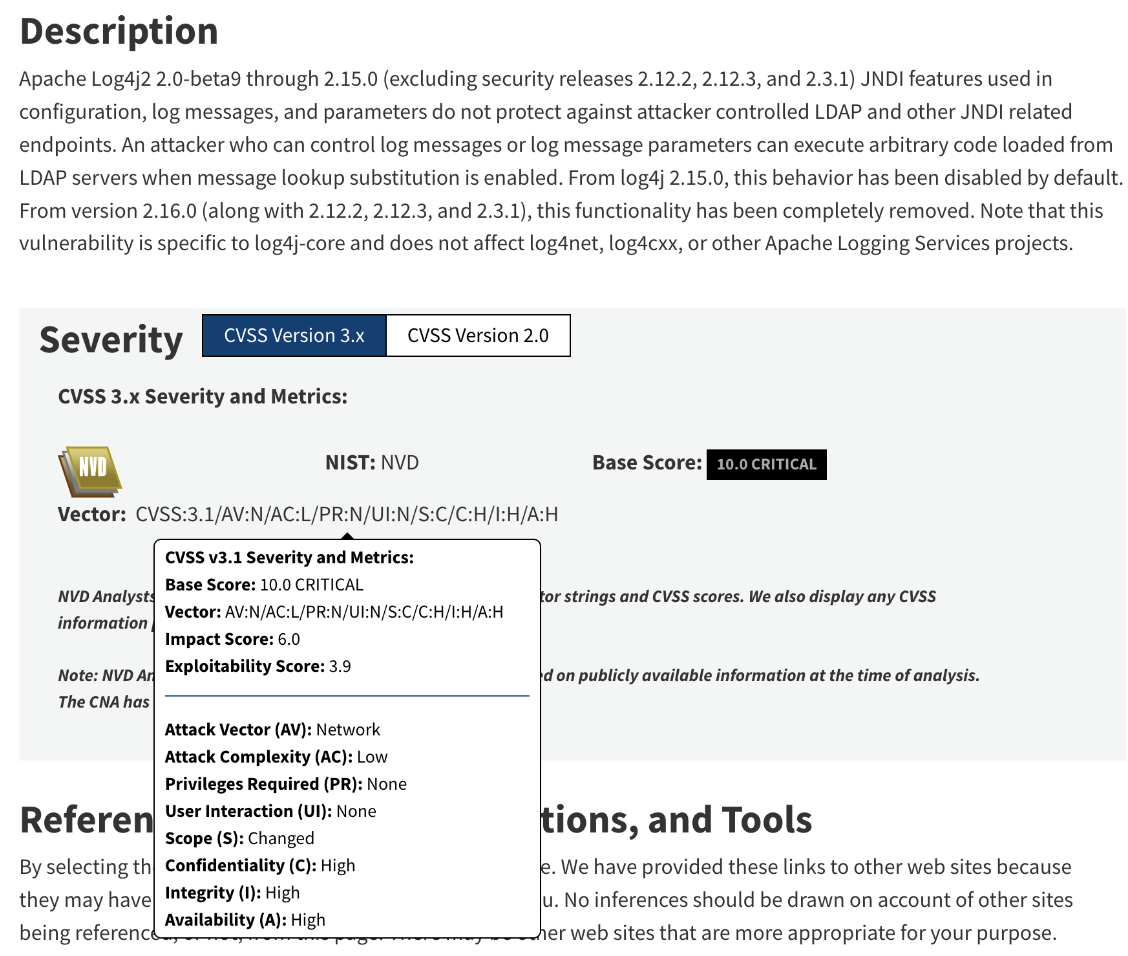

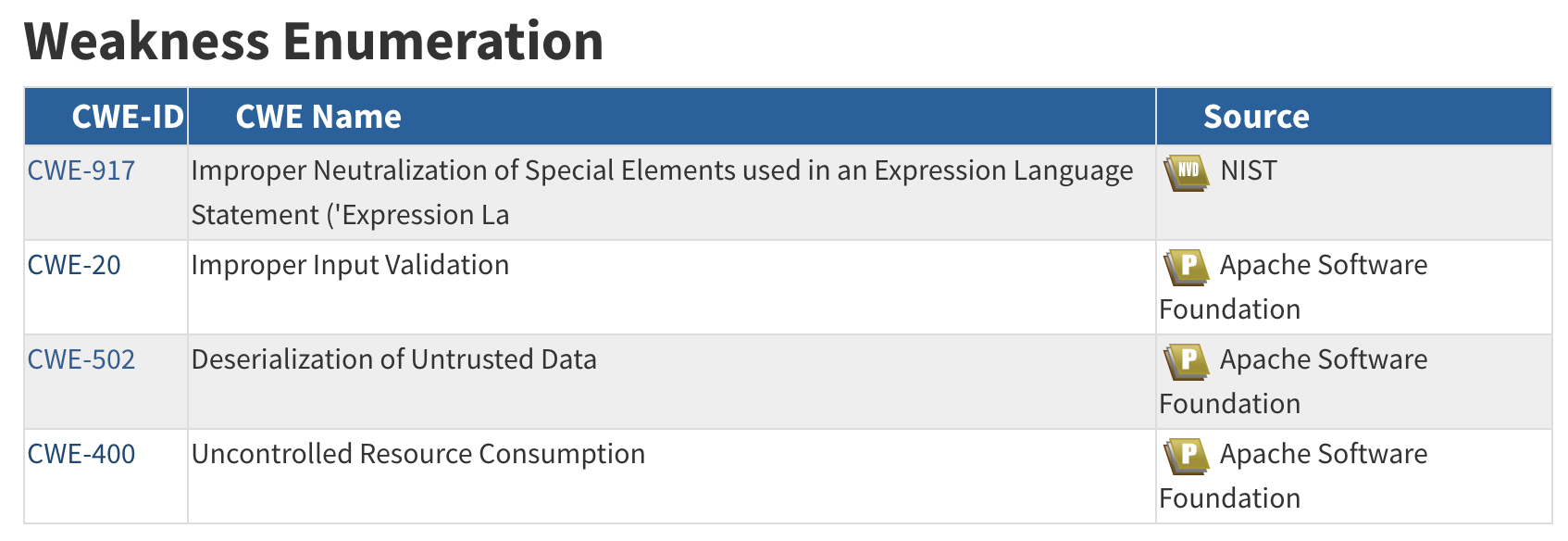

- A CVE may have zero or more CWEs associated with it e.g. Log4Shell CVE-2021-44228 has 4 CWEs

- A CWE may have zero or more Common Consequences/Technical Impacts associated with it e.g. Log4Shell CWE-917 has 2.

- A CWE may be associated with zero or more CVEs.
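
The many-to-many relationship between CVEs and CWEs described above can be represented as a mapping and its inverse. This is a minimal sketch: the four CWE IDs shown for Log4Shell are an assumption based on the public NVD entry and should be checked against current data, CVE-2099-0001 is a made-up identifier, and the `invert` helper is hypothetical.

```python
from collections import defaultdict

# CVE -> CWEs. A CVE may have zero or more CWEs.
cve_to_cwes = {
    # Assumption: CWE IDs as listed for Log4Shell in NVD; verify before use.
    "CVE-2021-44228": ["CWE-20", "CWE-400", "CWE-502", "CWE-917"],
    "CVE-2023-22665": ["CWE-917"],
    "CVE-2099-0001": [],  # made-up CVE with no CWE assigned
}


def invert(mapping):
    """Build CWE -> set of CVEs from CVE -> list of CWEs."""
    inverse = defaultdict(set)
    for cve, cwes in mapping.items():
        for cwe in cwes:
            inverse[cwe].add(cve)
    return dict(inverse)


cwe_to_cves = invert(cve_to_cwes)
print(sorted(cwe_to_cves["CWE-917"]))
```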

To understand MITRE CAPEC vs MITRE ATT&CK, see https://capec.mitre.org/about/attack_comparison.html.

Quote

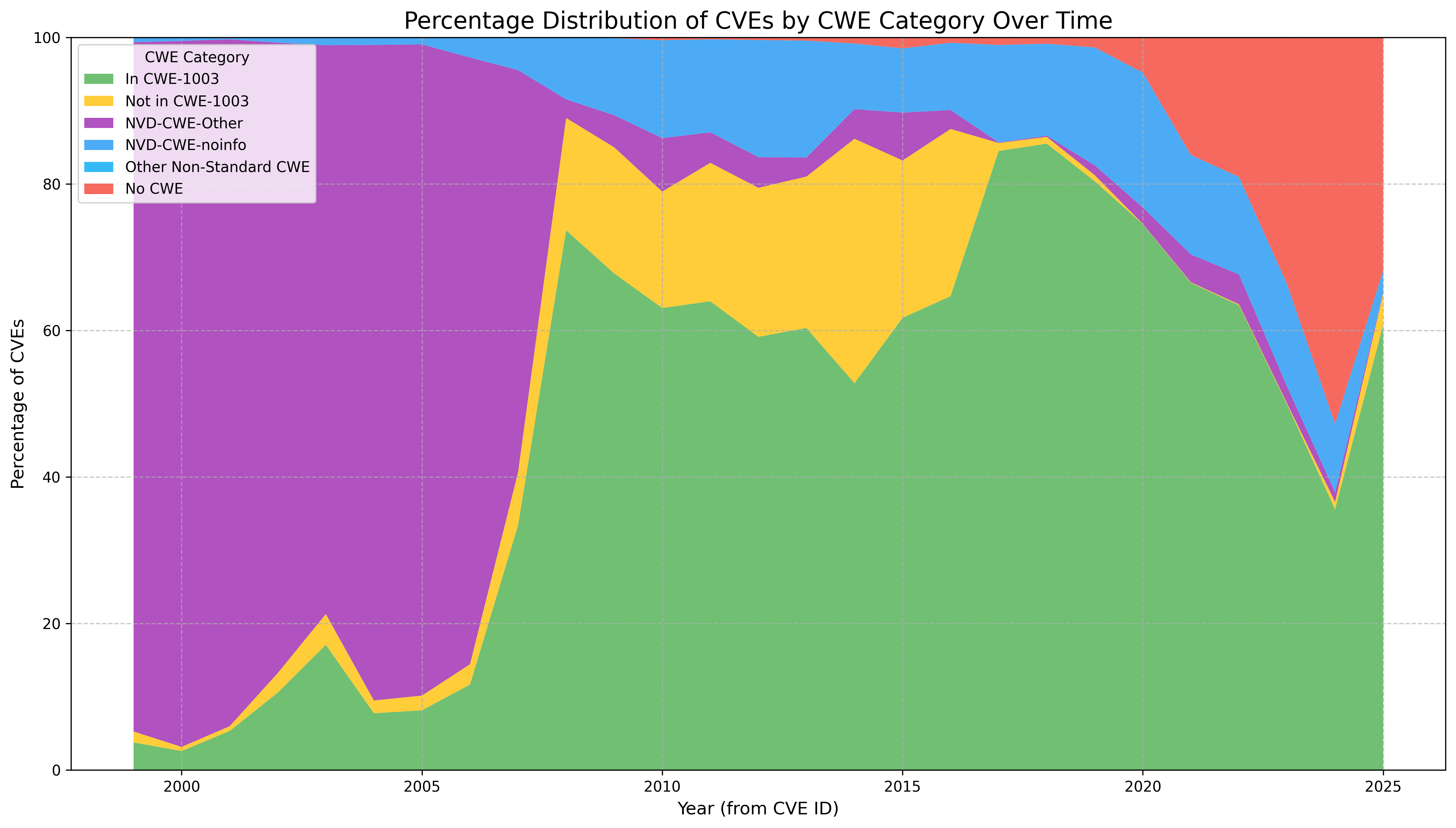

“NVD is using CWE as a classification mechanism that differentiates CVEs by the type of vulnerability they represent.”

“The NVD makes use of a subset of the entire CWE List, which is enumerated by the CWE-1003 (Weaknesses for Simplified Mapping of Published Vulnerabilities) view. NVD analysts will associate the most specific CWE value within the CWE-1003 view based on the publicly available information at the time of analysis.” https://nvd.nist.gov/vuln/cvmap/How-We-Assess-Acceptance-Levels, https://nvd.nist.gov/vuln/categories

Takeaways

- The count of published CVEs per year is increasing at a very significant rate.

- Organizations need an effective prioritization method to know what to remediate first.

- CISA KEV is a source of vulnerabilities that have been exploited in the wild. EPSS gives the probability a vulnerability will be exploited in the wild (in the next 30 days).

- CISA SSVC is an alternative to CVSS.

- “CWE is the root mistake, which can lead to a vulnerability (tracked by CVE in some cases when known), which can be exploited by an attacker (using techniques covered by CAPEC)”, which can lead to a Technical Impact (or consequence), which can result in a Business Impact

- NVD uses CWE-1003 (Weaknesses for Simplified Mapping of Published Vulnerabilities)

- A CVE may have zero or more CWEs associated with it e.g. Log4Shell has 4 CWEs

- A CWE may have zero or more Common Consequences/Technical Impacts associated with it e.g. Log4Shell CWE-917 has 2.

- A CWE may be associated with zero or more CVEs e.g. CWE-917 is associated with CVE-2023-22665, CVE-2023-41331, and many other CVEs.

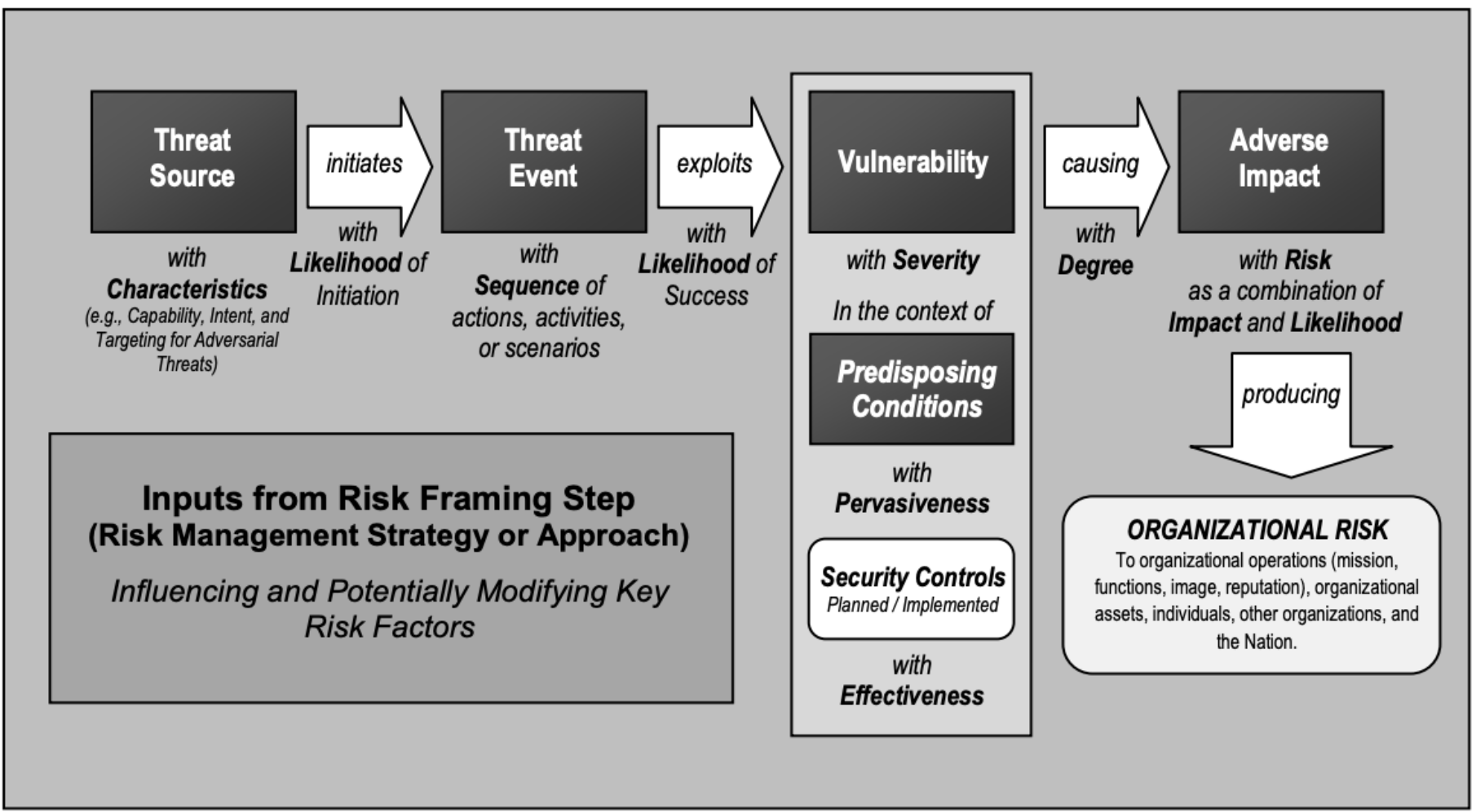

Understanding Risk¶

Overview

To understand how to Prioritize CVEs by Risk, we need to understand Risk, and how we can use the building blocks (standards, key risk factors) to inform risk.

This section gives:

- Definition of Risk and the key risk factors

- Risk Taxonomy or orientation map, showing where CVSS, CISA KEV, EPSS fit

- The different data sources that can be used to inform the key risk factors

Risk Definition¶

Risk Definition from NIST Special Publication 800-30 r1 Guide for Conducting Risk Assessments

Risk is per Asset and depends on Impact of a Vulnerability being exploited by a Threat

- RISK A measure of the extent to which an entity is threatened by a potential circumstance or event, and typically is a function of:

- the adverse impact, or magnitude of harm, that would arise if the circumstance or event occurs; and

- the likelihood of occurrence.

- Threat the potential for a threat-source to successfully exploit a particular information system vulnerability.

- Vulnerability Weakness in an information system, system security procedures, internal controls, or implementation that could be exploited by a threat source

- Impact The magnitude of harm that can be expected to result from the consequences of unauthorized disclosure of information, unauthorized modification of information, unauthorized destruction of information, or loss of information or information system availability.

- Asset The data, personnel, devices, systems, and facilities that enable the organization to achieve business purposes.

Risk Remediation Taxonomy¶

"Risk Remediation Taxonomy based on a BSides Conference presentation"

Risk is per Asset and depends on the Impact of a Vulnerability being exploited by a Threat

- The Vulnerability branch indicates there can be multiple vulnerabilities for a given Asset

  - Each vulnerability can be considered separately with its associated Threat and Impact

    - The Threat ("the potential for a threat-source to successfully exploit a particular information system vulnerability") depends on

      - Evidence of Exploit activity, or Probability of Exploit activity

        - e.g. a vulnerability is known to be actively exploited in the wild

        - e.g. a vulnerability has no known exploitation, no known proof of concept, and no known implementation

      - The Exploitability of the Vulnerability

        - e.g. a vulnerability that can be exploited remotely and automatically via a generic tool/script, with no permissions or user interaction required, has high Exploitability.

        - e.g. a vulnerability that requires local access, special permissions, bypass of a security feature, and user interaction has low Exploitability.

- The Asset branch is for one Asset

  - An Asset can be impacted by multiple Vulnerabilities and Threats (the Vulnerability and Threat red boxes include multiple sections to convey there are multiple)

  - Likelihood of Exploitation can also be viewed from an Asset point of view (i.e. for all Threats and Vulnerabilities that affect that asset)

- Remediation

  - Remediation is part of the overall Risk Remediation picture - but will not be covered here.

Risk Remediation Taxonomy Detailed¶

Where CVSS, EPSS, CISA KEV Fit¶

Adding more detail to the Vulnerability branch, to show where CVSS, EPSS, CISA KEV fit...

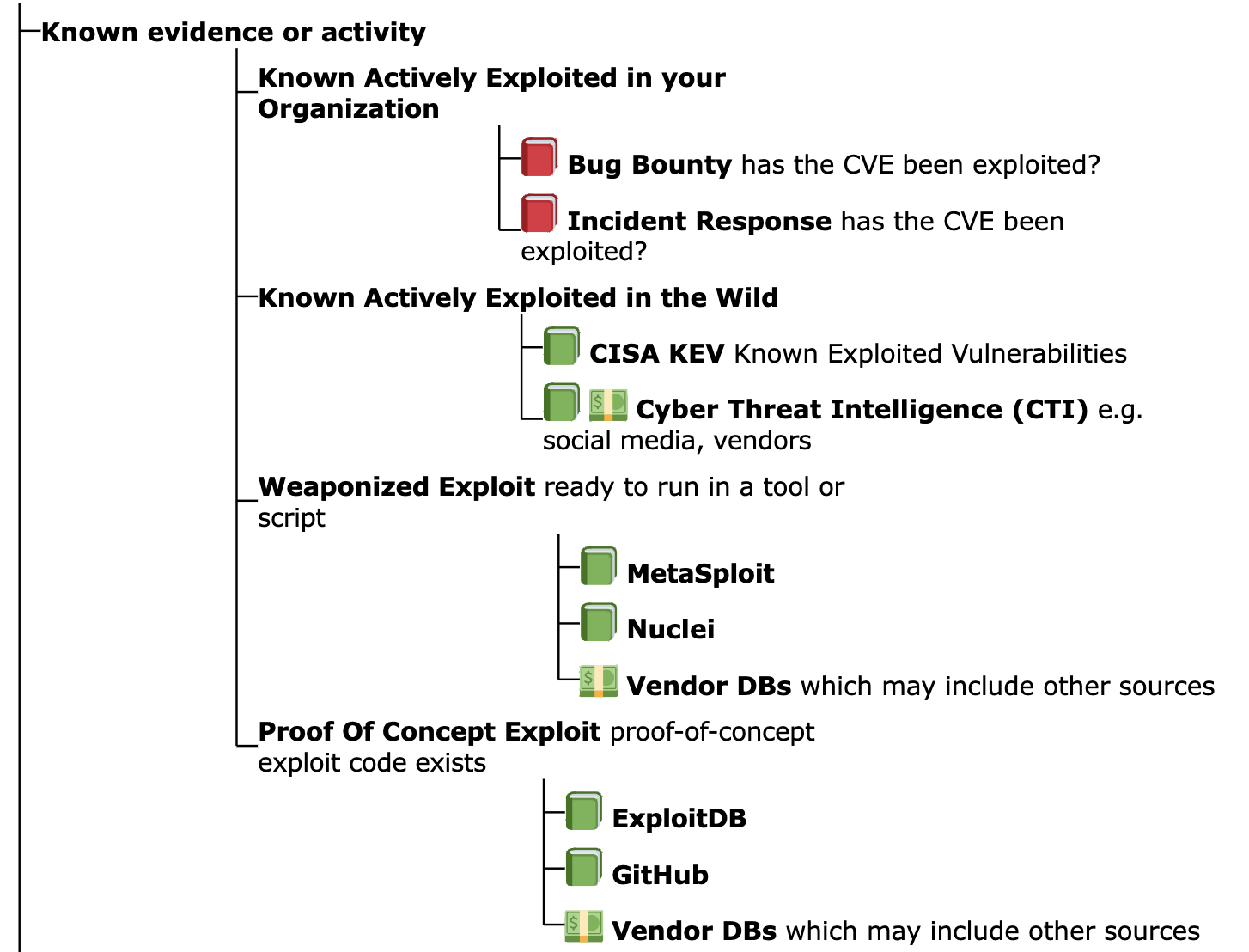

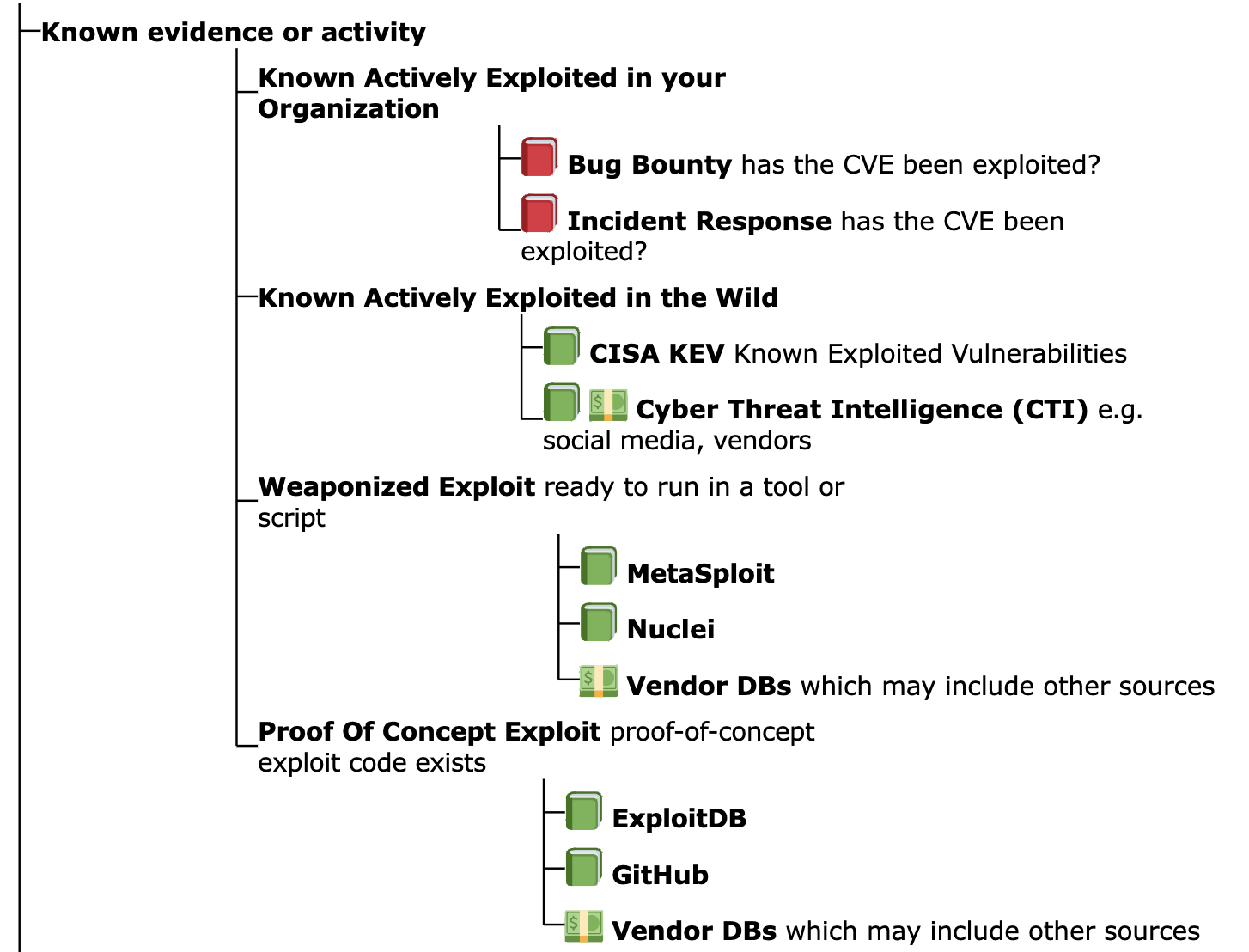

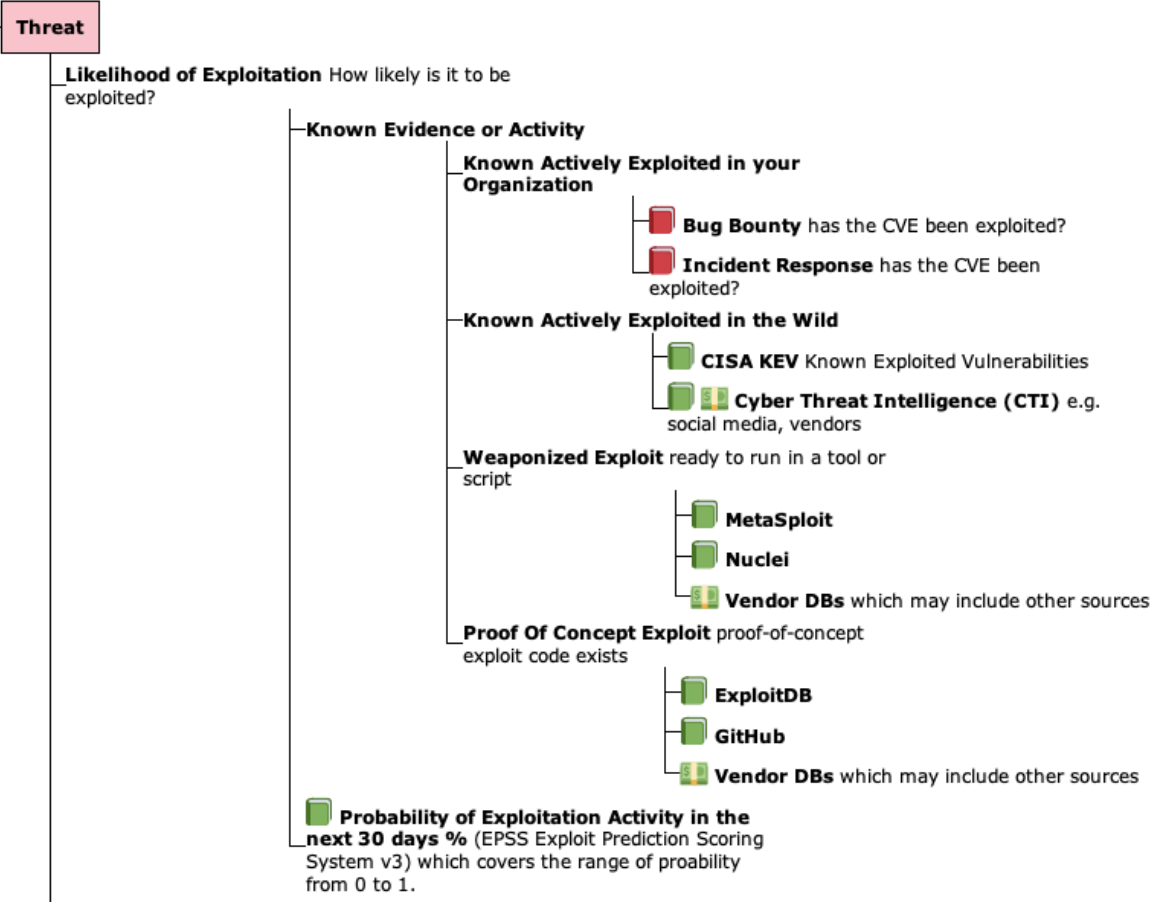

Threat Likelihood of Exploitation Data Sources¶

The Threat "Likelihood of Exploit" branch items are ordered with the most likely on top:

- Known Actively Exploited in your organization

  - If a Vulnerability was previously exploited in your organization, then it's reasonable to think that Vulnerability is most likely to be exploited again in your organization in the future.

- Known Actively Exploited in the wild

  - CISA KEV lists a subset of known actively exploited Vulnerabilities in the wild.

  - VulnCheck KEV lists additional known actively exploited Vulnerabilities in the wild (and includes CISA KEV CVEs)

  - There isn't an authoritative common public list of ALL Vulnerabilities that are Known Actively Exploited in the wild.

- Weaponized Exploit

  - A Vulnerability with a "Weaponized Exploit" (but not yet Known Exploited) is more likely to be exploited than a Vulnerability with "Proof Of Concept Exploit" available

    - "vulnerabilities with published exploit code are as much as 7 times as likely to be exploited in the wild"

    - "If it’s ‘weaponized’ (think metasploit), the odds of a vulnerability being exploited in the wild really balloon from about 3.7% to 37.1%." Per Jay Jacobs, Cyentia

- Proof Of Concept Exploit

  - ExploitDB is an example of where Vulnerability Proof Of Concept Exploits are available.

- EPSS Probability of Exploitation

  - The EPSS score covers the range of Likelihood of Exploitation from 0 to 100%.
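
The ordering above lends itself to a simple sort key: rank by the strongest exploitation evidence first, then by EPSS. The sketch below is one possible reading, not a scheme defined by this guide; the field names, the tie-break on EPSS within a tier, and the CVE identifiers are assumptions made for illustration.

```python
# Evidence tiers, most likely to be exploited first (assumed field names).
TIERS = ["exploited_in_org", "exploited_in_wild", "weaponized", "poc"]


def priority_key(cve):
    """Sort key: evidence tier first, then higher EPSS first."""
    tier = next((i for i, name in enumerate(TIERS) if cve.get(name)), len(TIERS))
    return (tier, -cve.get("epss", 0.0))


# Made-up CVEs, for illustration only.
cves = [
    {"cve": "CVE-2099-0001", "epss": 0.40},
    {"cve": "CVE-2099-0002", "poc": True, "epss": 0.05},
    {"cve": "CVE-2099-0003", "exploited_in_wild": True, "epss": 0.90},
    {"cve": "CVE-2099-0004", "weaponized": True, "epss": 0.30},
    {"cve": "CVE-2099-0005", "epss": 0.70},
]

ranked = [c["cve"] for c in sorted(cves, key=priority_key)]
print(ranked)
```

Note that a CVE with only a proof of concept and a low EPSS score still ranks above a CVE with a higher EPSS score and no exploit evidence here; whether that is desirable is a policy choice for your organization.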

Why Should I Care?¶

Prioritizing by exploitation reduces cost and risk

Prioritizing vulnerabilities that are being exploited in the wild, or are more likely to be exploited, reduces the

- cost of vulnerability management

- risk by reducing the time adversaries have access to vulnerable systems they are trying to exploit

Quote

- "many vulnerabilities classified as “critical” are highly complex and have never been seen exploited in the wild - in fact, less than 4% of the total number of CVEs have been publicly exploited" (see BOD 22-01: Reducing the Significant Risk of Known Exploited Vulnerabilities).

Cybersecurity and Infrastructure Security Agency emphasizes prioritizing remediation of vulnerabilities that are known exploited in the wild:

- "As a top priority, focus your efforts on patching the vulnerabilities that are being exploited in the wild or have competent compensating control(s) that can. This is an effective approach to risk mitigation and prevention, yet very few organizations do this. This prioritization reduces the number of vulnerabilities to deal with. This means you can put more effort into dealing with a smaller number of vulnerabilities for the greater benefit of your organization's security posture."

How Many Vulnerabilities are Being Exploited?¶

Only about 5% or fewer of all CVEs have been exploited

- “Less than 3% of vulnerabilities have weaponized exploits or evidence of exploitation in the wild, two attributes posing the highest risk,” Qualys

- “Only 3 percent of critical vulnerabilities are worth prioritizing,” https://www.datadoghq.com/state-of-application-security/

- “Less than 4% of the total number of CVEs have been publicly exploited”, CISA KEV

- “We observe exploits in the wild for 5.5% of vulnerabilities in our dataset,” Jay Jacobs, Sasha Romanosky, Idris Adjerid, Wade Baker

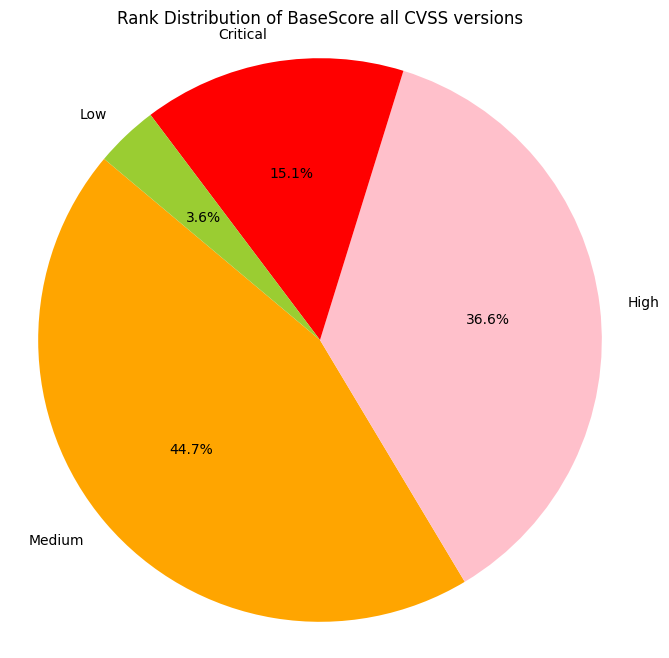

In contrast, for CVSS (Base Scores):

- ~15% of CVEs are ranked Critical (9+)

- ~65% of CVEs are ranked Critical or High (7+)

- ~96% of CVEs are ranked Critical or High or Medium (4+)

What Vulnerabilities are Being Exploited?¶

There isn't an authoritative common public list of ALL CVEs that are Known Actively Exploited in the wild

There is considerable variation in the

- Total Number of Vulnerabilities being Exploited from different sources (per above)

- The criteria for "Known Actively Exploited in the wild".

CISA KEV includes a subset of Vulnerabilities that are Known-Exploited in the wild.

CISA KEV currently includes ~1.1K CVEs, and defines criteria for inclusion

CISA Known Exploited Vulnerabilities Catalog (CISA KEV) is a source of vulnerabilities that have been exploited in the wild

There are several criteria, including:

Quote

"A vulnerability under active exploitation is one for which there is reliable evidence that execution of malicious code was performed by an actor on a system without permission of the system owner."

"Events that do not constitute as active exploitation, in relation to the KEV catalog, include:

- Scanning

- Security research of an exploit

- Proof of Concept (PoC)

EPSS provides a probability of exploitation for all published CVEs (in the next 30 days)

For EPSS, the criterion for exploit evidence (used to feed the model) is a detection of traffic matching an intrusion detection/prevention system (IDS/IPS) or honeypot signature (not a successful exploitation).

Scanning would likely trigger a detection. In contrast, for CISA KEV, scanning does not constitute active exploitation.

Various CTI sources list other Known-Exploited CVEs

There are numerous Cyber Threat Intelligence sources e.g. vendors, publicly available data, that provide lists of CVEs that are Known-Exploited in addition to those listed on CISA KEV.

CVSS¶

CVSS Base Score should not be used alone to assess risk!

CVSS Base Score¶

Quote

CVSS Base (CVSS-B) scores are designed to measure the severity of a vulnerability and should not be used alone to assess risk. https://www.first.org/cvss/v4.0/user-guide#CVSS-Base-Score-CVSS-B-Measures-Severity-not-Risk

The CVSS Base score is not a good indicator of likelihood of exploit (it is not designed or intended to be)

- "There’s no inherent correlation between the vulnerability and if threat actors are exploiting them in terms of those severity ratings" Gartner, Nov 2021

- "CVSS score performs no better than randomly picking vulnerabilities to remediate and may lead to negligible risk reductions" Comparing Vulnerability Severity and Exploits Using Case-Control Studies, 2014

Quote

The Base metric group represents the intrinsic characteristics of a vulnerability that are constant over time and across user environments. It is composed of two sets of metrics: the Exploitability metrics and the Impact metrics.

- The Exploitability metrics reflect the ease and technical means by which the vulnerability can be exploited. That is, they represent characteristics of the “thing that is vulnerable”, which we refer to formally as the “vulnerable system”.

- The Impact metrics reflect the direct consequence of a successful exploit, and represent the consequence to the “things that suffer the impact”, which may include impact on the vulnerable system and/or the downstream impact on what is formally called the “subsequent system(s)”.

The CVSS Threat Metric Group contains an Exploit Maturity metric, but it is the responsibility of the CVSS Consumer/user to populate its values.

CVSS Exploit Maturity¶

See section CVSS Exploit Maturity for more details.

Quote

This metric measures the likelihood of the vulnerability being attacked, and is based on the current state of exploit techniques, exploit code availability, or active, “in-the-wild” exploitation. Public availability of easy-to-use exploit code or exploitation instructions increases the number of potential attackers by including those who are unskilled. Initially, real-world exploitation may only be theoretical. Publication of proof-of-concept exploit code, functional exploit code, or sufficient technical details necessary to exploit the vulnerability may follow. Furthermore, the available exploit code or instructions may progress from a proof-of-concept demonstration to exploit code that is successful in exploiting the vulnerability consistently. In severe cases, it may be delivered as the payload of a network-based worm or virus or other automated attack tools.

The Threat Likelihood of Exploitation Data Sources can be used here.

If there is an absence of exploitation evidence, then EPSS can be used to estimate the probability it will be exploited

EPSS and Exploitation Evidence¶

"If there is evidence that a vulnerability is being exploited, then that information should supersede anything EPSS has to say, because again, EPSS is pre-threat intel. If there is an absence of exploitation evidence, then EPSS can be used to estimate the probability it will be exploited." https://www.first.org/epss/faq

The "Known Evidence or Activity branch" lists data sources where we can get that evidence of exploitation.
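The precedence described in the EPSS FAQ quote can be sketched as a small lookup. This is a minimal illustration only: the function name, the input shapes (a set of CVE IDs with exploitation evidence, a dict of EPSS scores), and the CVE IDs and scores are all made up for the example.

```python
def exploitation_signal(cve_id, known_exploited, epss_scores):
    """Pick the exploitation signal for a CVE: evidence first, EPSS otherwise."""
    if cve_id in known_exploited:
        # Evidence of exploitation supersedes whatever EPSS says.
        return ("evidence", None)
    if cve_id in epss_scores:
        # No evidence: fall back to the EPSS probability (0.0 to 1.0).
        return ("epss", epss_scores[cve_id])
    # No evidence and no EPSS score, e.g. the CVE is not published yet.
    return ("none", None)


# Hypothetical identifiers and scores, for illustration only.
known_exploited = {"CVE-0000-0001"}
epss_scores = {"CVE-0000-0001": 0.02, "CVE-0000-0002": 0.41}

print(exploitation_signal("CVE-0000-0001", known_exploited, epss_scores))
print(exploitation_signal("CVE-0000-0002", known_exploited, epss_scores))
```

Note that the first CVE is reported as exploited even though its (made-up) EPSS score is low, which is the point of letting evidence supersede the estimate.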

Zero Days¶

Quote

EPSS scores won’t be available for Zero Days (because EPSS depends on the CVE being published and it can take several days for the associated CVE to be published).

"The State of Exploit Development: 80% of Exploits Publish Faster than CVEs".

Quote

A zero-day vulnerability is a flaw in software or hardware that is unknown to a vendor prior to its public disclosure, or has been publicly disclosed prior to a patch being made available. As soon as a zero day is disclosed and a patch is made available it, of course, joins the pantheon of known vulnerabilities.

Don’t go chasing zero days, patch your known vulnerabilities instead….

Vulnerabilities increase risk, whether or not they start as zero days. We advise organizations to operate with a defensive posture by applying available patches for known, exploited vulnerabilities sooner rather than later.

Quote

Zero day vulnerabilities made up only approximately 0.4% of vulnerabilities during the past decade. The amount spent on trying to detect them is out of kilter with the actual risks they pose. This is compared with the massive numbers of breaches and infections that come from a small number of known vulnerabilities that are being repeatedly exploited. As a top priority, focus your efforts on patching the vulnerabilities that are being exploited in the wild or have competent compensating control(s) that can. This is an effective approach to risk mitigation and prevention, yet very few organizations do this.

Focus on the Biggest Security Threats, Not the Most Publicized, Gartner, Nov 2017

Takeaways

- CVSS or EPSS should not be used alone to assess risk - they can be used together:

- CVSS Base Score is a combination of Exploitability and Impact

- Various data sources can be used as evidence of exploitation activity or likelihood of exploitation activity - but there isn't

- a single authoritative source

- an industry standard on how to do this

- EPSS should be used with other exploitation evidence; if there is an absence of exploitation evidence, then EPSS can be used to estimate the probability it will be exploited.

- EPSS scores won't be available for Zero-Days

- "Don’t go chasing zero days, patch your known vulnerabilities instead"

- It is the responsibility of the CVSS Consumer/user to populate the CVSS Exploit Maturity values i.e. unlike the CVSS Base Score, these are not provided.

- Criteria for "Exploitation" are different for EPSS and CISA KEV.

Likelihood of Exploitation Populations¶

Overview

Our ability to remediate depends on

- the priority (risk) of CVEs - the ones we want to remediate based on our security posture

- In the Understanding Risk chapter, we saw the ordered Likelihood of Exploitation for different populations of CVEs in the Risk Remediation Taxonomy.

- the number of CVEs for that priority (risk) - that we have the capacity/resources to fix

This section gives

- a view of the sizes of those populations

- the data sources for those populations

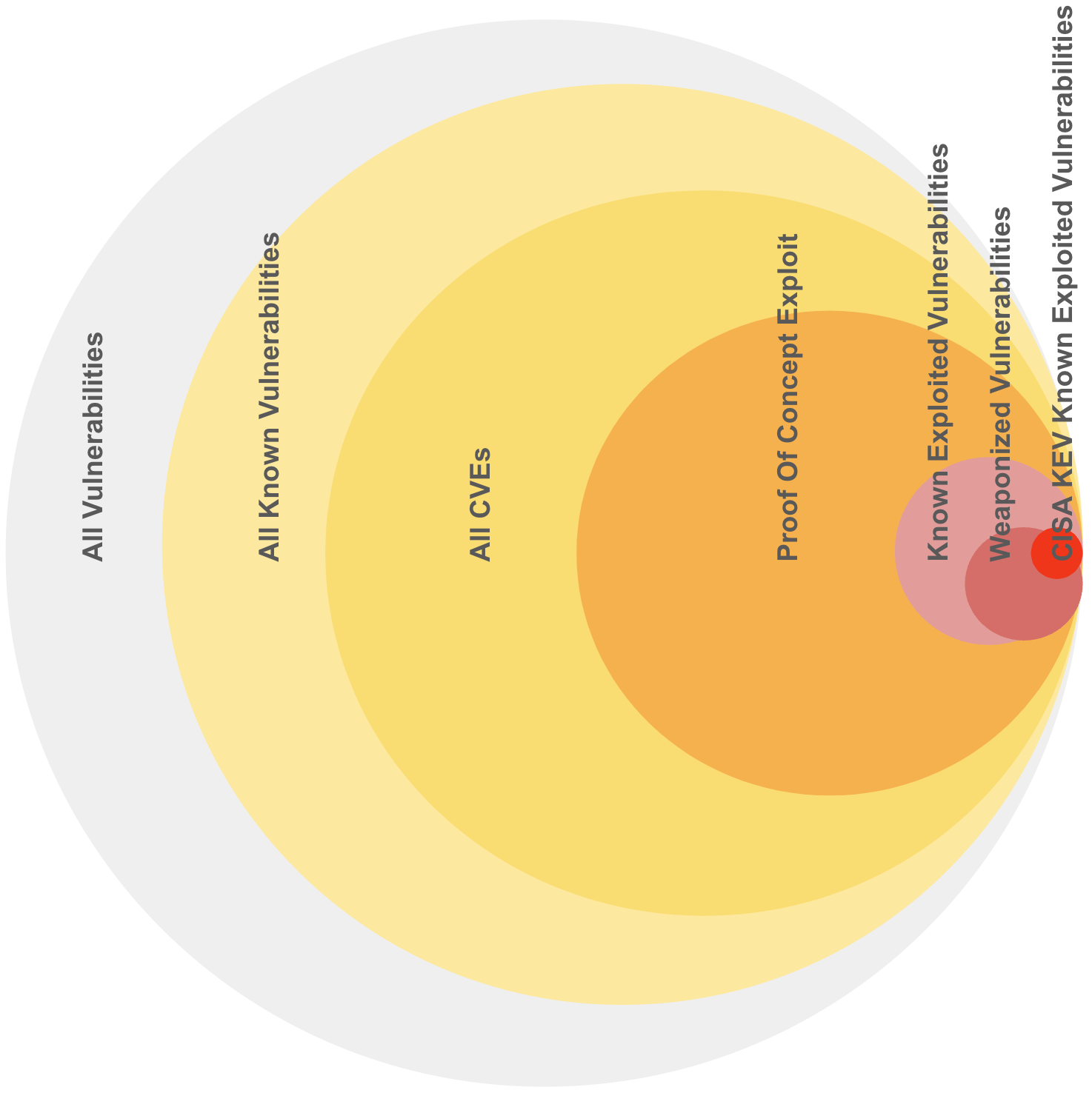

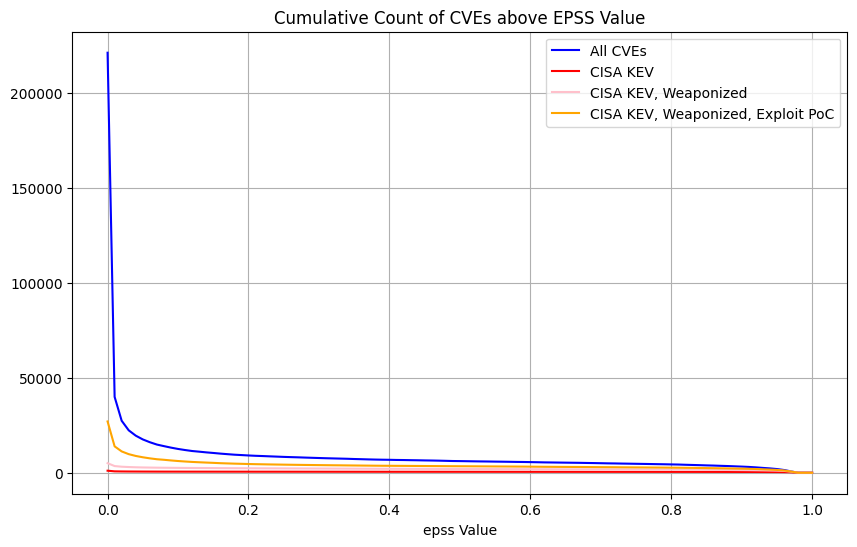

Population Sizes¶

Representative sizes and overlaps are shown, as there isn't authoritative exact data.

- ~~50% (~~100K) of all CVEs (~200K) have known Proof Of Concept exploits available (based on a commercial CTI product used by the author)

- ~~5% (~10K) of all CVEs are actively exploited

- There isn't a single complete authoritative source for these CVEs

- ~~0.5% (~1K) of all CVEs (~200K) are in the CISA Known Exploited Vulnerabilities (KEV) catalog

CVEs represent a subset of all vulnerabilities. Your organization will have a subset of these CVEs

- Not all exploits are public/known.

- Not all public exploits have CVEs.

- A typical enterprise will have a subset of exploits/CVEs: ~~10K order of magnitude unique CVE IDs.

- The counts of these unique CVE IDs may follow a Pareto type distribution i.e. there will be many instances of a small number of CVE IDs.

Quote

We know from executing tens of thousands of pen tests that most exploits don't require a CVE. When we're successful using a CVE, it usually isn't on the KEV list.
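To check whether the CVE IDs in your own environment follow a Pareto-type distribution, you can count how often each CVE ID appears in your findings. The sketch below assumes a hypothetical flat list with one CVE ID per finding; the identifiers are placeholders.

```python
from collections import Counter


def top_cve_share(findings, top_n):
    """Fraction of all findings accounted for by the top_n most frequent CVE IDs."""
    counts = Counter(findings)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(n for _, n in counts.most_common(top_n)) / total


# Hypothetical findings: one entry per (asset, CVE) instance reported by a scanner.
findings = ["CVE-0000-0001"] * 8 + ["CVE-0000-0002", "CVE-0000-0003"]

print(top_cve_share(findings, 1))
```

A high share for a small `top_n` suggests that fixing a handful of CVE IDs would remove most of the instances.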

Likelihood of Exploitation Data Sources¶

This table shows the number of CVEs (from all published CVEs) that are listed in that data source:

| Data Source | Detail | Approx. CVE count (thousands) |
|---|---|---|
| CISA KEV | Active Exploitation | 1 |
| VulnCheck KEV | Active Exploitation | 2 |
| Metasploit modules | Weaponized Exploit | 3 |
| Nuclei templates | Weaponized Exploit | 2 |
| ExploitDB | Published Exploit Code | 25 |

Note

VulnCheck KEV was launched just before this guide was released, so it has not been included in any analysis for the initial release of this guide, but it will likely be included in future releases.

EPSS Scores are available for all published CVEs - and cover the range of Likelihood of Exploitation from 0 to 100%.
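One way to combine several of these sources is to record, for each CVE, the highest level of evidence found. The sketch below assumes you have already loaded each source into a set of CVE IDs; the sets and identifiers shown are placeholders, not real data.

```python
def highest_evidence(cve_id, sources):
    """Return the first (highest) evidence level whose source lists the CVE.

    `sources` is a list of (detail, set_of_cve_ids) ordered from the highest
    Likelihood of Exploitation to the lowest.
    """
    for detail, cve_ids in sources:
        if cve_id in cve_ids:
            return detail
    return None


# Hypothetical contents; in practice these sets would be loaded from the data sources.
sources = [
    ("Active Exploitation", {"CVE-0000-0001"}),
    ("Weaponized Exploit", {"CVE-0000-0001", "CVE-0000-0002"}),
    ("Published Exploit Code", {"CVE-0000-0002", "CVE-0000-0003"}),
]

for cve in ("CVE-0000-0001", "CVE-0000-0002", "CVE-0000-0003", "CVE-0000-0004"):
    print(cve, highest_evidence(cve, sources))
```

Because the sources overlap, the ordering of the list is what decides which label a CVE gets.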

Proof of concept code for an exploit is not a good indication that an exploit will actually show in the wild

"The presence of a vulnerability in the EDB, i.e. if there exists a proof of concept code for the exploit, is not a good indication that an exploit will actually show in the wild." A Preliminary Analysis of Vulnerability Scores for Attacks in Wild, 2012, Allodi, Massacci

The population sizes for Likelihood of Exploitation decrease, as Likelihood of Exploitation increases

The population sizes for higher Likelihood of Exploitation (Active ~~5%, Weaponized ~~3%) are relatively small compared to Proof Of Concept (~~50%), and All CVEs (100%).

This table lists the main public data sources.

Other Vulnerability Data Sources¶

In addition, there are many more Vulnerability Data Sources:

- Open Source vulnerability database

- "This infrastructure serves as an aggregator of vulnerability databases that have adopted the OSV schema, including GitHub Security Advisories, PyPA, RustSec, and Global Security Database, and more."

- Red Hat Security Advisories/RHSB

- Go Vulnerability Database

- Dell Security Advisory

- Qualys Vulnerability database

- Tenable Vulnerability database

- Trickest "Almost every publicly available CVE PoC"

Cyber Threat Intelligence vendors may provide an aggregation of this data.

Takeaways

- There isn't a single complete authoritative source for all CVEs that are actively exploited - so we need to use multiple incomplete imperfect sources.

- The population sizes for higher Likelihood of Exploitation (Active ~~5%, Weaponized ~~3%) are relatively small compared to Proof Of Concept (~~50%), and All CVEs (100%).

- Not all vulnerabilities are public/known, and for those that are known, not all of them have CVEs assigned.

- A typical enterprise will have a subset of exploits/CVEs: ~~10K order of magnitude unique CVE IDs.

- The counts of these unique CVE IDs may follow a Pareto type distribution i.e. for your environment, there will likely be many instances of a small number of CVE IDs.

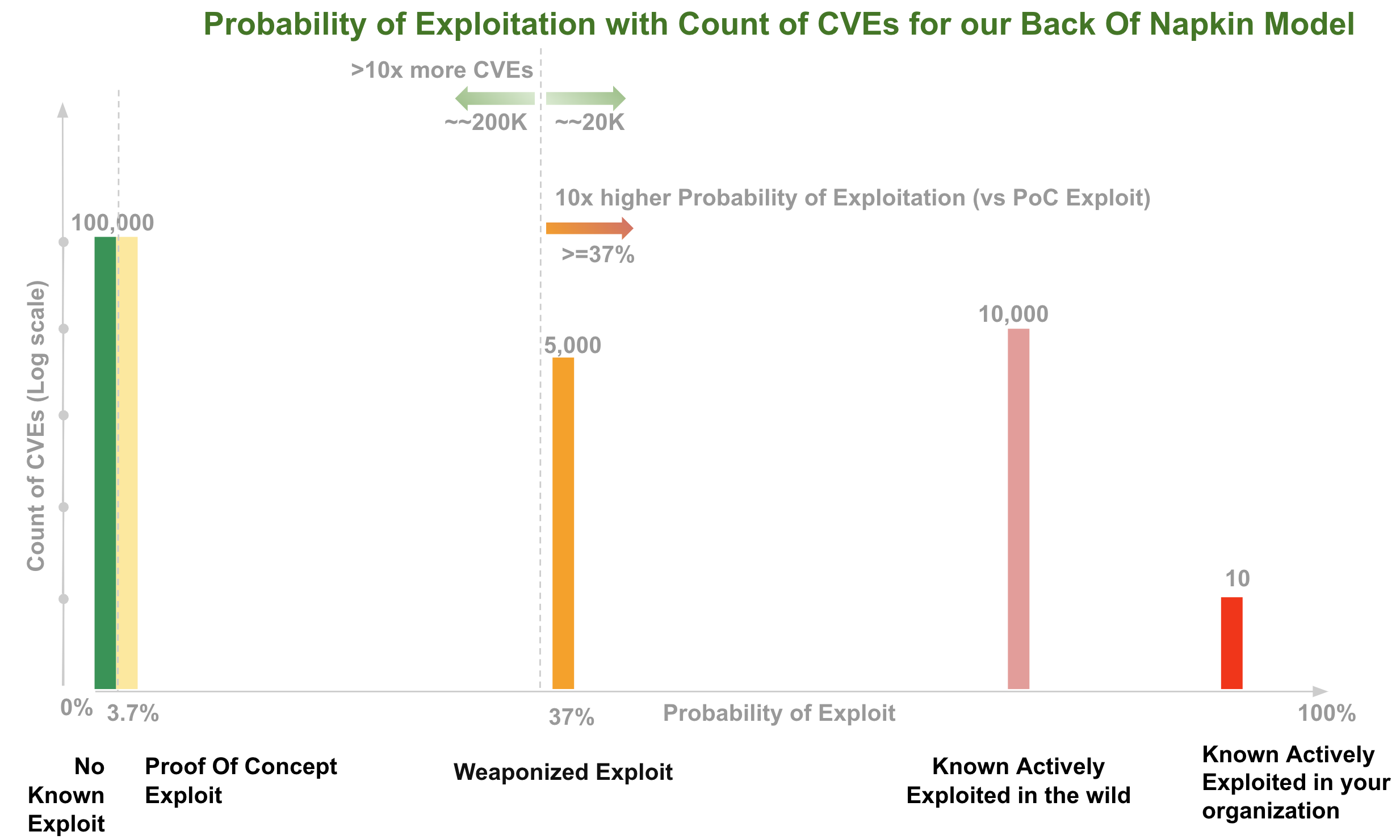

Applied Back of the napkin Likelihood of Exploitation Model¶

Overview

As users, we want to know which CVEs we need to remediate first based on our available resources/capacity to remediate them.

To develop an understanding of Risk Based Prioritization, we'll build a back of the napkin Risk Based Prioritization model that focuses on Likelihood of Exploitation.

We'll look at the tradeoff between Likelihood of Exploitation vs how many CVEs we need to fix.

When we look at Risk Based Prioritization models in products and production later in this guide, you'll be able to recognize many of the elements in our back of the napkin model.

Back of the napkin Model¶

For this back of the napkin model:

- We use Threat Likelihood of Exploitation per our Risk Taxonomy

- For this back of the napkin model, let's assume that

- The odds of a vulnerability being exploited are in the order in the diagram (highest risk on top)

- The probabilities quoted in section "Threat Likelihood of Exploit" are correct (though exact figures don't matter on this back of the napkin - the point is there's a significant difference):

- 37.1% if the vulnerability is weaponized

- 3.7% if a Proof Of Concept exists

- For counts of CVEs

- the counts of CVEs are per "Likelihood of Exploitation Populations" diagram

- there's a relatively small number of vulnerabilities that have been Known Exploited in our organization i.e. 10 or less

- We're looking at all published CVEs.

- You could apply this back of the napkin model to the subset of CVEs in your environment.

- We'll use a very exaggerated figure of ~~20K for CVEs that have weaponized exploits or evidence of exploitation in the wild (as it's a back of the napkin exercise and we want to understand if our model is still useful in a well beyond worst case scenario):

- So that gives ~~10% of CVEs that have weaponized exploits or evidence of exploitation in the wild

- In contrast, Qualys gives a much smaller figure, “Less than 3% of vulnerabilities have weaponized exploits or evidence of exploitation in the wild, two attributes posing the highest risk”

- A rough drawing on the back of our napkin of Probability of Exploitation vs the Count of CVEs:

Observations

For this back of the napkin model:

- Using the Weaponized Exploit Probability of Exploitation (37%) as a threshold gives two "10x's" i.e. at this threshold there's

- 10x higher probability of exploitation or greater

- ~~10% of CVEs at or above the threshold (i.e. roughly 10x fewer CVEs to fix than the full population)

- we can see that as we raise the threshold for Probability of Exploit, we have fewer CVEs to fix (but we may miss some CVEs that we should have fixed).

For our organization risk posture, maybe we want to use the lower Probability of Exploitation Threshold associated with a Proof Of Concept exploit being available (3.7%).

- In this case, there are ~~100K CVEs below this threshold in the back of the napkin model, and ~~120K above it (to remediate based on our threshold). So we remediate a lot more CVEs (and a lot more than the ~~5% that are exploited), but we miss far fewer of the ones we should have fixed.

Probability of Exploitation and associated population counts inform Remediation

Having these Probability of Exploitation values vs associated count of CVEs allows us to make an informed decision based on our

- risk posture - using Likelihood of Exploitation

- resources/capacity available to remediate CVEs
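The threshold tradeoff can be reproduced with a few lines of arithmetic. This is a rough sketch using the back of the napkin figures above; grouping weaponized and known-exploited CVEs into one population at 37.1% is a simplifying assumption of this example, and the names are illustrative.

```python
# (population, assumed probability of exploitation, assumed CVE count)
POPULATIONS = [
    ("Weaponized or exploited", 0.371, 20_000),   # deliberately exaggerated count
    ("Proof Of Concept", 0.037, 100_000),
]


def cves_to_remediate(threshold):
    """Count the CVEs in populations at or above the probability threshold."""
    return sum(count for _, prob, count in POPULATIONS if prob >= threshold)


print(cves_to_remediate(0.371))  # weaponized threshold
print(cves_to_remediate(0.037))  # proof of concept threshold
```

Lowering the threshold from the weaponized level to the Proof Of Concept level grows the remediation workload from ~~20K to ~~120K CVEs in this model.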

Back of the napkin Model Remediation Policy¶

Per our Risk Remediation Taxonomy, Likelihood of Exploitation informs one part of Risk per Vulnerability.

To complete our back of the napkin Model for Prioritization we can use e.g. CVSS also.

So our Remediation Policy (for our first pass triage) could be:

- for all CVEs that are at Weaponized Exploitation and above in our Likelihood of Exploitation diagram (~~10% using our beyond worst case figure)

- remediate all CVEs that have a High or Critical CVSS score (~~65%)

This would require fixing 6.5% of CVEs (10% x 65%).
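As a sketch, this first pass triage policy is a two-condition filter. The record fields (`id`, `weaponized_or_exploited`, `cvss_base`) and the sample records are hypothetical. The 6.5% figure also implicitly assumes that the share of High or Critical scores among weaponized CVEs matches the share across all CVEs.

```python
def first_pass_triage(cves):
    """Select CVEs that are weaponized (or worse) AND rated High or Critical."""
    return [
        c["id"]
        for c in cves
        if c["weaponized_or_exploited"] and c["cvss_base"] >= 7.0
    ]


# Hypothetical records, for illustration only.
cves = [
    {"id": "CVE-0000-0001", "weaponized_or_exploited": True, "cvss_base": 9.8},
    {"id": "CVE-0000-0002", "weaponized_or_exploited": True, "cvss_base": 5.3},
    {"id": "CVE-0000-0003", "weaponized_or_exploited": False, "cvss_base": 9.1},
]
print(first_pass_triage(cves))

# Share of all CVEs to fix: 10% weaponized or above x 65% High or Critical.
share = 0.10 * 0.65
print(round(share, 3))
```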

Takeaways

With this very simple back of the napkin model, and very exaggerated counts of weaponized exploitation, we see that by using Likelihood of Exploitation, we need to remediate 1/10 of CVEs versus using CVSS Base Score alone!!!

Ended: Risk

Standards ↵

Common Vulnerability Scoring System (CVSS)¶

Overview

The Common Vulnerability Scoring System (CVSS) is widely used in the cybersecurity industry as a standard method for assessing the "severity" of security vulnerabilities.

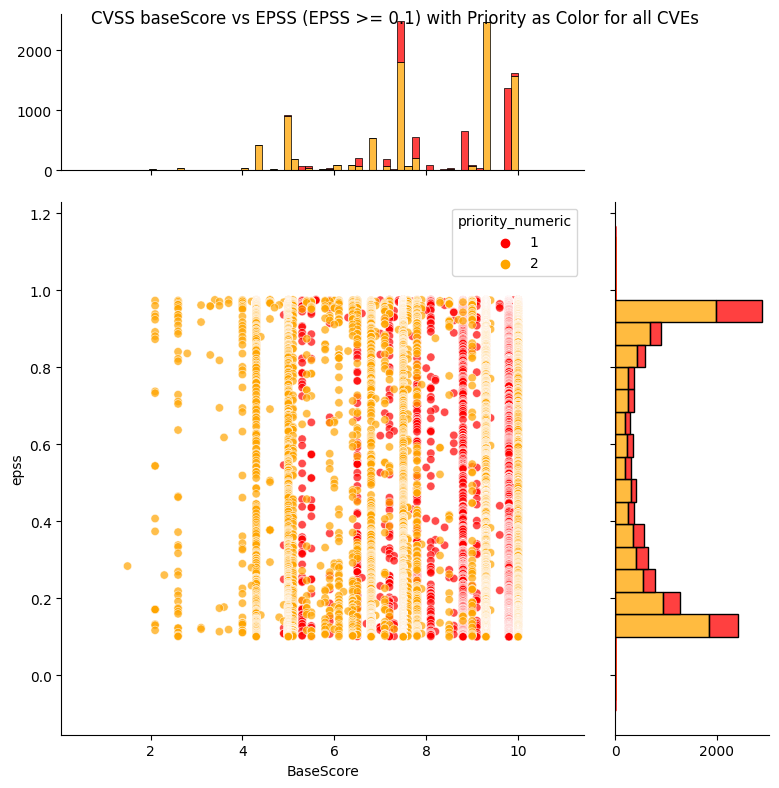

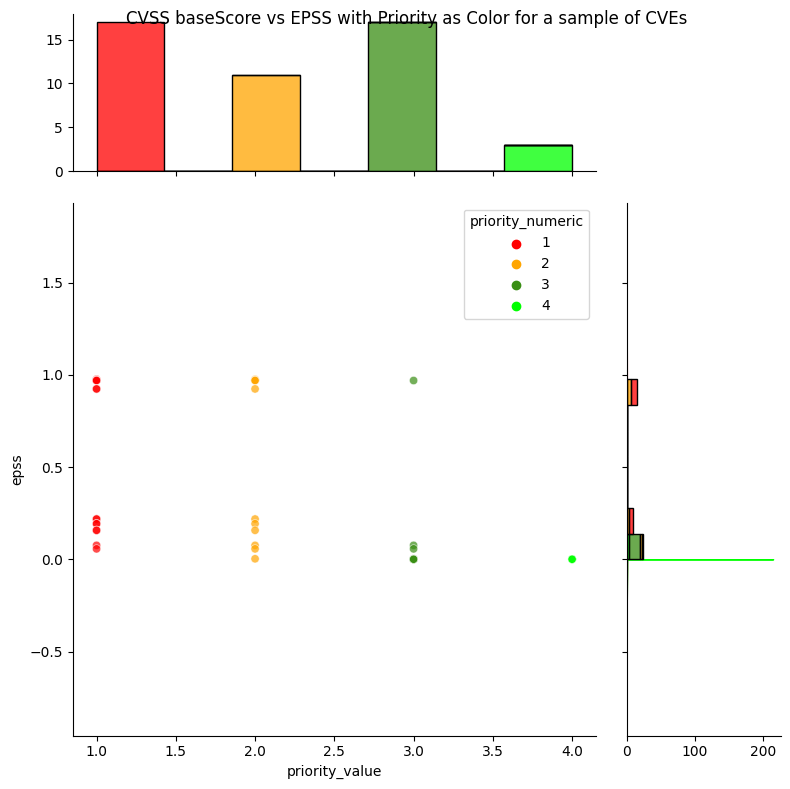

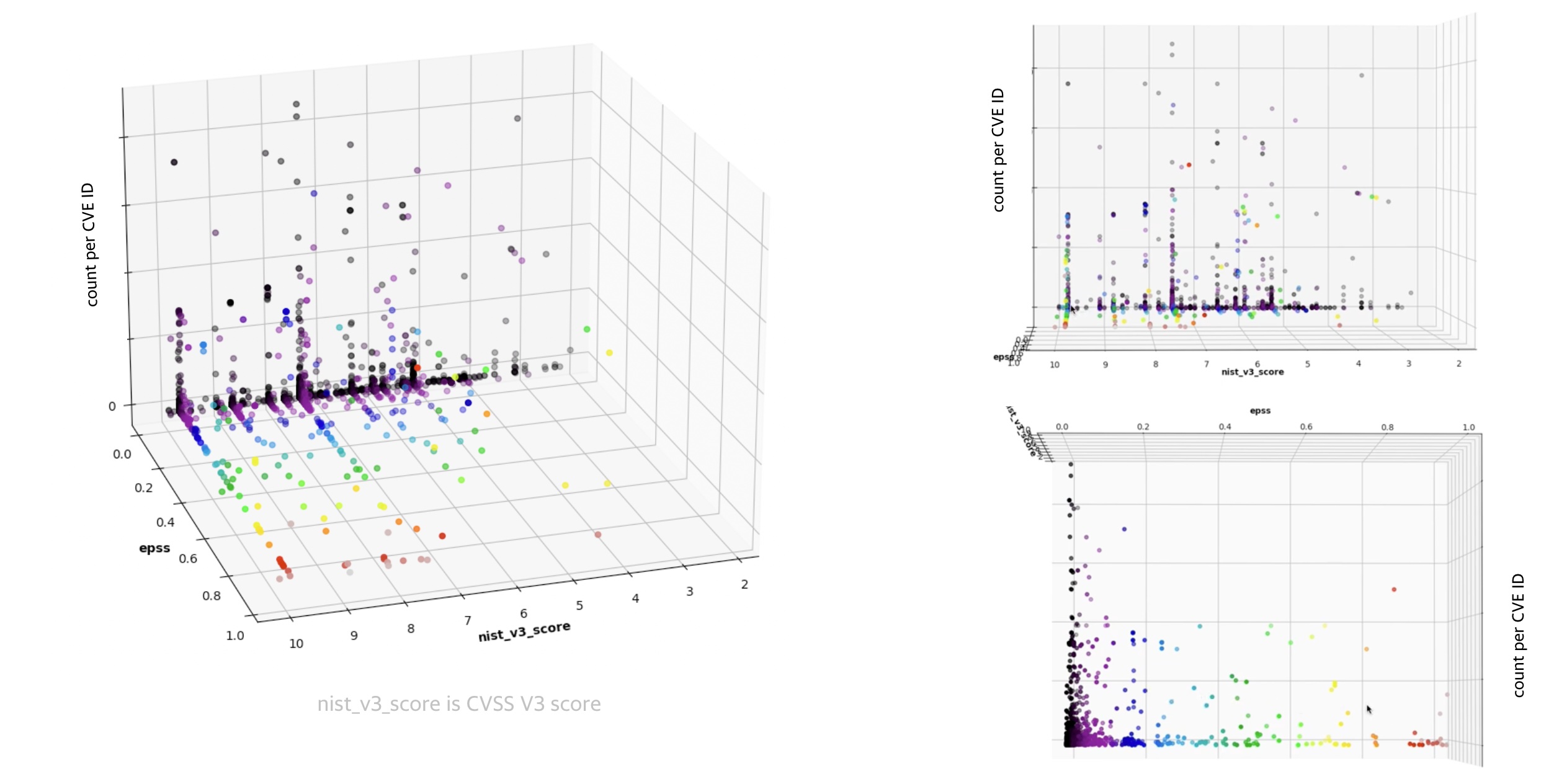

In this section, we analyze CVE CVSS values to understand opportunities for prioritization based on these values:

- CVSS Severity Rating

- CVSS Confidentiality, Integrity, Availability Impacts

CVSS Severity Rating Scale¶

Quote

"The use of these qualitative severity ratings is optional, and there is no requirement to include them when publishing CVSS scores. They are intended to help organizations properly assess and prioritize their vulnerability management processes."

https://www.first.org/cvss/v3.1/specification-document#Qualitative-Severity-Rating-Scale
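For reference, the qualitative severity rating scale in the CVSS v3.1 specification maps score ranges to ratings as sketched below (the function name is illustrative):

```python
def severity_rating(score):
    """Map a CVSS v3.1 score (0.0 to 10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 - 3.9
    if score < 7.0:
        return "Medium"    # 4.0 - 6.9
    if score < 9.0:
        return "High"      # 7.0 - 8.9
    return "Critical"      # 9.0 - 10.0


for s in (0.0, 3.9, 6.9, 7.0, 9.8):
    print(s, severity_rating(s))
```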

Observations

- ~~15% of CVEs are ranked Critical (9+)

- ~~40% of CVEs are ranked High (7.0 - 8.9)

- ~~60% of CVSS v3 CVEs are ranked Critical or High (7+)

- >96% of CVEs are ranked Medium or higher (4+)

Don't use CVSS Base Scores alone to assess risk¶

Don't use CVSS Base Scores alone to assess risk.

Many organizations use CVSS Base Scores alone to assess risk despite repeated guidance against this.

A Critical or High CVSS Severity is not the same as a Critical or High Risk.

There's a ~10x difference in counts of CVEs for these 2 groups:

- >50% of CVEs are ranked Critical or High CVSS rating (CVSS score 7+)

- ~~5% of CVEs are exploited in the wild

Quote

CVSS Base (CVSS-B) scores are designed to measure the severity of a vulnerability and should not be used alone to assess risk.

https://www.first.org/cvss/v4.0/user-guide#CVSS-Base-Score-CVSS-B-Measures-Severity-not-Risk

CVSS Requirements for Regulated Environments¶

Some Regulated Environments requirements appear to conflict with this guidance 🤔

PCI¶

Quote

The Payment Card Industry Data Security Standard (PCI DSS) is an information security standard used to handle credit cards from major card brands. The standard is administered by the Payment Card Industry Security Standards Council, and its use is mandated by the card brands. It was created to better control cardholder data and reduce credit card fraud. Validation of compliance is performed annually or quarterly with a method suited to the volume of transactions

https://en.wikipedia.org/wiki/Payment_Card_Industry_Data_Security_Standard

Quote

PCI DSS 4.0 11.3.2.1 “External vulnerability scans are performed after any significant change as follows: Vulnerabilities that are scored 4.0 or higher by the CVSS are resolved.”

https://docs-prv.pcisecuritystandards.org/PCI%20DSS/Standard/PCI-DSS-v4_0.pdf

FedRAMP¶

Quote

The Federal Risk and Authorization Management Program (FedRAMP) is a United States federal government-wide compliance program that provides a standardized approach to security assessment, authorization, and continuous monitoring for cloud products and services https://en.wikipedia.org/wiki/FedRAMP

Quote

3.0 Scanning Requirements

Common Vulnerability Scoring System (CVSS) Risk Scoring: For any vulnerability with a CVSSv3 base score assigned in the latest version of the NVD, the CVSSv3 base score must be used as the original risk rating. If no CVSSv3 score is available, a CVSSv2 base score is acceptable where available. If no CVSS score is available, the native scanner base risk score can be used.

https://www.fedramp.gov/assets/resources/documents/CSP_Vulnerability_Scanning_Requirements.pdf

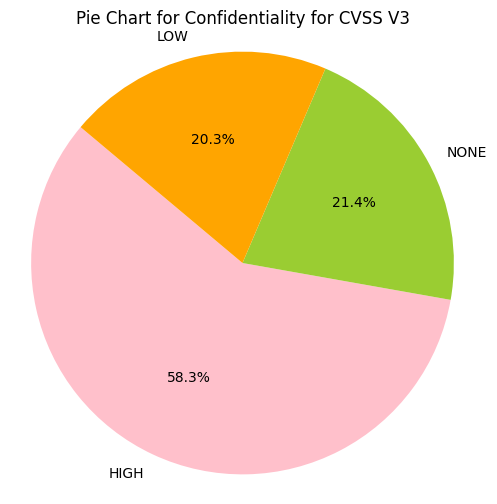

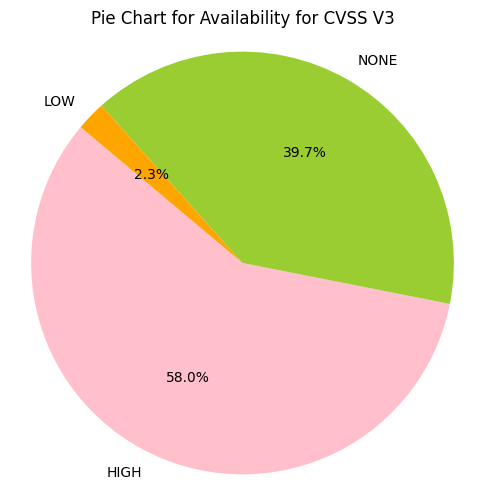

CVSS Confidentiality, Integrity, Availability Impacts¶

Quote

- The Confidentiality and Integrity metrics refer to impacts that affect the data used by the service. For example, web content that has been maliciously altered, or system files that have been stolen.

- The Availability impact metric refers to the operation of the service. That is, the Availability metric speaks to the performance and operation of the service itself – not the availability of the data.”

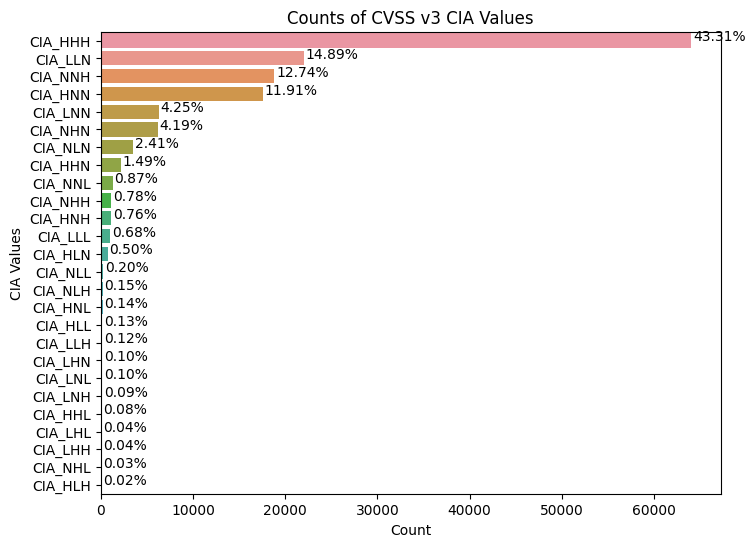

e.g. CIA_HHH means that Confidentiality Impact is HIGH, Integrity Impact is HIGH, Availability Impact is HIGH
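A label such as CIA_HHH can be derived directly from a CVSS v3.x vector string. The sketch below assumes a well-formed vector; the helper name is illustrative.

```python
from itertools import product


def cia_label(vector):
    """Build a label like 'CIA_HHH' from a CVSS v3.x vector string."""
    # Skip the leading "CVSS:3.x" prefix, then split each "metric:value" pair.
    metrics = dict(part.split(":", 1) for part in vector.split("/")[1:])
    return "CIA_" + metrics["C"] + metrics["I"] + metrics["A"]


print(cia_label("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))

# Each of C, I, A can be High, Low, or None, giving 3 x 3 x 3 combinations.
print(len(list(product("HLN", repeat=3))))
```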

Observations

- Greater than 50% of CVE Confidentiality Impact, Integrity Impact, Availability Impact values are HIGH.

- There are 27 (3x3x3) possible combinations of Confidentiality Impact, Integrity Impact, Availability Impact, each with possible values HIGH, LOW, NONE

- ~43% of CVSS v3 CVEs have Confidentiality Impact, Integrity Impact, and Availability Impact all HIGH (CIA_HHH)

- The top 4 combinations account for 83% of CVSS v3 CVEs

- So there isn't much granularity for prioritization based on

- CVSS Base Score or Rating

- CVSS Impact values

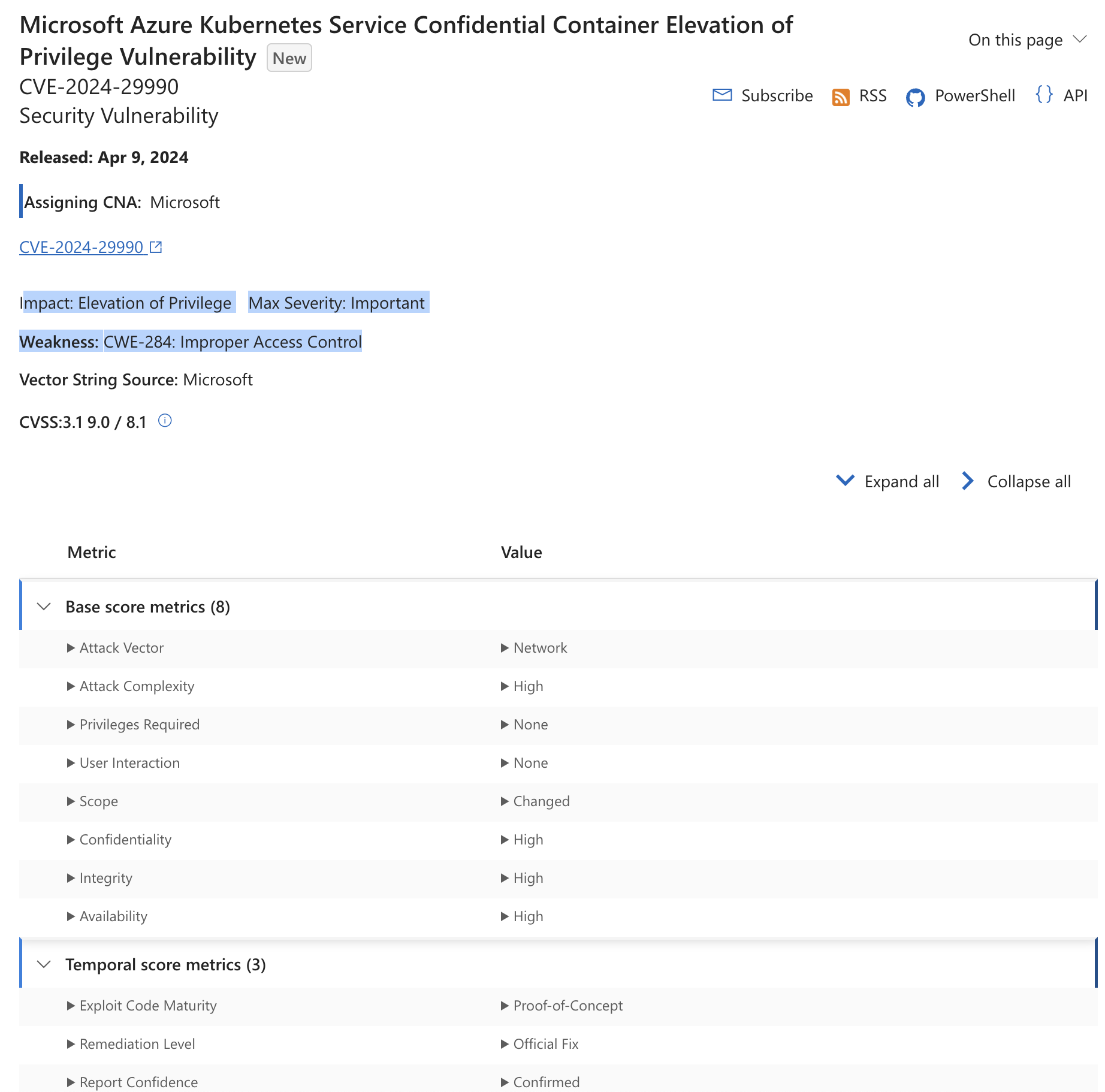

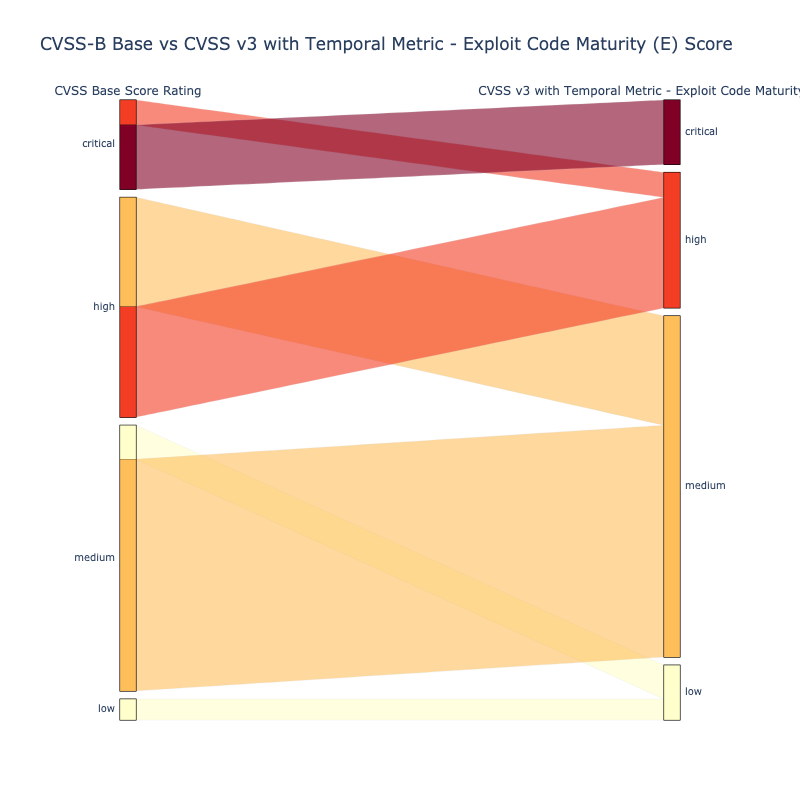

CVSS Exploit Maturity¶

In addition to the CVSS Base Metrics which are commonly used, CVSS supports other Metrics, including Threat Metrics.

Quote

It is the responsibility of the CVSS consumer to populate the values of Exploit Maturity (E) based on information regarding the availability of exploitation code/processes and the state of exploitation techniques. This information will be referred to as “threat intelligence” throughout this document.

Operational Recommendation: Threat intelligence sources that provide Exploit Maturity information for all vulnerabilities should be preferred over those with only partial coverage. Also, it is recommended to use multiple sources of threat intelligence as many are not comprehensive. This information should be updated as frequently as possible and its application to CVSS assessment should be automated.

https://www.first.org/cvss/v4.0/specification-document#Threat-Metrics

CVSS v3.1¶

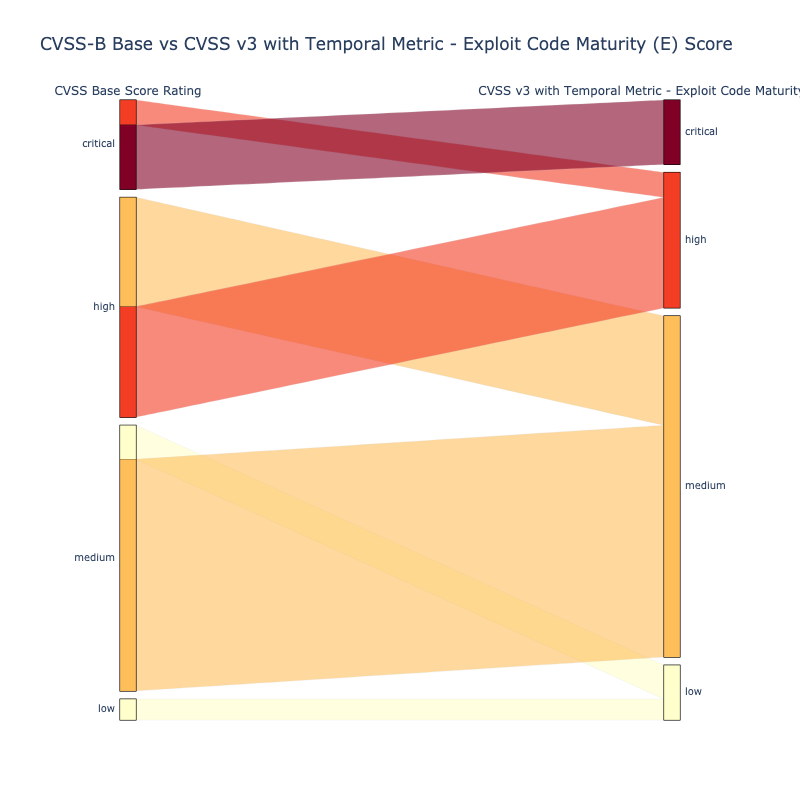

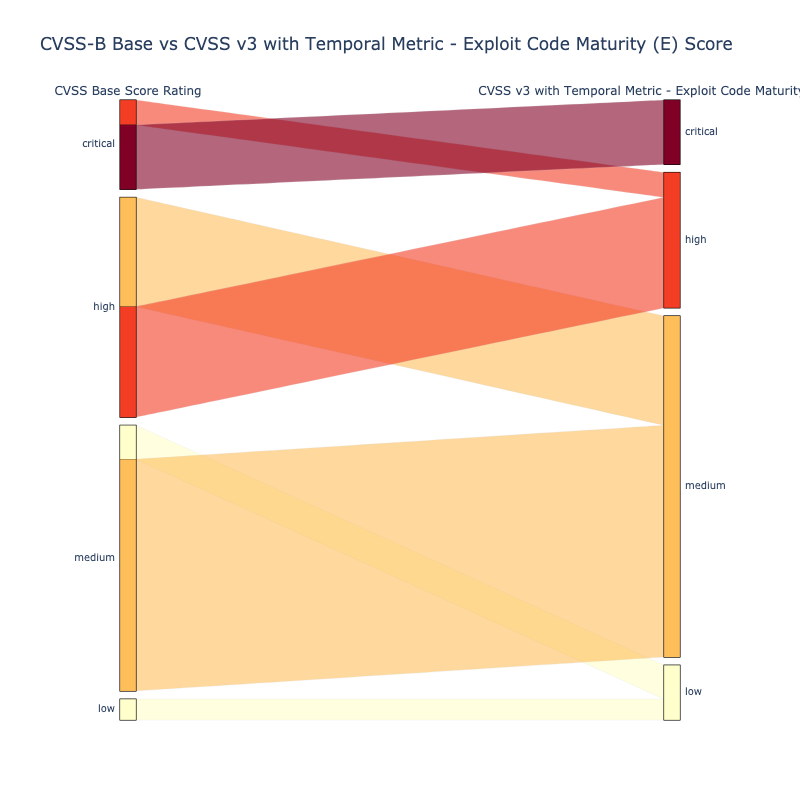

The "Temporal Metrics - Exploit Code Maturity (E)" causes the CVSS v3.1 Score to vary slightly.

- High (H): 9.8

- Functional (F): 9.6

- Proof-Of-Concept (P): 9.3

- Unproven (U): 9.0

- Not Defined (X): 9.8 results in the same score as High (H): 9.8
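The scores listed above can be reproduced from the CVSS v3.1 Temporal equation, Roundup(Base x Exploit Code Maturity x Remediation Level x Report Confidence). The sketch below assumes a Base Score of 9.8 and leaves Remediation Level and Report Confidence at Not Defined (weight 1). The Exploit Code Maturity weights and the Roundup procedure follow the CVSS v3.1 specification; the function names are illustrative.

```python
import math

# Exploit Code Maturity (E) weights from the CVSS v3.1 specification.
E_WEIGHTS = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}


def roundup(value):
    """Round up to one decimal place, as defined in the CVSS v3.1 specification."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def temporal_score(base, exploit_maturity):
    """Temporal score with Remediation Level and Report Confidence Not Defined."""
    return roundup(base * E_WEIGHTS[exploit_maturity])


for e in ("H", "F", "P", "U", "X"):
    print(e, temporal_score(9.8, e))
```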

An example project that enriches NVD CVSS scores to include Temporal & Threat Metrics

"Enriching the NVD CVSS scores to include Temporal & Threat Metrics" is an example project where the CVSS Exploit Code Maturity/Exploitability (E) Temporal Metric is continuously updated.

- Fetches EPSS scores every morning

- Fetches CVSS scores from NVD if there are new EPSS scores.

- Calculates the Exploit Code Maturity/Exploitability (E) Metric when new data is found.

- Provides a resulting CVSS-BT score for each CVE

It uses an EPSS threshold of 36% as the threshold for High for Exploit Code Maturity/Exploitability (E).
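The threshold rule alone can be sketched as below. This is not the project's actual code: only the 36% threshold comes from the description above, and the `fallback` parameter is a placeholder for whatever other evidence is used to choose a value when the threshold is not met.

```python
EPSS_HIGH_THRESHOLD = 0.36  # threshold stated for the example project


def exploit_maturity_from_epss(epss, fallback="X"):
    """Return 'H' (High) when EPSS meets the threshold, else a caller-supplied value."""
    return "H" if epss >= EPSS_HIGH_THRESHOLD else fallback


print(exploit_maturity_from_epss(0.40))
print(exploit_maturity_from_epss(0.10, fallback="U"))
```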

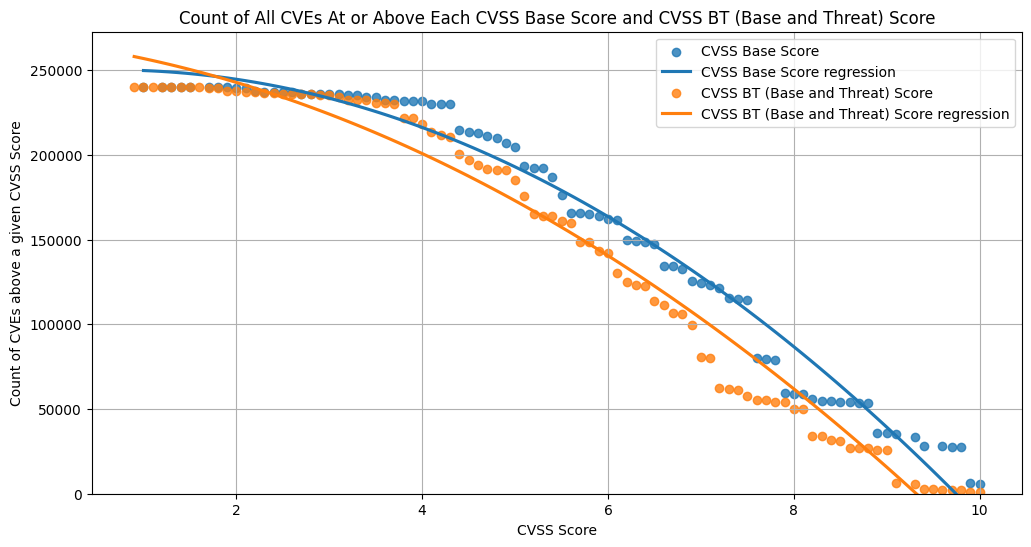

Count of CVEs at or above CVSS Base Score and CVSS Base and Threat Score¶

The data from "Enriching the NVD CVSS scores to include Temporal & Threat Metrics" is used here.

The continuous line is a polynomial regression of order 2.

Observations

- For CVSS Base and Threat

- there are far fewer CVEs above a score of ~9 (relative to CVSS Base)

- ~~35% of CVEs are High or Critical (versus ~~55% for CVSS Base)

CVSS v4.0¶

The Threat Metrics - Exploit Maturity (E) value causes the CVSS v4.0 Score to vary slightly

- Unreported (U): 8.1: High

- POC (P): 8.9: High

- Attacked (A): 9.3: Critical.

- Not Defined (X) results in the same score as Attacked (A)

Quote

The Threat Metric Group adjusts the “reasonable worst case” Base score by using threat intelligence to reduce the CVSS-BTE score, addressing concerns that many CVSS (Base) scores are too high.

The convenience of a single CVSS score comes with the cost of not being able to understand or differentiate between the risk factors from the score, and not being able to prioritize effectively using the score.

Takeaways

- Don't use CVSS Base (CVSS-B) scores alone to assess risk - you will waste a LOT of time/effort/$ if you do!

- CVSS Base scores and ratings don't allow for useful prioritization (because there are too many CVEs at the high end)

- CVSS Confidentiality, Integrity, Availability Impacts don't allow for useful prioritization (because there are too many CVEs with HIGH values)

- CVSS Threat Metrics - Exploit Maturity (CVSS-BT) scores don't allow for useful prioritization (because there are still too many CVEs with HIGH or CRITICAL ratings) - but are still useful as the number of CVEs with HIGH or CRITICAL ratings is reduced.

- The convenience of a single CVSS score comes with the cost of not being able to understand or differentiate between the risk factors from the score, and not being able to prioritize effectively.

CISA KEV¶

Overview

This section gives an overview of CISA KEV.

See also:

- Where CVSS, EPSS, CISA KEV Fit in the Risk Taxonomy.

- EPSS and CISA KEV for an analysis of CISA KEV CVEs.

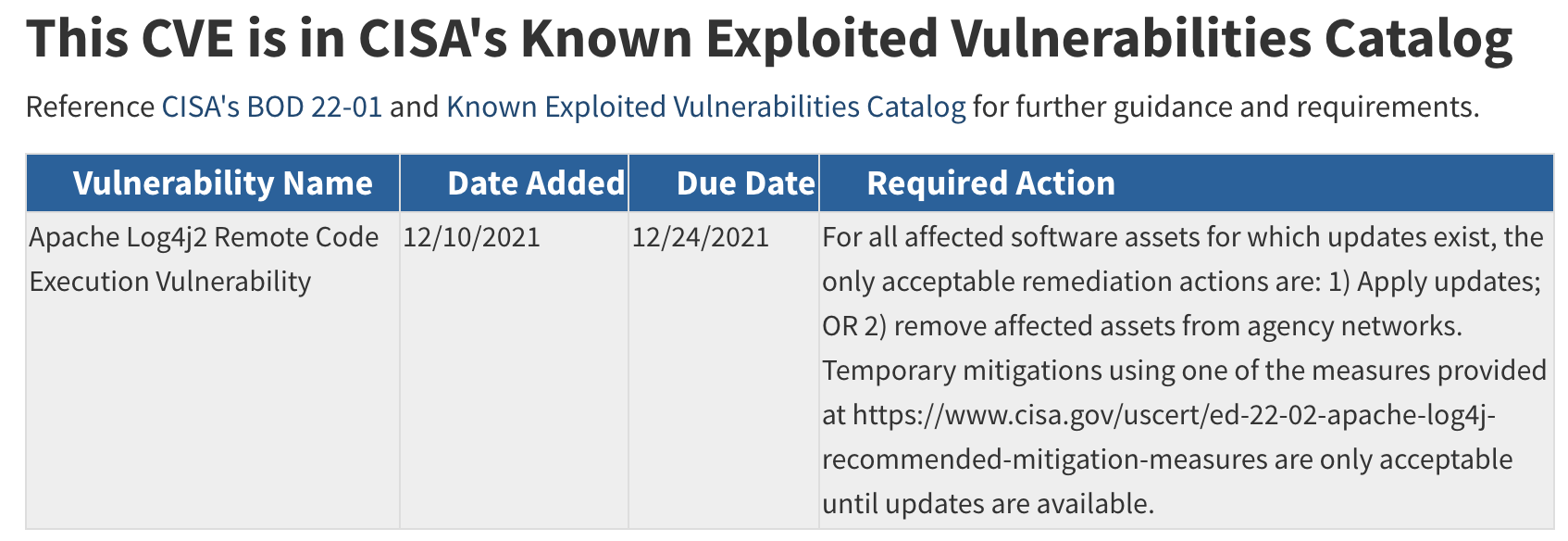

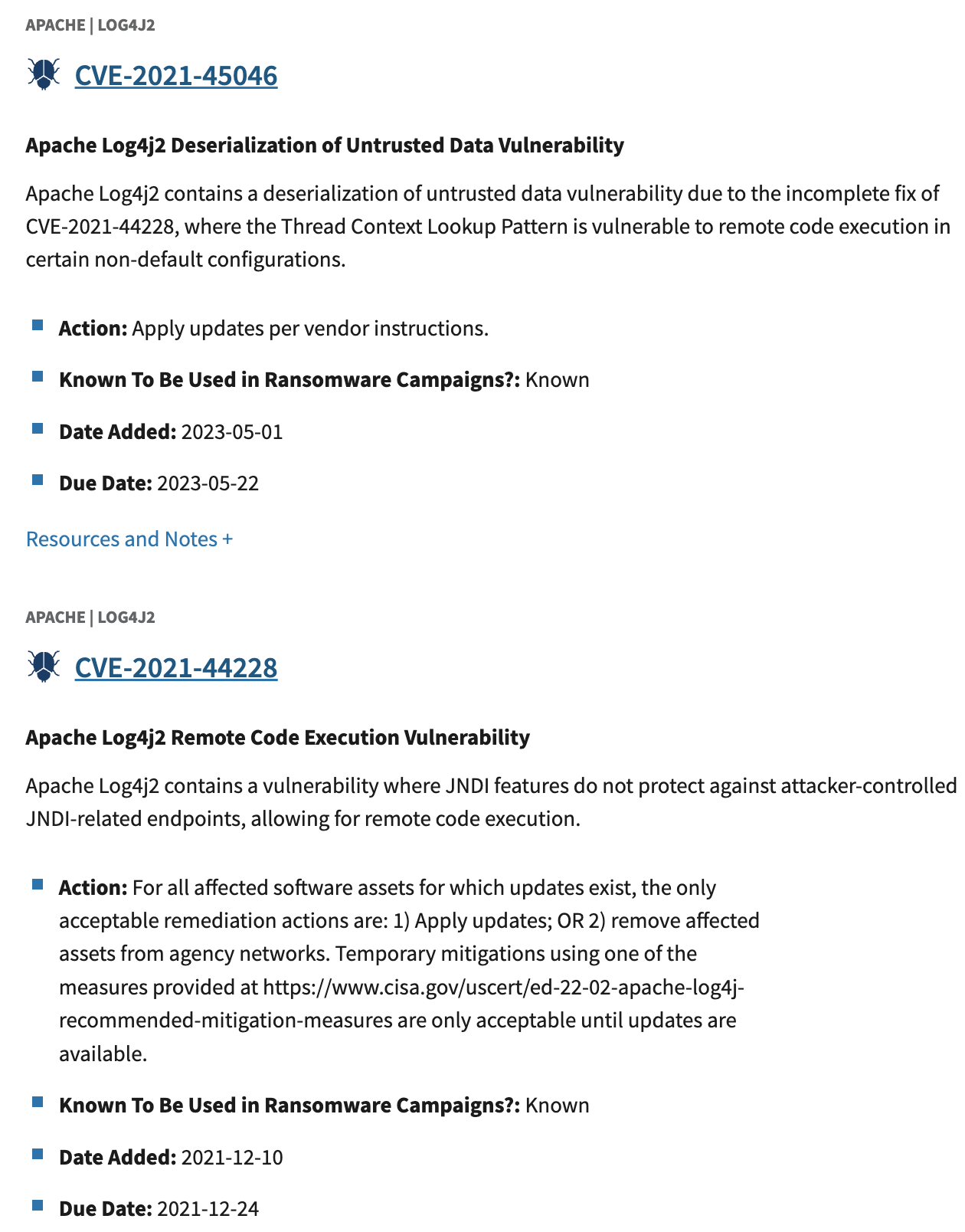

- Log4Shell Example for an example of a CISA KEV entry.

CISA KEV¶

Quote

For the benefit of the cybersecurity community and network defenders—and to help every organization better manage vulnerabilities and keep pace with threat activity — CISA maintains the authoritative source of vulnerabilities that have been exploited in the wild: the Known Exploited Vulnerability (KEV) catalog https://www.cisa.gov/known-exploited-vulnerabilities-catalog.

The KEV catalog sends a clear message to all organizations to prioritize remediation efforts on the subset of vulnerabilities that are causing immediate harm based on adversary activity.

Organizations should use the KEV catalog as an input to their vulnerability management prioritization framework.

Vulnerability management frameworks—such as the Stakeholder-Specific Vulnerability Categorization (SSVC) model—consider a vulnerability's exploitation status.

Criteria for CISA to Add a Vulnerability to the KEV Catalog¶

There are three criteria for CISA to add a vulnerability to the KEV catalog:

- The vulnerability has an assigned Common Vulnerabilities and Exposures (CVE) ID.

- There is reliable evidence that the vulnerability has been actively exploited in the wild.

- There is a clear remediation action for the vulnerability, such as a vendor-provided update.

CISA KEV currently includes ~1.1K CVEs, and defines criteria for inclusion

CISA Known Exploited Vulnerabilities Catalog (CISA KEV) is a source of vulnerabilities that have been exploited in the wild.

- It contains a subset of known exploited CVEs.

There are several criteria, including:

Quote

"A vulnerability under active exploitation is one for which there is reliable evidence that execution of malicious code was performed by an actor on a system without permission of the system owner."

"Events that do not constitute as active exploitation, in relation to the KEV catalog, include:

- Scanning

- Security research of an exploit

- Proof of Concept (PoC)

Applying CISA KEV¶

Quote

“All federal civilian executive branch (FCEB) agencies are required to remediate vulnerabilities in the KEV catalog within prescribed timeframes under Binding Operational Directive (BOD) 22-01, Reducing the Significant Risk of Known Exploited Vulnerabilities. Although not bound by BOD 22-01, every organization, including those in state, local, tribal, and territorial (SLTT) governments and private industry can significantly strengthen their security and resilience posture by prioritizing the remediation of the vulnerabilities listed in the KEV catalogue as well. CISA strongly recommends all stakeholders include a requirement to immediately address KEV catalogue vulnerabilities as part of their vulnerability management plan.
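In practice, applying CISA KEV often starts with checking which of your CVEs appear in the catalog. The sketch below assumes the catalog has been loaded as a dict with a `vulnerabilities` list whose entries carry `cveID` and `dueDate` fields. Treat those field names as an assumption to verify against the catalog you download. The sample entries are made up.

```python
def kev_due_dates(catalog, our_cves):
    """Return {cve_id: due_date} for our CVEs that appear in the KEV catalog."""
    return {
        v["cveID"]: v["dueDate"]
        for v in catalog.get("vulnerabilities", [])
        if v["cveID"] in our_cves
    }


# Made-up catalog excerpt and inventory, for illustration only.
catalog = {
    "vulnerabilities": [
        {"cveID": "CVE-0000-0001", "dueDate": "2099-01-01"},
        {"cveID": "CVE-0000-0002", "dueDate": "2099-02-01"},
    ]
}
our_cves = {"CVE-0000-0002", "CVE-0000-0003"}

print(kev_due_dates(catalog, our_cves))
```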

Takeaways

- CISA maintains a catalog of vulnerabilities that have been exploited in the wild.

- CISA KEV contains a subset of known exploited CVEs.

- Organizations should use this KEV catalog as an input to their vulnerability management prioritization framework to prioritize by Risk.

Exploit Prediction Scoring System (EPSS) ↵

Introduction to EPSS¶

Overview

In this section we introduce EPSS

- what it is and what it gives us

- why we should care

- how to use it together with evidence of known exploitation

- a plot of EPSS scores for all CVEs

What is EPSS?¶

Exploit Prediction Scoring System (EPSS) is a data-driven effort for estimating the likelihood (probability) that a software vulnerability will be exploited in the wild. The Special Interest Group (SIG) consists of over 400 researchers, practitioners, government officials, and users who volunteer their time to improve this industry standard.

EPSS is managed under FIRST (https://www.first.org/epss), the same international non-profit organization that manages the Common Vulnerability Scoring System (CVSS), https://www.first.org/cvss/.

- EPSS produces probability scores for all known published CVEs based on current exploitation ability, and updates these scores daily

- The scores are free for anyone to use

- EPSS should be used:

- as a measure of the threat aspect of risk

- when there is no other evidence of current exploitation

- together with other measures of risk

- EPSS is best suited to vulnerabilities that are remotely exploitable in enterprise environments.
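Since the scores are free to use, a common first step is to pull them programmatically. The sketch below assumes the public FIRST API endpoint `https://api.first.org/data/v1/epss` with a `cve` query parameter and a JSON response whose `data` list carries `cve` and `epss` string fields. Check the EPSS site for the current API details. The sample payload is made up, and no network call is made here.

```python
import json
from urllib.parse import urlencode

EPSS_API = "https://api.first.org/data/v1/epss"  # assumed endpoint


def epss_url(cve_ids):
    """Build a query URL for one or more CVE IDs."""
    return EPSS_API + "?" + urlencode({"cve": ",".join(cve_ids)})


def parse_epss(payload):
    """Extract {cve_id: probability} from a JSON response body."""
    body = json.loads(payload)
    return {row["cve"]: float(row["epss"]) for row in body.get("data", [])}


# Made-up response body, for illustration only.
sample = '{"data": [{"cve": "CVE-0000-0001", "epss": "0.12345", "percentile": "0.9"}]}'

print(epss_url(["CVE-0000-0001"]))
print(parse_epss(sample))
```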

Tip

This guide does not duplicate the EPSS information and FAQ available at https://www.first.org/epss.

Why Should I Care?¶

Prioritizing by exploitation reduces cost and risk

Prioritizing vulnerabilities that are being exploited in the wild, or are more likely to be exploited, reduces the

- cost of vulnerability management

- risk by reducing the time adversaries have access to vulnerable systems they are trying to exploit

Quote

- "many vulnerabilities classified as “critical” are highly complex and have never been seen exploited in the wild - in fact, less than 4% of the total number of CVEs have been publicly exploited" (see BOD 22-01: Reducing the Significant Risk of Known Exploited Vulnerabilities).

Cybersecurity and Infrastructure Security Agency emphasizes prioritizing remediation of vulnerabilities that are known exploited in the wild:

- "As a top priority, focus your efforts on patching the vulnerabilities that are being exploited in the wild or have competent compensating control(s) that can. This is an effective approach to risk mitigation and prevention, yet very few organizations do this. This prioritization reduces the number of vulnerabilities to deal with. This means you can put more effort into dealing with a smaller number of vulnerabilities for the greater benefit of your organization's security posture."

How Many Vulnerabilities are Being Exploited?¶

Only about 5% or fewer of all CVEs have been exploited

- “Less than 3% of vulnerabilities have weaponized exploits or evidence of exploitation in the wild, two attributes posing the highest risk,” Qualys

- “Only 3 percent of critical vulnerabilities are worth prioritizing,” https://www.datadoghq.com/state-of-application-security/

- “Less than 4% of the total number of CVEs have been publicly exploited”, CISA KEV

- “We observe exploits in the wild for 5.5% of vulnerabilities in our dataset,” Jay Jacobs, Sasha Romanosky, Idris Adjerid, Wade Baker

In contrast, for CVSS (Base Scores):

- ~15% of CVEs are ranked Critical (9+)

- ~65% of CVEs are ranked Critical or High (7+)

- ~96% of CVEs are ranked Critical or High or Medium (4+)

What Vulnerabilities are Being Exploited?¶

There isn't an authoritative common public list of ALL CVEs that are Known Actively Exploited in the wild

There is considerable variation in:

- the total number of vulnerabilities being exploited, as reported by different sources (per above)

- the criteria for "Known Actively Exploited in the wild".

CISA KEV includes a subset of Vulnerabilities that are Known-Exploited in the wild.

CISA KEV currently includes ~1.1K CVEs, and defines criteria for inclusion

CISA Known Exploited Vulnerabilities Catalog (CISA KEV) is a source of vulnerabilities that have been exploited in the wild

There are several criteria, including:

Quote

"A vulnerability under active exploitation is one for which there is reliable evidence that execution of malicious code was performed by an actor on a system without permission of the system owner."

"Events that do not constitute as active exploitation, in relation to the KEV catalog, include:

- Scanning

- Security research of an exploit

- Proof of Concept (PoC)

EPSS provides a probability of exploitation for all published CVEs (in the next 30 days)

For EPSS, the criteria for exploit evidence (used to feed the model) is a detection of traffic matching an intrusion detection/prevention systems (IDS/IPS), or honeypot, signature (not a successful exploitation).

Scanning would likely trigger a detection. In contrast, for CISA KEV, scanning does not constitute active exploitation.

Various CTI sources list other Known-Exploited CVEs

There are numerous Cyber Threat Intelligence sources e.g. vendors, publicly available data, that provide lists of CVEs that are Known-Exploited in addition to those listed on CISA KEV.

What Can EPSS Do For Me?¶

- EPSS allows network defenders to better (de)prioritize remediation of published CVEs for their organization based on likelihood of exploitation, while providing the information needed to inform the prioritization trade-offs.

- EPSS is useful for product security (PSIRT) teams when prioritizing vulnerabilities found within their own products when there is a known CVE.

- EPSS data and trends are useful for researchers looking to better understand and explain vulnerability exploitation specifically, and malicious cyber activity more generally.

- EPSS can be used by regulators and policy makers when defining patch management requirements.

- (More detailed User Scenarios for different Personas (written by real users in that role) are provided in Requirements.)

What Does EPSS Provide?¶

- EPSS Score

- Probability scores for all known CVEs. Specifically, the probability that each vulnerability will be exploited in the next 30 days.

- Percentile

- The percentile scores represent a rank ordered list of all CVEs from most likely to be exploited, to least likely to be exploited

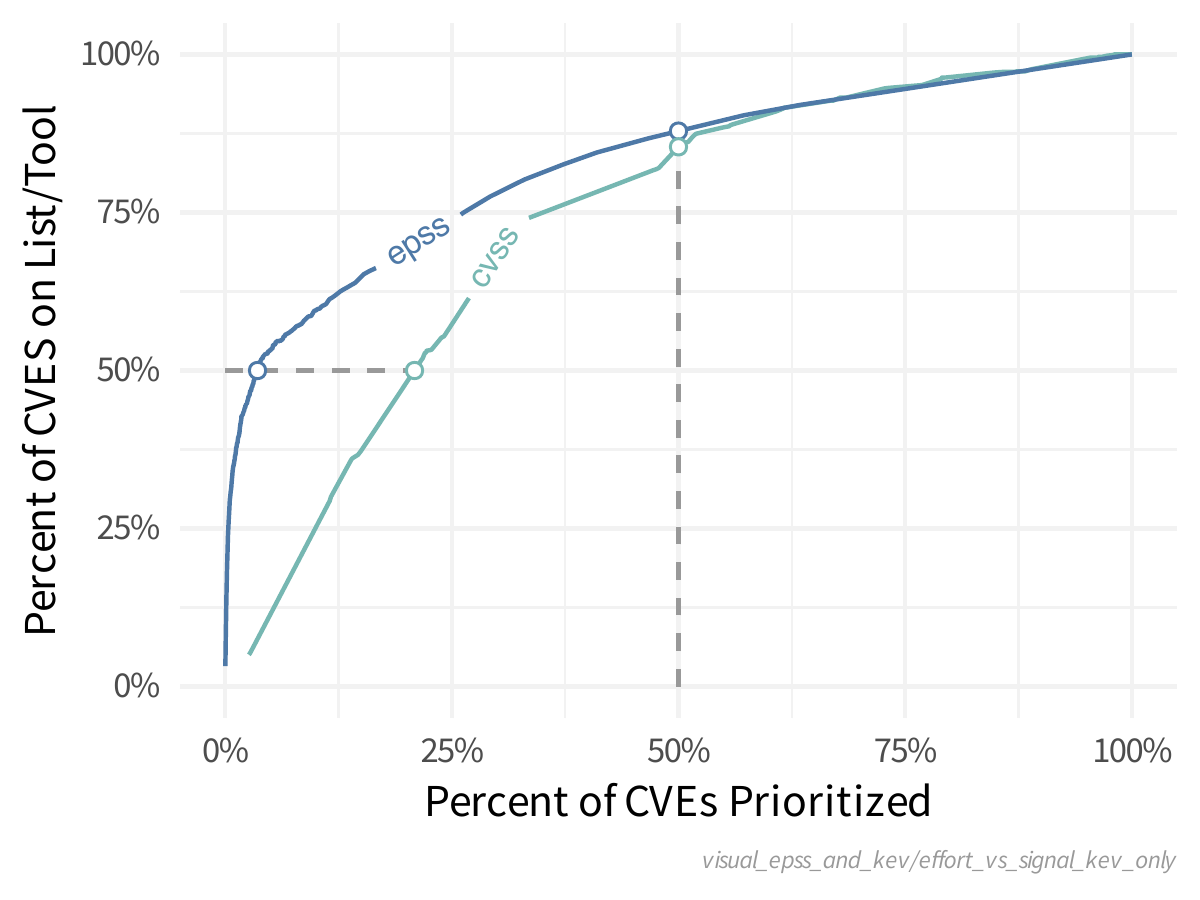

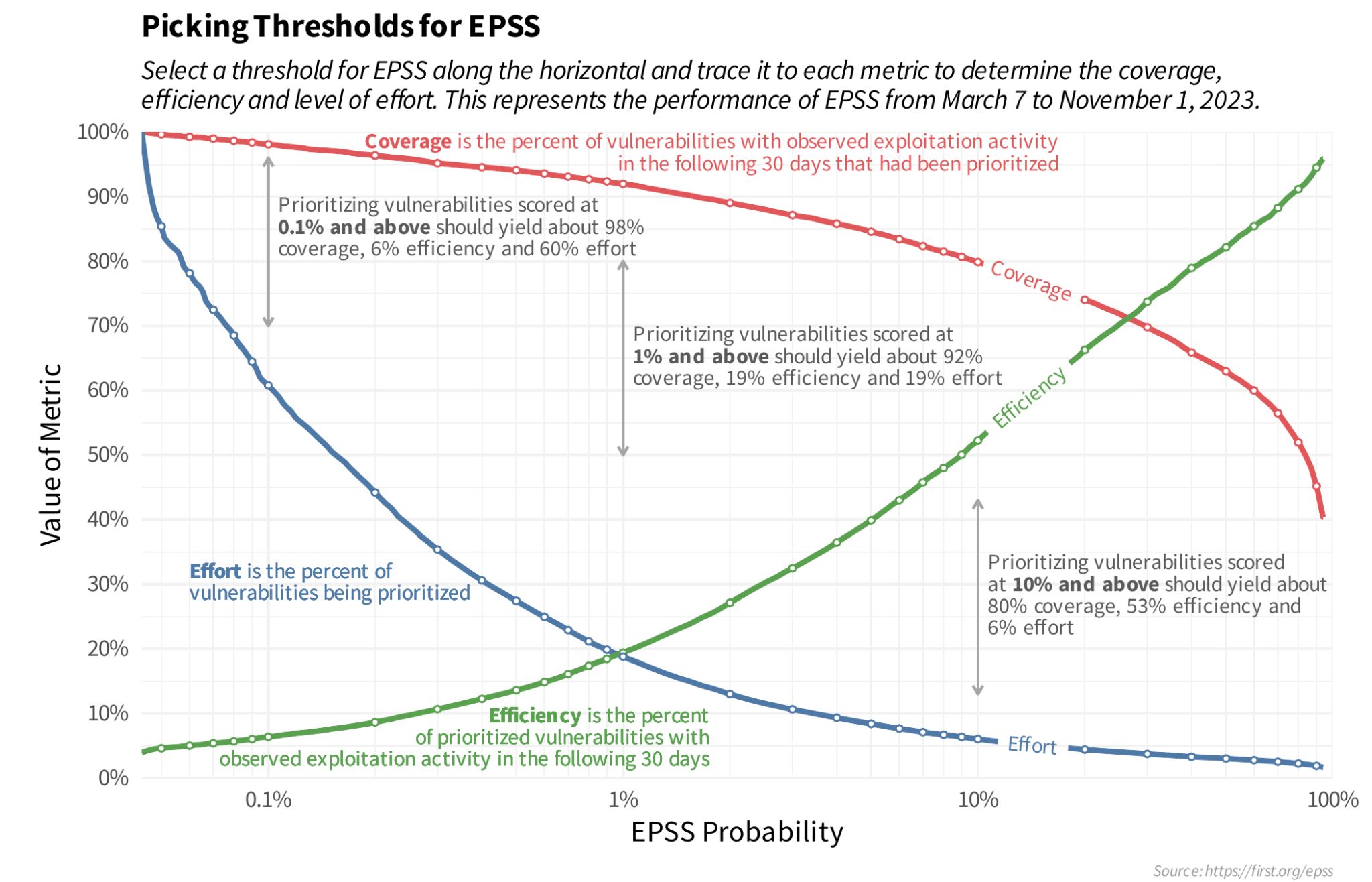

- Coverage, Efficiency, Effort figure showing the tradeoffs between alternative remediation strategies.

- Specifically, this figure illustrates the tradeoffs between three key parameters that you may use when determining your optimal remediation strategy: coverage, efficiency, and level of effort
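The three parameters can be made concrete with a small calculation. The sketch below is illustrative only: it assumes coverage is the share of exploited CVEs that a strategy remediates, efficiency is the share of remediated CVEs that were exploited, and effort is the share of all CVEs remediated. The function name, the CVE IDs, and the scores are hypothetical and are not taken from EPSS data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StrategyMetrics:
    coverage: float    # share of exploited CVEs that the strategy remediates
    efficiency: float  # share of remediated CVEs that were actually exploited
    effort: float      # share of all CVEs that the strategy remediates


def evaluate_threshold(epss_scores, exploited, threshold):
    """Evaluate the strategy "remediate every CVE with EPSS >= threshold".

    epss_scores: dict mapping CVE ID -> EPSS probability (0.0 to 1.0)
    exploited:   set of CVE IDs with observed exploitation (assumed input)
    """
    selected = {cve for cve, score in epss_scores.items() if score >= threshold}
    true_positives = len(selected & exploited)
    # Only count exploited CVEs that are part of the population being scored.
    exploited_in_scope = len(exploited.intersection(epss_scores))

    coverage = true_positives / exploited_in_scope if exploited_in_scope else 0.0
    efficiency = true_positives / len(selected) if selected else 0.0
    effort = len(selected) / len(epss_scores) if epss_scores else 0.0
    return StrategyMetrics(coverage, efficiency, effort)


# Made-up placeholder data, for illustration only.
example_scores = {
    "CVE-0000-0001": 0.92,
    "CVE-0000-0002": 0.40,
    "CVE-0000-0003": 0.03,
    "CVE-0000-0004": 0.01,
}
example_exploited = {"CVE-0000-0001", "CVE-0000-0003"}

metrics = evaluate_threshold(example_scores, example_exploited, threshold=0.1)
print(metrics)
```

Lowering the threshold generally raises coverage and effort while lowering efficiency, which is the tradeoff the figure describes.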

Using EPSS with Known Exploitation¶

Prioritize First (Pink) accounts for ~5-10% of CVEs, Prioritize Next (Orange) for ~90-95%.

Active Exploitation

If there is evidence that a vulnerability is being exploited, then that information should supersede anything EPSS has to say, because again, EPSS is pre-threat intel.

Using EPSS when there is no Known Evidence of Active Exploitation

- There isn't an authoritative common public list of ALL CVEs that are Known Actively Exploited in the wild

- So as a user, it will be common to see CVEs with a High EPSS score, where you have no other intel on their exploitation.

Quote

If there is an absence of exploitation evidence, then EPSS can be used to estimate the probability it will be exploited.
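The rule described here (known exploitation evidence supersedes EPSS, and EPSS is used where such evidence is absent) could be sketched as follows. This is a minimal illustration, not a recommended policy: the function name, the bucket names, and the 0.1 threshold are assumptions, and the CVE IDs and scores are made up.

```python
def prioritize(cve_ids, known_exploited, epss_scores, epss_threshold=0.1):
    """Split CVEs into buckets: exploitation evidence first, then EPSS.

    known_exploited: set of CVE IDs with evidence of exploitation (e.g. from CISA KEV)
    epss_scores:     dict mapping CVE ID -> EPSS probability
    epss_threshold:  illustrative cut-off; choose one that fits your own risk appetite
    """
    buckets = {
        "known_exploited": [],
        "likely_exploited": [],
        "prioritize_next": [],
        "no_epss_score": [],
    }
    for cve in cve_ids:
        if cve in known_exploited:
            # Exploitation evidence supersedes whatever EPSS says.
            buckets["known_exploited"].append(cve)
        elif cve not in epss_scores:
            # Do not silently treat a missing score as a low score.
            buckets["no_epss_score"].append(cve)
        elif epss_scores[cve] >= epss_threshold:
            buckets["likely_exploited"].append(cve)
        else:
            buckets["prioritize_next"].append(cve)

    # Within the EPSS-driven bucket, handle the highest probabilities first.
    buckets["likely_exploited"].sort(key=lambda c: epss_scores[c], reverse=True)
    return buckets


# Made-up placeholder data, for illustration only.
result = prioritize(
    cve_ids=["CVE-0000-0001", "CVE-0000-0002", "CVE-0000-0003", "CVE-0000-0004"],
    known_exploited={"CVE-0000-0003"},
    epss_scores={"CVE-0000-0001": 0.85, "CVE-0000-0002": 0.02, "CVE-0000-0003": 0.01},
)
print(result)
```

Note that the known exploited CVE in this example has a low EPSS score and is still placed first, and the CVE without a score is kept in its own bucket for manual review.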

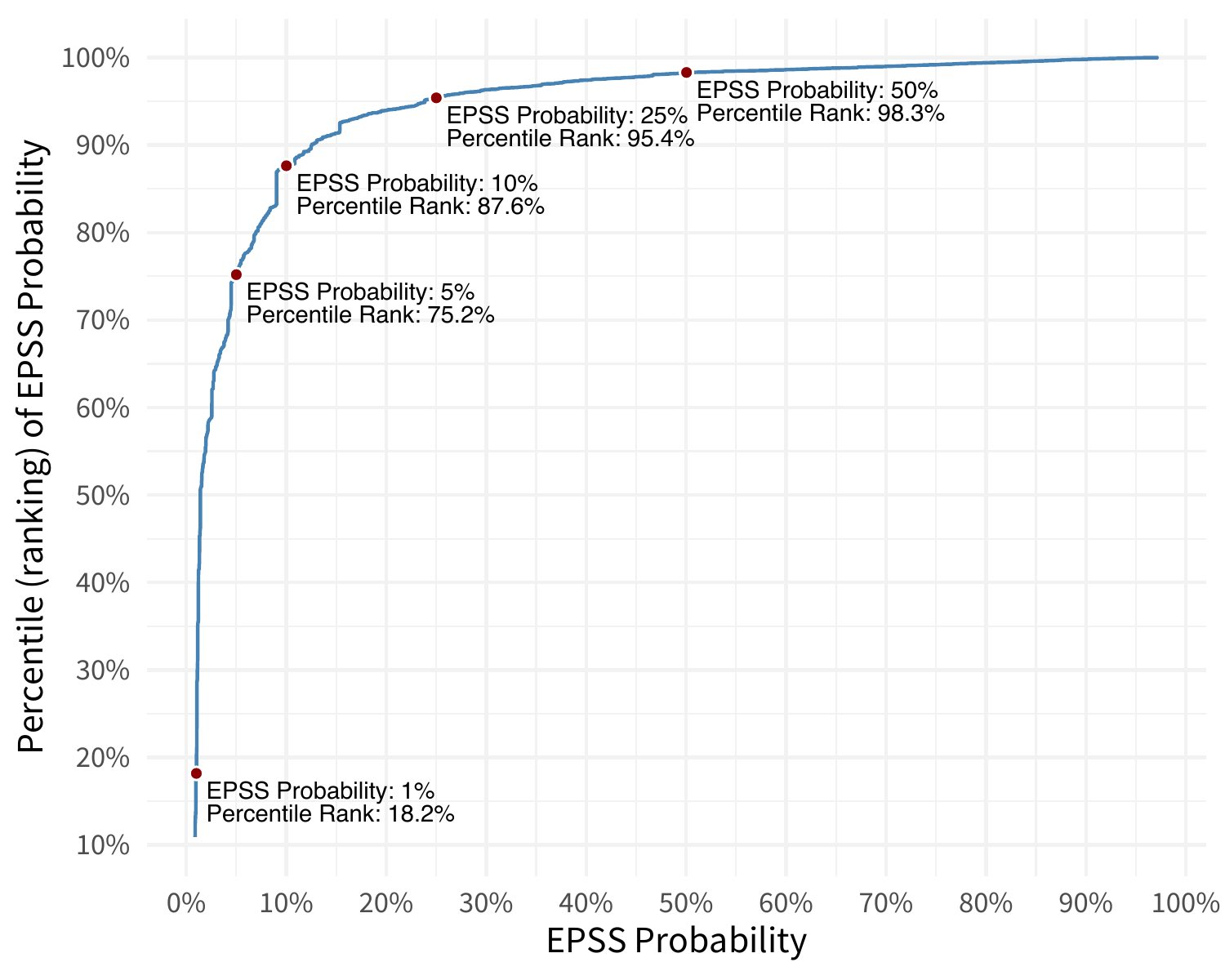

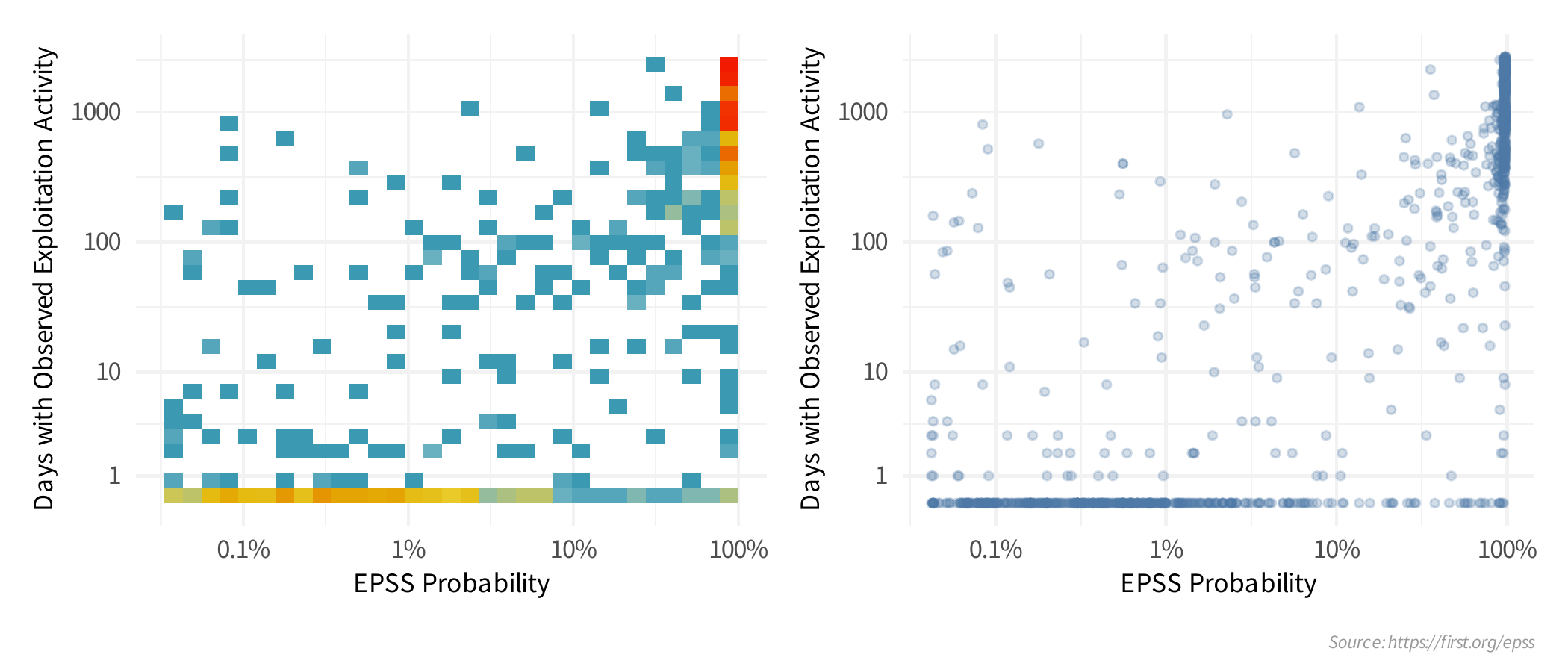

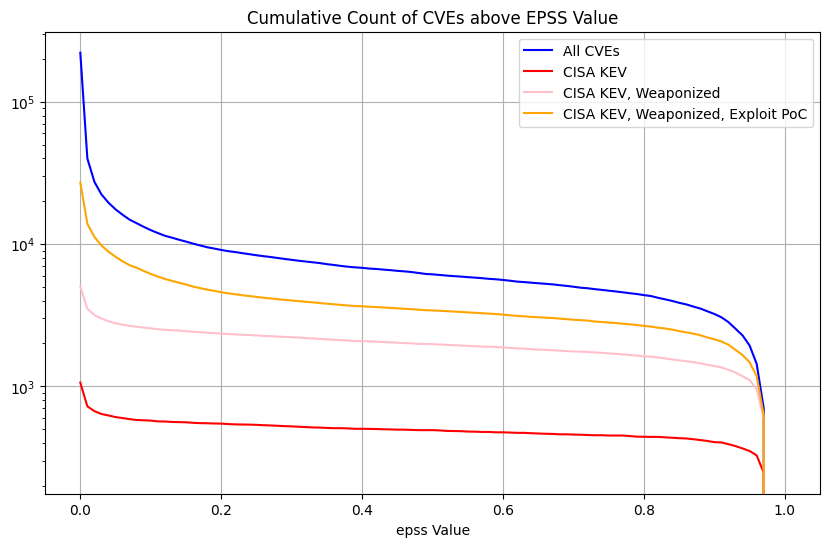

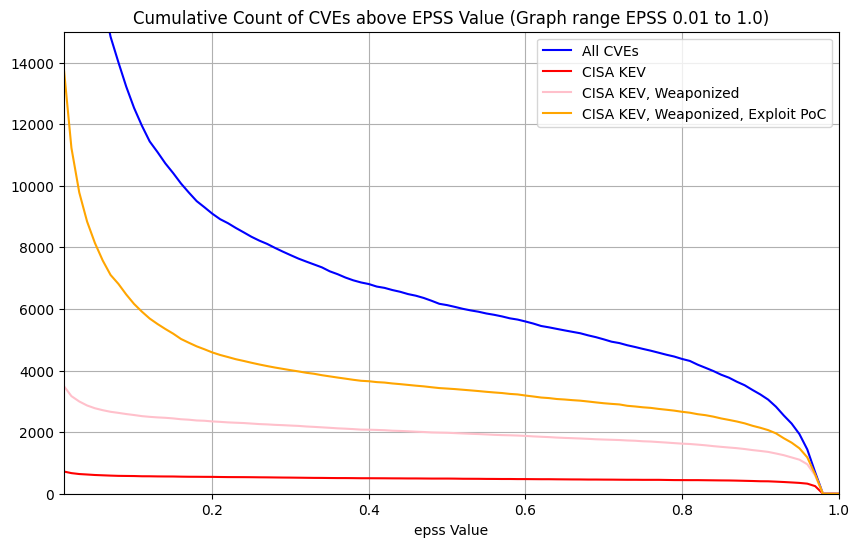

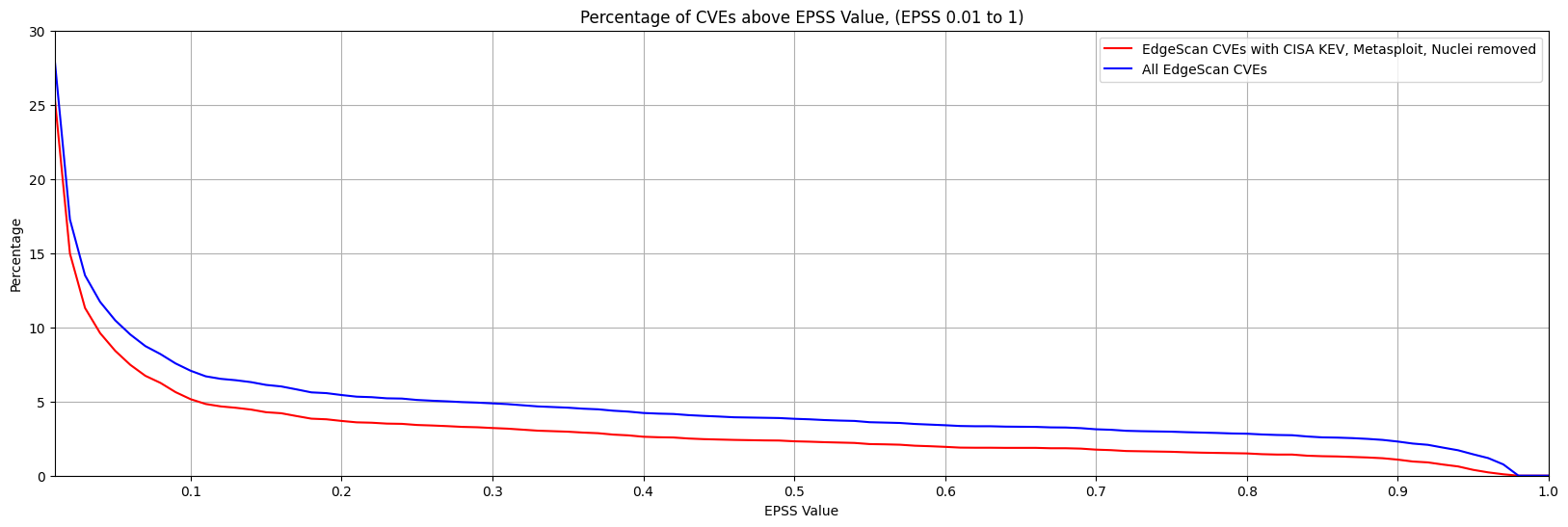

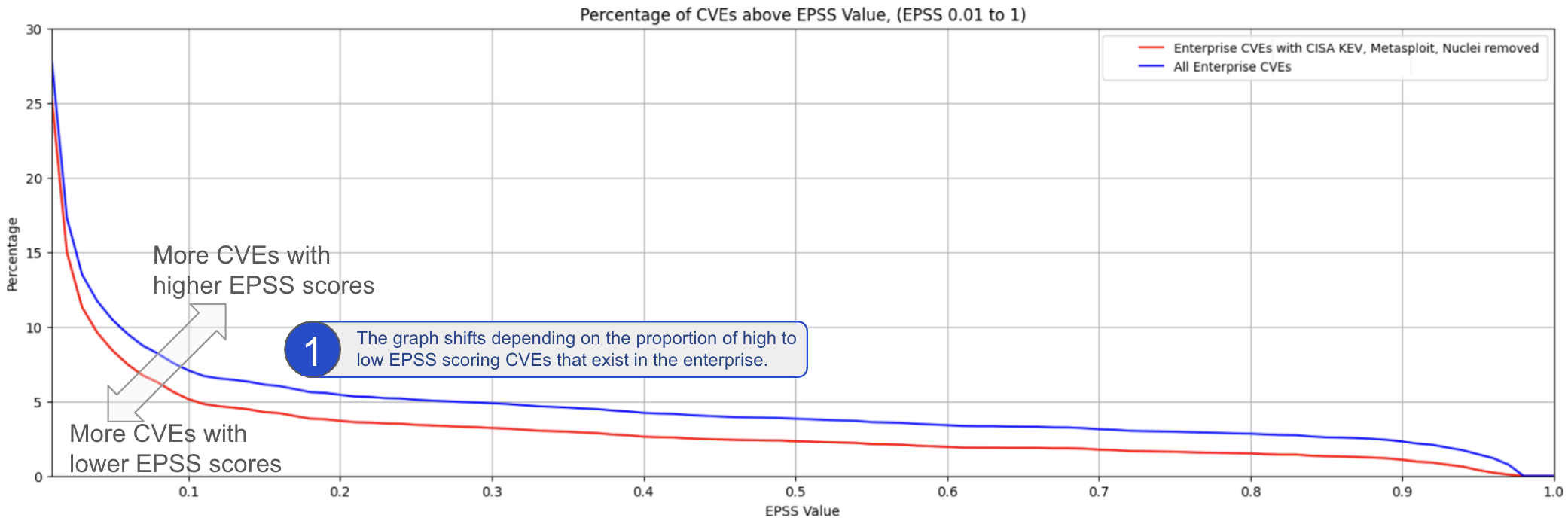

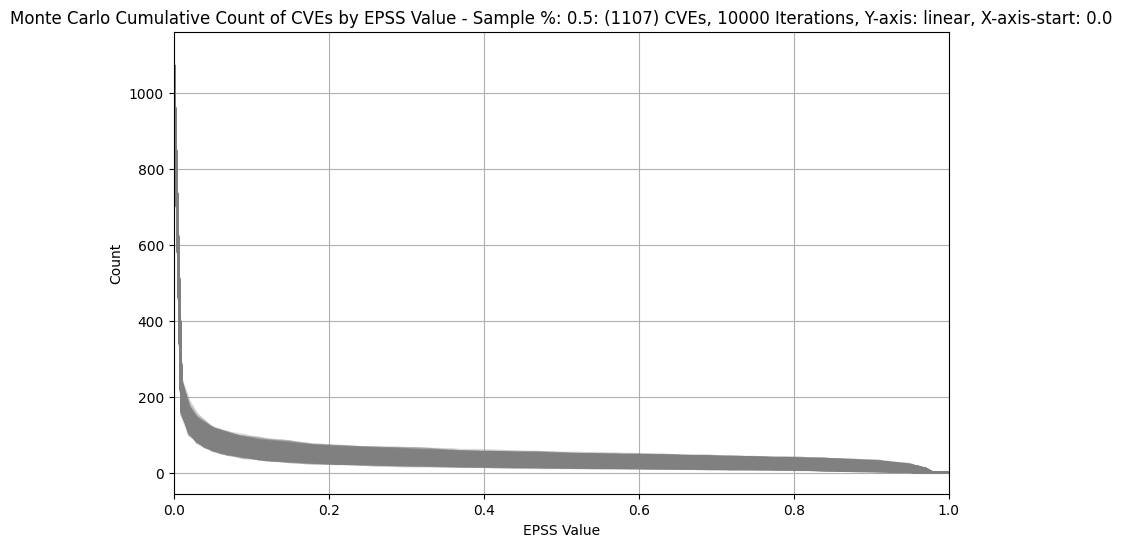

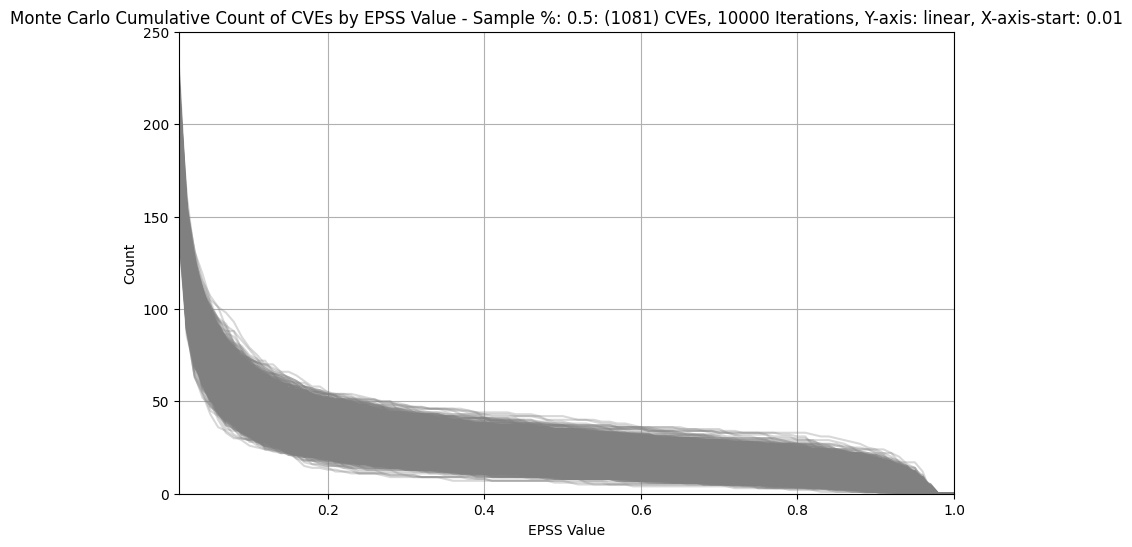

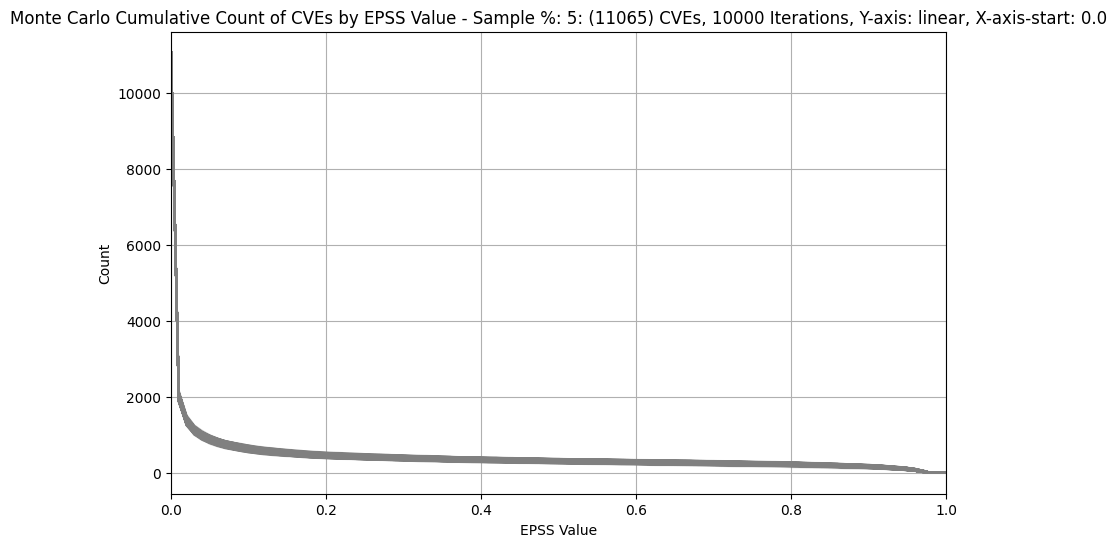

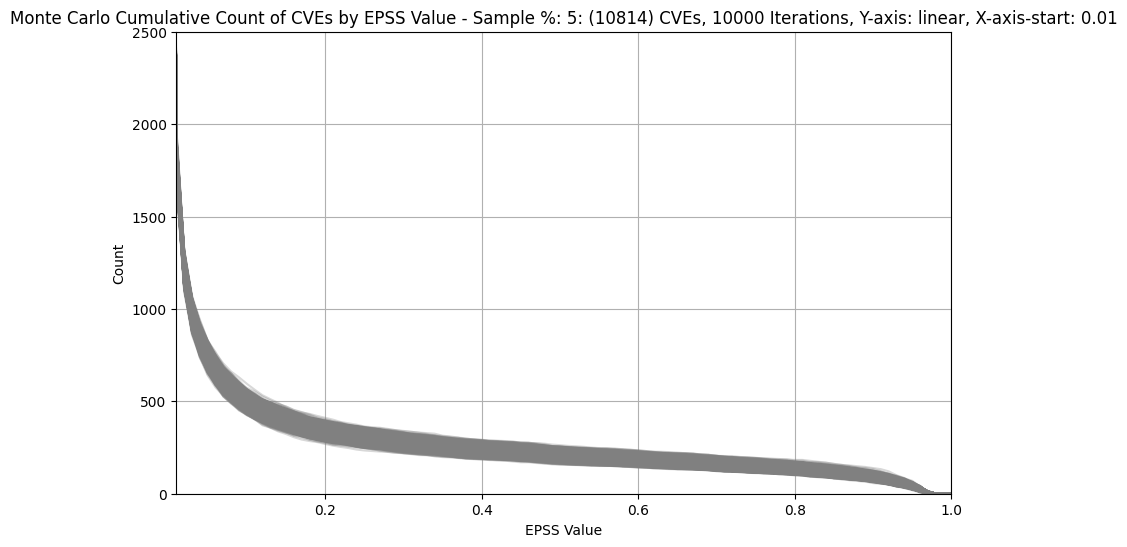

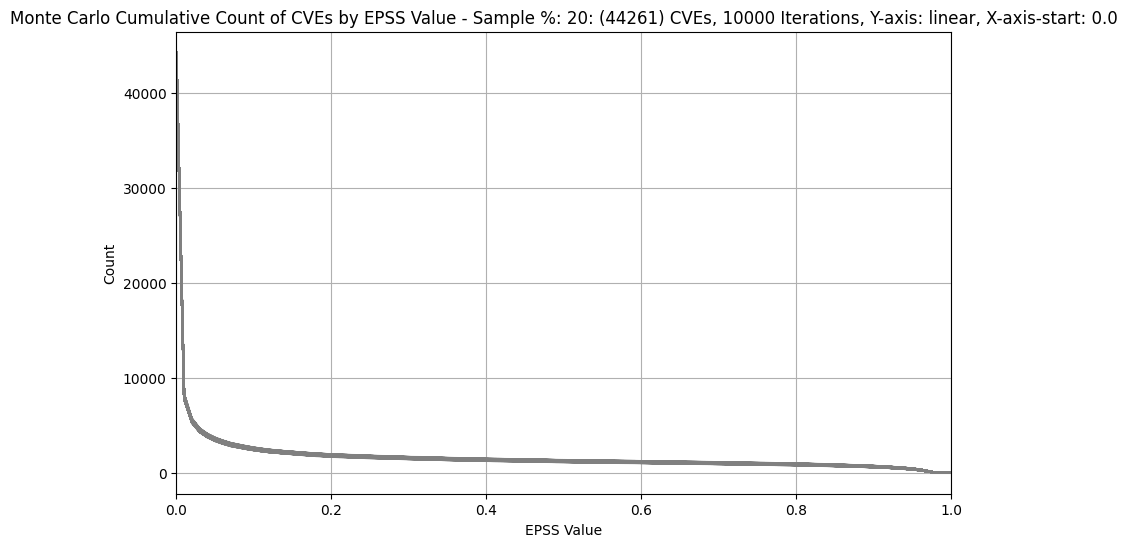

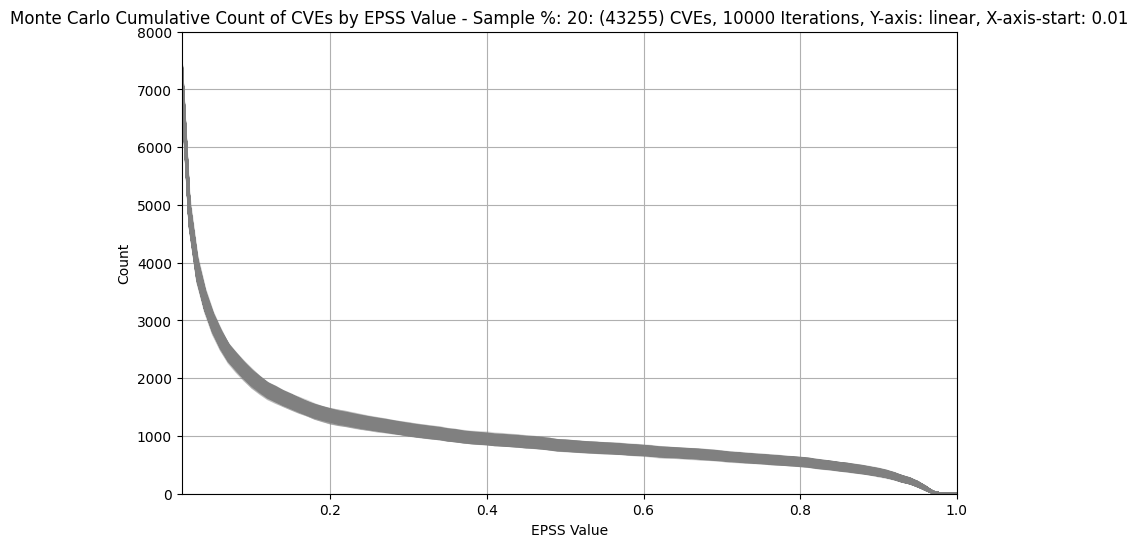

Count of CVEs at or above EPSS Score¶

In stark contrast to CVSS Base Scores and Ratings, which are top heavy (most CVEs are at the upper end of Severity), EPSS is bottom heavy (the vast majority of CVEs have a low EPSS score).

Image from https://www.first.org/epss/articles/prob_percentile_bins
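A count of CVEs at or above a given EPSS score can be computed directly from a list of scores. The sketch below uses a handful of made-up scores and arbitrary thresholds purely to show the calculation; the function name is hypothetical.

```python
def count_at_or_above(scores, thresholds):
    """Return {threshold: number of scores >= threshold}."""
    return {t: sum(1 for s in scores if s >= t) for t in thresholds}


# Made-up scores, skewed low to mimic a bottom-heavy distribution.
sample_scores = [0.001, 0.002, 0.01, 0.05, 0.2, 0.95]

counts = count_at_or_above(sample_scores, thresholds=(0.01, 0.1, 0.5))
for threshold, count in counts.items():
    print(f"EPSS >= {threshold}: {count} of {len(sample_scores)} CVEs")
```

Running the same count against your own CVE list shows how quickly the workload shrinks as the threshold rises.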

Takeaways

- Prioritizing vulnerabilities that are being exploited in the wild, or are more likely to be exploited, reduces the

- cost of vulnerability management

- risk by focusing on the vulnerabilities that need to be fixed first

- EPSS provides

- a score for all published CVEs on how likely they are to be exploited (in the next 30 days)

- information on tradeoffs on coverage, efficiency, and level of effort

Quote

If there is evidence that a vulnerability is being exploited, then that information should supersede anything EPSS has to say, because again, EPSS is pre-threat intel. If there is an absence of exploitation evidence, then EPSS can be used to estimate the probability it will be exploited.

Applying EPSS to your environment¶

Overview

In this section we look at some of the considerations in interpreting EPSS scores for CVEs in YOUR environment:

- Type of Environment where EPSS works best

- Types of CVEs that EPSS works best for

EPSS is only available for published CVEs

EPSS produces probability scores for all known published CVEs based on current exploitation ability, and updates these scores daily.

This has the following repercussions:

- Timeliness: It will not be available for vulnerabilities exploited before they have an associated CVE (a process that can sometimes take weeks).

- Coverage: Vulnerabilities without a CVE ID, or which are listed only on Other Vulnerability Data Sources, will not receive an EPSS score.

EPSS for YOUR Environment¶

The EPSS Model ground truth and validation is based on exploitation observations from network- or host-layer intrusion detection/prevention systems (IDS/IPS), and honeypots, deployed globally at scale by the EPSS commercial data partners.

The EPSS score is the likelihood the observations will contain a specific CVE in the next 30 days in this "EPSS Environment".

- The EPSS score for a CVE does not depend on the count of observations, i.e. one observation or many result in the same score.

If YOUR environment is similar to the EPSS model environment, then a similar probability of exploitation activity should apply to YOUR environment, and the EPSS scores should therefore be applicable to the CVEs in your environment.

Quote

Organizations should measure and validate the usefulness of EPSS in their environments. No organization should assume that its environment matches the data used to train EPSS. However, many organizations’ environments should be a near-enough match.

Probably Don’t Rely on EPSS Yet, Jonathan Spring, June 6, 2022

Enterprise Environment¶

EPSS is best suited to enterprise environments

Quote

Similarly, these detection systems will be typically installed on public-facing perimeter internet devices, and therefore less suited to detecting computer attacks against internet of things (IoT) devices, automotive networks, ICS, SCADA, operational technology (OT), medical devices, etc

Network Attacks¶

EPSS is best suited to network based attacks

Vulnerabilities have higher Exploitability (CVSS Base Score Exploitability metrics group) when they:

- are remotely exploitable (i.e. Network Attack Vector in CVSS Base Score terms), rather than requiring physical or local proximity.

- can be exercised automatically over the network without requiring user interaction (e.g. clicking a button or a link).

EPSS is best suited to these types of vulnerabilities.

Quote

Moreover, the nature of the detection devices generating the events will be biased toward detecting network based attacks, as opposed to attacks from other attack vectors such as host-based attacks or methods requiring physical proximity

Example

At the time of writing this guide, CISA warned of active exploitation of an Apple iOS and macOS vulnerability (CVE-2022-48618).

This CVE has a consistently low EPSS score near zero (https://api.first.org/data/v1/epss?cve=CVE-2022-48618&scope=time-series).

This is to be expected because the CVE Attack Vector is "Local", not Network, per (https://nvd.nist.gov/vuln/detail/CVE-2022-48618)
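A score history like the one linked above can be retrieved and inspected with a few lines of code. The sketch below builds the same query URL and extracts (date, score) pairs from a decoded response. The response layout it assumes (a top-level `data` list whose entries carry `epss`, `date`, and a `time-series` list) should be checked against the EPSS API documentation, and the sample payload contains made-up values rather than real scores.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

EPSS_API = "https://api.first.org/data/v1/epss"


def epss_url(cve_id, time_series=False):
    """Build the query URL for one CVE, optionally requesting its score history."""
    params = {"cve": cve_id}
    if time_series:
        params["scope"] = "time-series"
    return f"{EPSS_API}?{urlencode(params)}"


def score_history(payload):
    """Return (date, epss) pairs, oldest first, from a decoded API response.

    Assumes each entry in payload["data"] holds the current score plus an
    optional "time-series" list of earlier scores.
    """
    history = []
    for entry in payload.get("data", []):
        for point in [entry, *entry.get("time-series", [])]:
            if "epss" in point and "date" in point:
                history.append((point["date"], float(point["epss"])))
    return sorted(history)


def fetch_history(cve_id):
    """Fetch and parse the score history (performs a network request)."""
    with urlopen(epss_url(cve_id, time_series=True), timeout=30) as response:
        return score_history(json.load(response))


# Made-up payload in the assumed layout, so the parser can be tried offline.
sample_payload = {
    "data": [
        {
            "cve": "CVE-2022-48618",
            "epss": "0.002",
            "date": "2024-02-03",
            "time-series": [
                {"epss": "0.001", "date": "2024-02-02"},
                {"epss": "0.001", "date": "2024-02-01"},
            ],
        }
    ]
}

print(epss_url("CVE-2022-48618", time_series=True))
print(score_history(sample_payload))
```

Calling `fetch_history("CVE-2022-48618")` would run the same parser against the live API.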

False Positives and Negatives¶

As with any detection tool, the IDS/IPS and honeypot exploitation observations used to feed the EPSS model will contain False Positives (though these are typically low with signature-based detection systems) and False Negatives:

Quote

Any signature-based detection device is only able to alert on events that it was programmed to observe. Therefore, we are not able to observe vulnerabilities that were exploited but undetected by the sensor because a signature was not written.

The EPSS Model uses the attributes of each vulnerability to predict exploitability (and not the exploitation observations, which are for training and validation only).

- So if a vulnerability is rated low, that's because it shares attributes (or lack of attributes) with many of the non-exploited vulnerabilities.

- Conversely, if it's rated high, it has shared qualities with vulnerabilities that have historically been exploited.

This partially compensates for the False Positives and False Negatives from the IDS/IPS and honeypot exploitation observations, and the Exploitation Types and Environment that EPSS is less suited for.

EPSS Percentile Score for your Environment¶

The number of CVEs found in your environment will be a subset of all published CVEs, and will depend on:

- the technology stacks in your environment

- your ability to detect these CVEs

EPSS does not differentiate between 1 detection of a CVE versus many

EPSS does not differentiate between 1 instance of a CVE and many. In an organization, there may be many instances of a small number of CVEs, and fewer instances of other CVEs.

- E.g. if there's a CVE in a software component that's pervasive in your organization, there will be many instances of that CVE.

- Your remediation effort will depend on the number of instances of each CVE in your environment.

Percentiles are a direct transformation from probabilities and provide a measure of an EPSS probability relative to all other scores. That is, the percentile is the proportion of all values less than or equal to the current rank.

- The EPSS Percentile score is relative to all ~220K published CVEs that have an EPSS score.

- Only a fraction of those CVEs will apply to a typical organization, e.g. on the order of ~10K.

- A user is likely more interested in the EPSS Percentile relative to the CVEs in their organization than relative to all CVEs.

- E.g. a CVE could be at the 60th EPSS Percentile across all CVEs, but at the 90th percentile among the CVEs in the organization (if the organization has few CVEs with a high EPSS score).

Source Code is provided to calculate EPSS percentiles for a list of CVEs

The EPSS Percentile is easily calculated for your organization (based on the subset of CVEs applicable to your organization and their EPSS scores).

Source code is provided with this guide to calculate EPSS percentiles for a list of CVEs.
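To illustrate the idea (this is a minimal sketch, not the source code provided with this guide), a percentile relative to your own CVEs can be computed as the proportion of your scores that are less than or equal to each CVE's score. The function name and the CVE IDs and scores below are hypothetical.

```python
from bisect import bisect_right


def local_percentiles(epss_scores):
    """Map each CVE to its percentile within the supplied set of CVEs.

    epss_scores: dict mapping CVE ID -> EPSS probability for the CVEs
                 found in your environment.
    The percentile is the proportion of supplied scores <= the CVE's score.
    """
    ordered = sorted(epss_scores.values())
    total = len(ordered)
    return {
        cve: bisect_right(ordered, score) / total
        for cve, score in epss_scores.items()
    }


# Made-up scores for CVEs found in a hypothetical organization.
org_scores = {
    "CVE-0000-0001": 0.01,
    "CVE-0000-0002": 0.02,
    "CVE-0000-0003": 0.50,
    "CVE-0000-0004": 0.90,
}

org_percentiles = local_percentiles(org_scores)
for cve, pct in org_percentiles.items():
    print(f"{cve}: EPSS {org_scores[cve]:.2f}, local percentile {pct:.0%}")
```

Because the ranking is computed only over the CVEs supplied, the same CVE can sit at a very different percentile locally than it does across all published CVEs.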

Takeaways

- When applying EPSS to your environment, you should understand that EPSS is best suited to

- Enterprise environments

- Network-based attacks

- Code is provided to calculate EPSS score percentiles for your environment/CVEs

EPSS and CISA KEV¶

Overview

In this section, we apply interpretation of, and guidance on, EPSS to CISA KEV:

- CISA KEV will be used as the reference (source of truth) for active exploitation.

- CISA KEV is the best known and most widely used catalog of CVEs actively exploited in the wild, and is publicly available.

We start with analysis via plots, interpretation, and code:

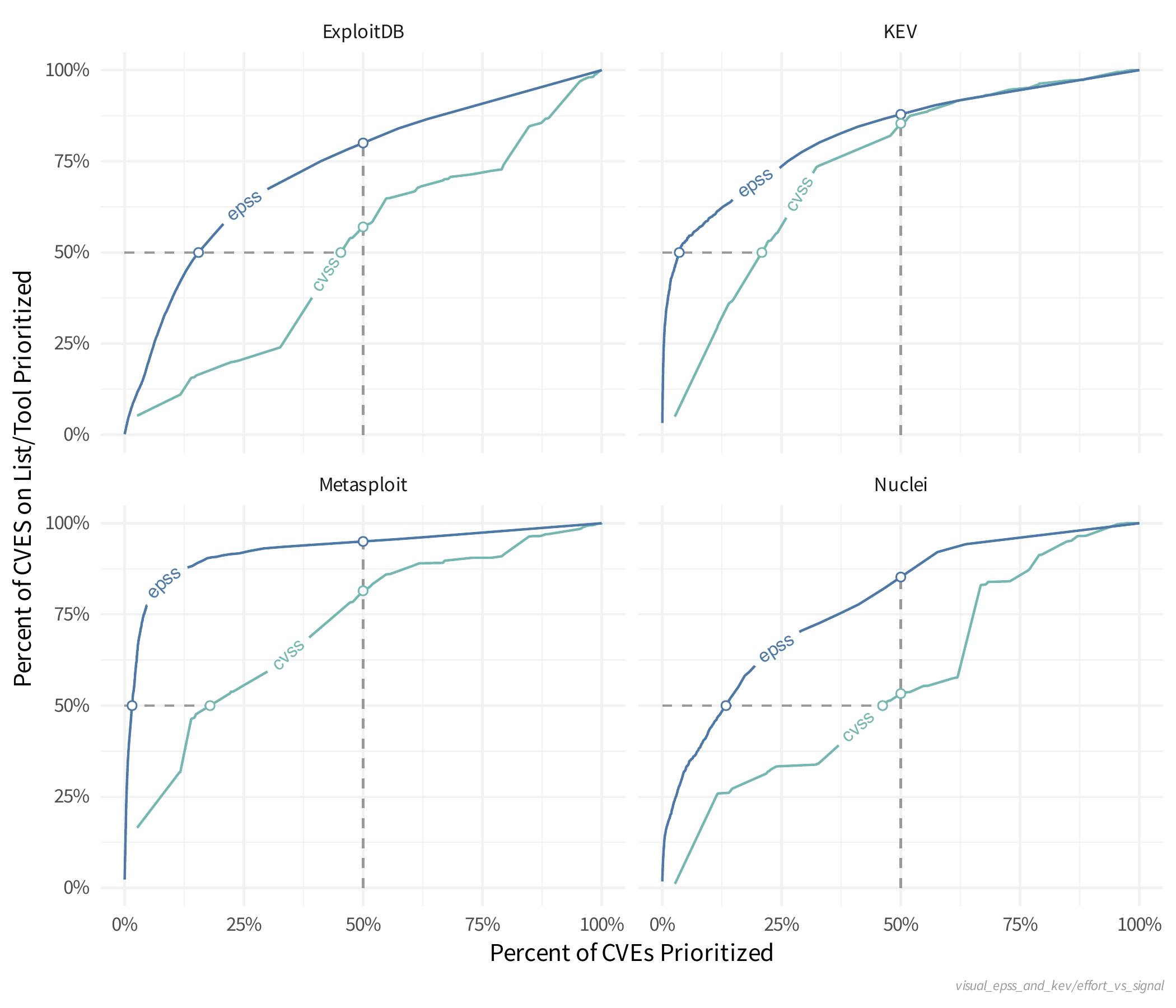

- Analyze the Data Sources relative to CISA KEV

- Analyze EPSS relative to CISA KEV (and these Data Sources)

Then Jay Jacobs (EPSS) presents analysis and interpretation based on data internal to the EPSS model.

User Story

As a user, I want to know what CVEs

- are actively exploited or likely to be

- are not actively exploited or not likely to be

So I can focus on what to remediate first in my environment.

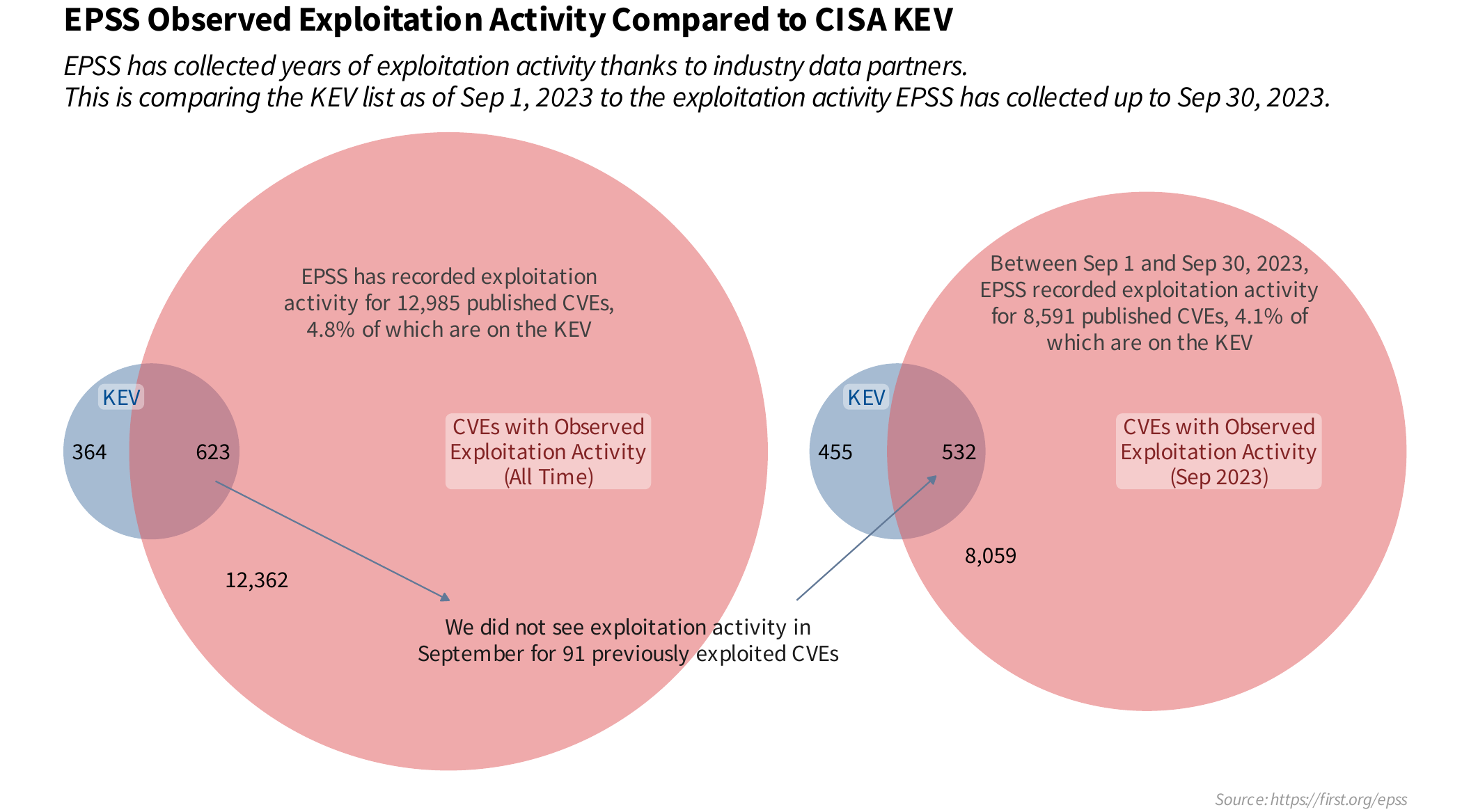

CISA KEV contains a subset of known exploited CVEs

- All CVEs in CISA KEV are actively exploited (see criteria for inclusion in CISA KEV)

- Only ~5% of CVEs are known exploited

- CISA KEV contains a subset of known exploited CVEs

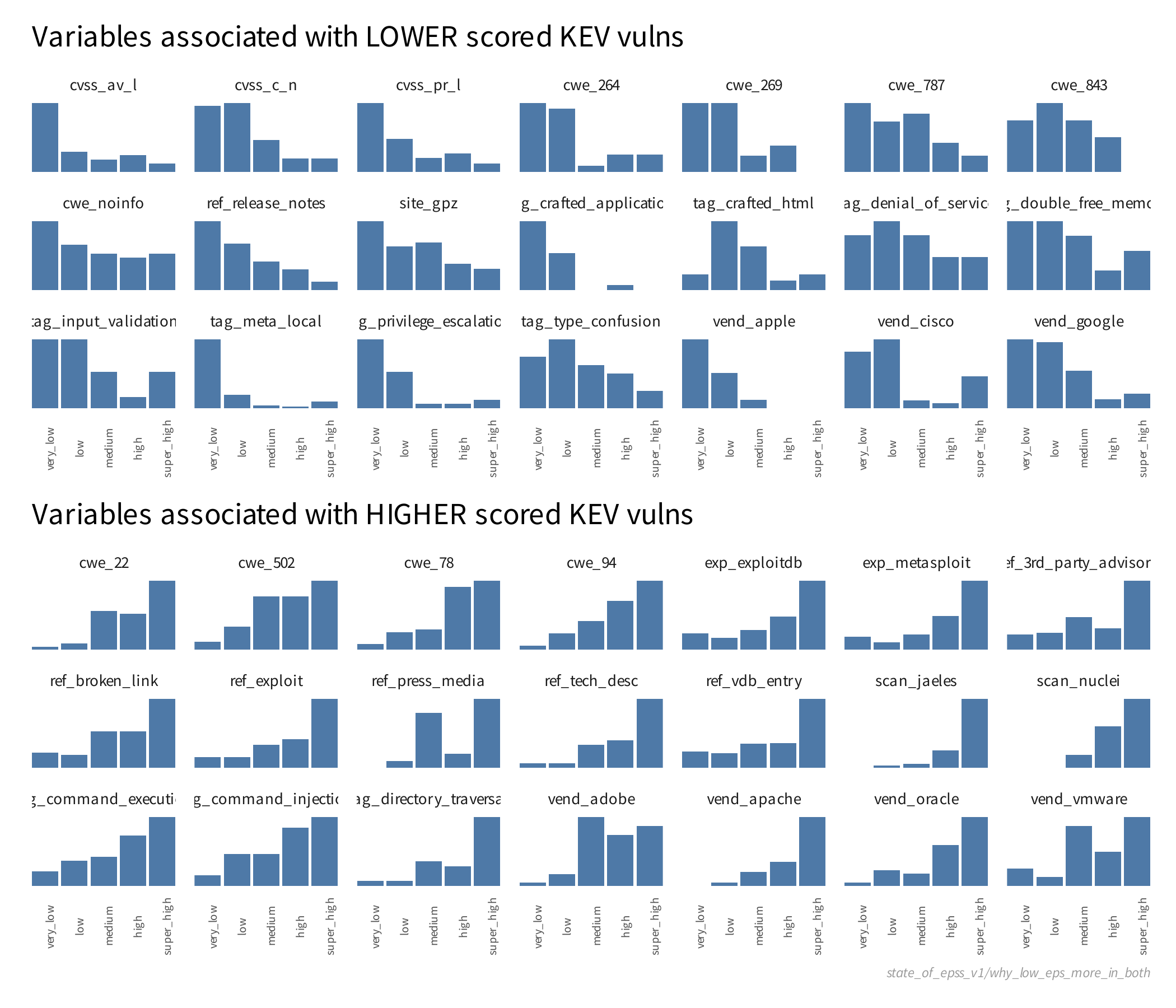

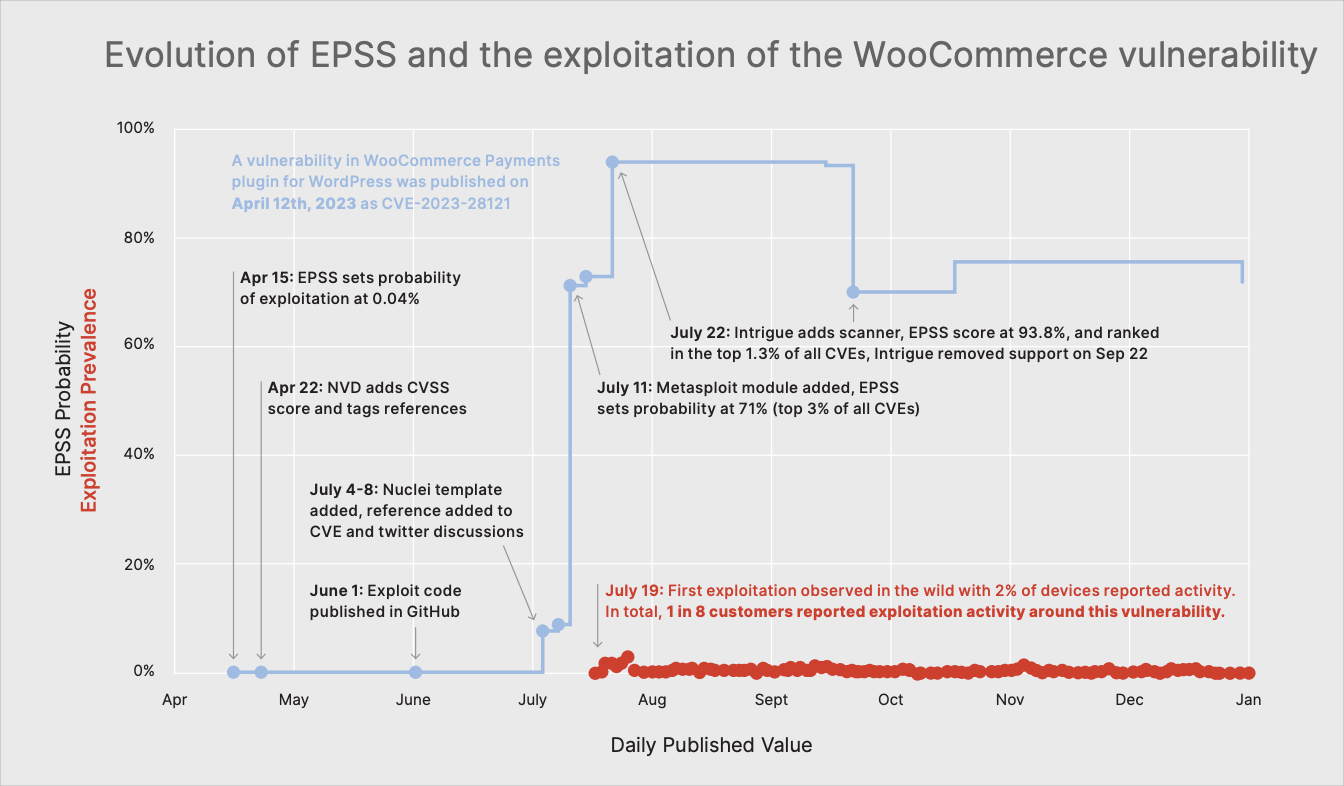

What does EPSS look like for CISA KEV?¶


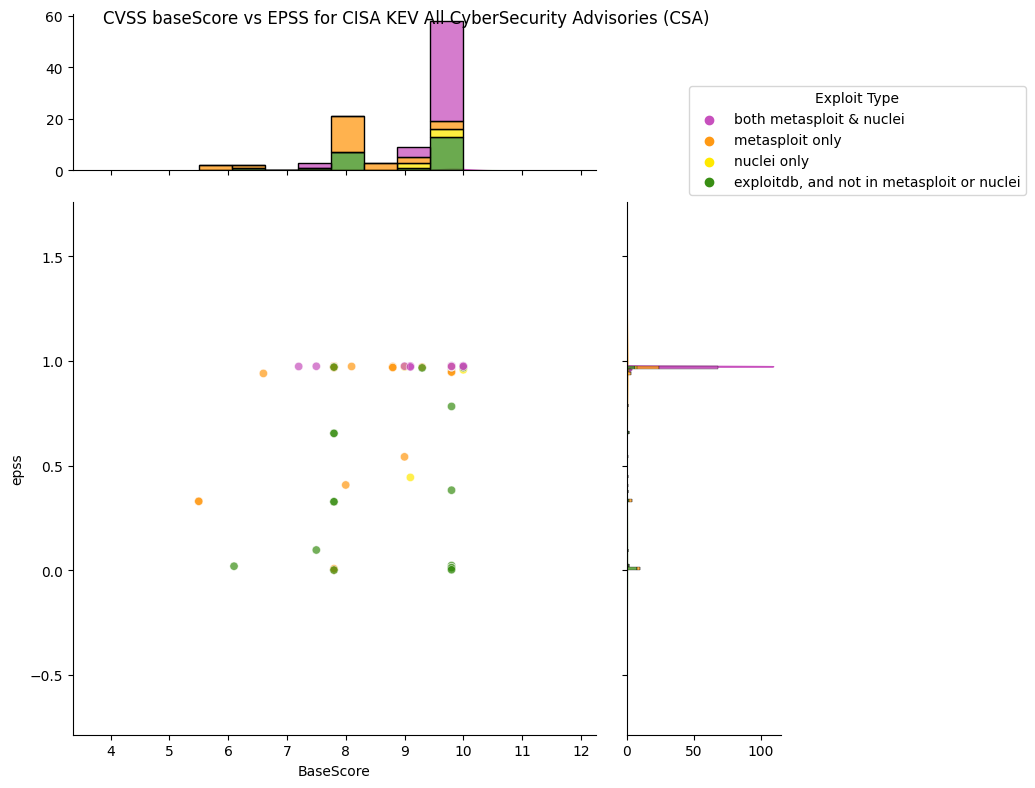
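A comparison of this kind can be reproduced by joining the CISA KEV catalog with EPSS scores. The sketch below assumes the KEV catalog has been downloaded as JSON and decoded into a dict with a `vulnerabilities` list whose entries carry a `cveID` field. The function names, the 0.1 threshold, and the sample data are illustrative assumptions and are not taken from the real catalog.

```python
def kev_cve_ids(catalog):
    """Extract the set of CVE IDs from a decoded CISA KEV catalog."""
    return {item["cveID"] for item in catalog.get("vulnerabilities", [])}


def summarize_kev_epss(kev_ids, epss_scores, threshold=0.1):
    """Count how many KEV CVEs fall at or above, or below, an EPSS threshold."""
    scored = {cve: epss_scores[cve] for cve in kev_ids if cve in epss_scores}
    at_or_above = sum(1 for score in scored.values() if score >= threshold)
    return {
        "kev_total": len(kev_ids),
        "kev_with_score": len(scored),
        "at_or_above_threshold": at_or_above,
        "below_threshold": len(scored) - at_or_above,
    }


# Made-up catalog and scores in the assumed layout, for illustration only.
sample_catalog = {
    "vulnerabilities": [
        {"cveID": "CVE-0000-0001"},
        {"cveID": "CVE-0000-0002"},
        {"cveID": "CVE-0000-0003"},
    ]
}
sample_epss = {
    "CVE-0000-0001": 0.90,
    "CVE-0000-0002": 0.004,
    "CVE-0000-0009": 0.50,  # scored, but not in the sample catalog
}

kev_summary = summarize_kev_epss(kev_cve_ids(sample_catalog), sample_epss)
print(kev_summary)
```

KEV entries that land below the threshold are the cases where known exploitation evidence should supersede the EPSS score.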

What does EPSS look like for CISA KEV CyberSecurity Advisories (CSA)?¶

CISA Cybersecurity Advisories (CSA) represent the Top Routinely Exploited Vulnerabilities from the CISA KEV Catalog

CISA (Cybersecurity and Infrastructure Security Agency) co-authors, with several international cybersecurity agencies, separate Cybersecurity Advisories (CSA) on the Top Routinely Exploited Vulnerabilities from the CISA KEV Catalog, e.g.

- AA23-215A Joint CSA 2022 Top Routinely Exploited Vulnerabilities August 2023

- AA21-209A Joint CSA Top Routinely Exploited Vulnerabilities July 2021

- AA22-279A 2022 covering CVEs from 2022, 2021

- AA22-117A 2022 covering CVEs from 2021

- AA20-133A 2020 covering CVEs from 2016 to 2019

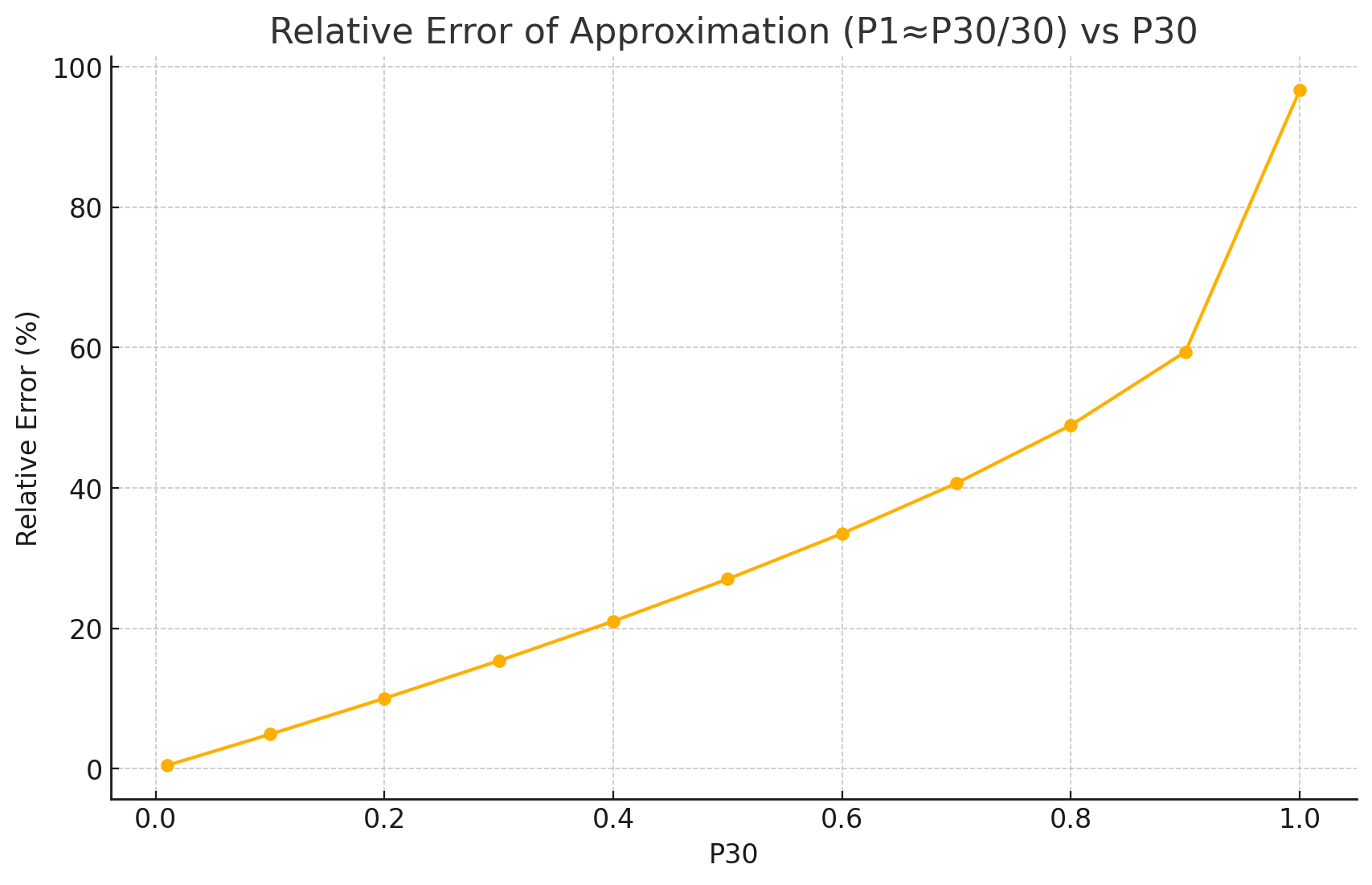

All CISA KEV CyberSecurity Advisories (CSA) Top Routinely Exploited Vulnerabilities¶